MySQL NDB Cluster 8.0

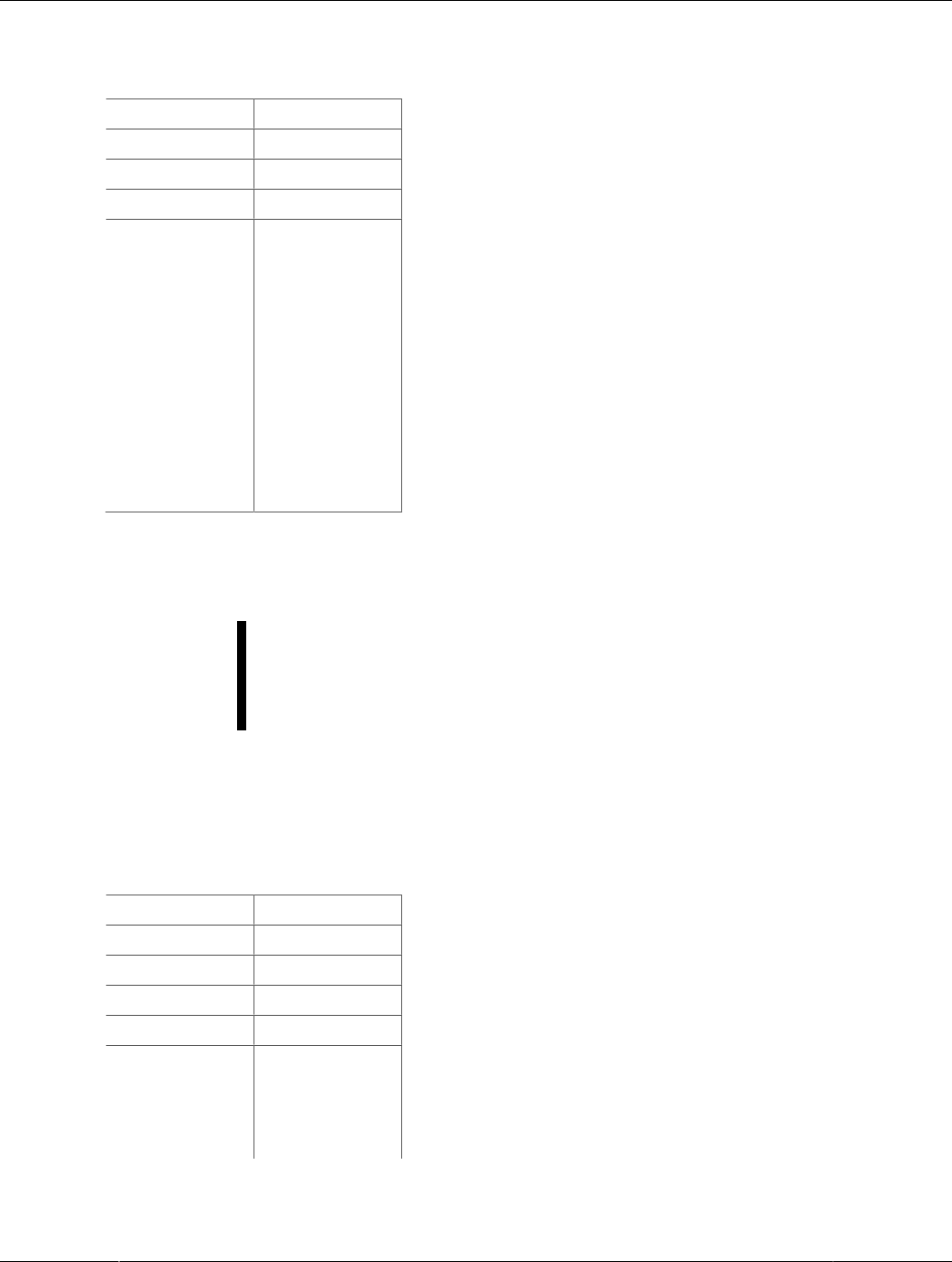

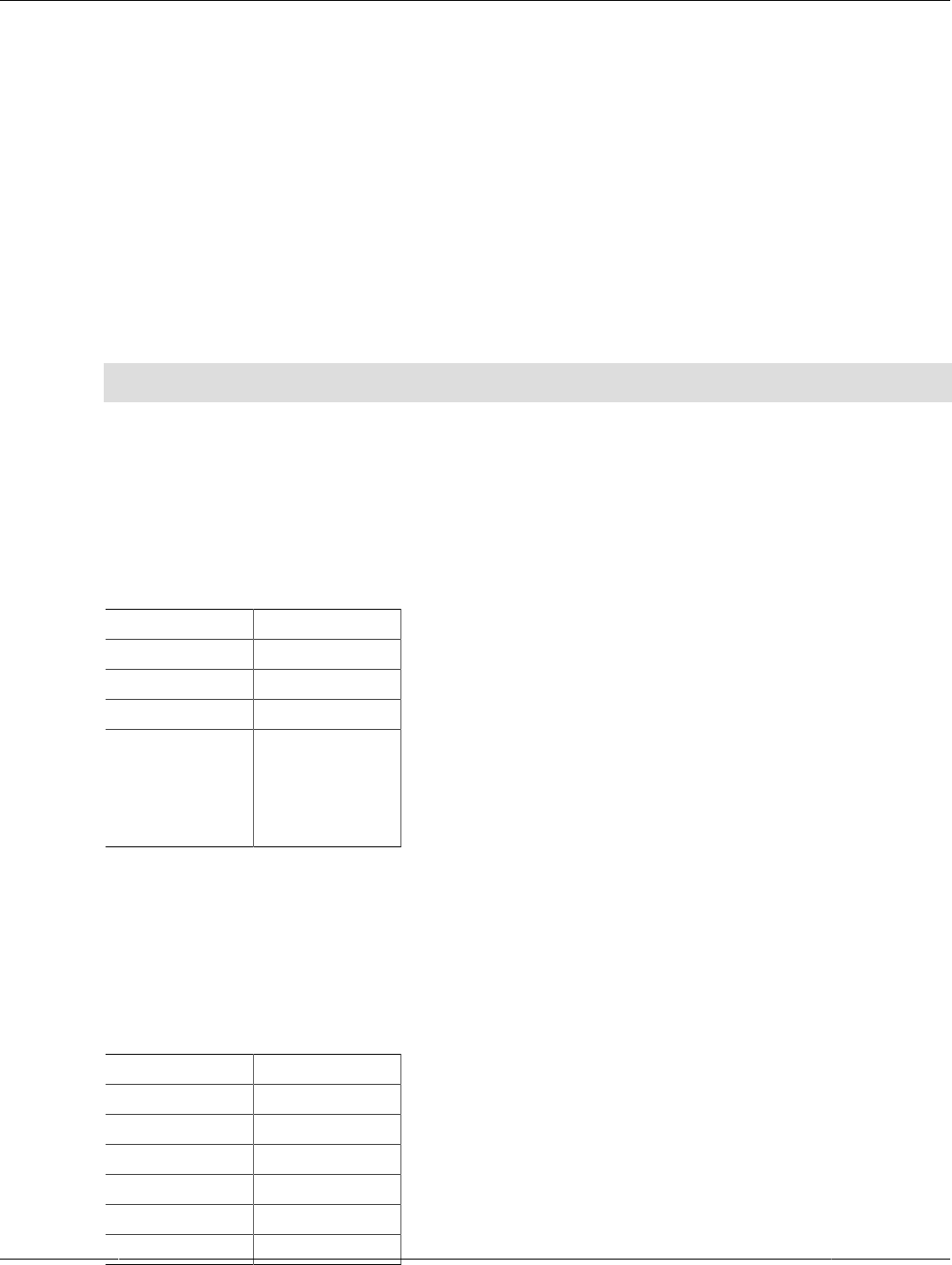

Table of Contents

Preface and Legal Notices ................................................................................................................. ix

1 General Information ......................................................................................................................... 1

2 NDB Cluster Overview .................................................................................................................... 5

2.1 NDB Cluster Core Concepts ................................................................................................. 7

2.2 NDB Cluster Nodes, Node Groups, Fragment Replicas, and Partitions .................................. 10

2.3 NDB Cluster Hardware, Software, and Networking Requirements .......................................... 13

2.4 What is New in MySQL NDB Cluster 8.0 ............................................................................. 14

2.5 Options, Variables, and Parameters Added, Deprecated or Removed in NDB 8.0 ................... 45

2.6 MySQL Server Using InnoDB Compared with NDB Cluster ................................................... 51

2.6.1 Differences Between the NDB and InnoDB Storage Engines ...................................... 52

2.6.2 NDB and InnoDB Workloads .................................................................................... 53

2.6.3 NDB and InnoDB Feature Usage Summary .............................................................. 54

2.7 Known Limitations of NDB Cluster ....................................................................................... 54

2.7.1 Noncompliance with SQL Syntax in NDB Cluster ....................................................... 55

2.7.2 Limits and Differences of NDB Cluster from Standard MySQL Limits ........................... 57

2.7.3 Limits Relating to Transaction Handling in NDB Cluster ............................................. 59

2.7.4 NDB Cluster Error Handling ..................................................................................... 61

2.7.5 Limits Associated with Database Objects in NDB Cluster ........................................... 62

2.7.6 Unsupported or Missing Features in NDB Cluster ...................................................... 62

2.7.7 Limitations Relating to Performance in NDB Cluster .................................................. 63

2.7.8 Issues Exclusive to NDB Cluster .............................................................................. 63

2.7.9 Limitations Relating to NDB Cluster Disk Data Storage .............................................. 64

2.7.10 Limitations Relating to Multiple NDB Cluster Nodes ................................................. 65

2.7.11 Previous NDB Cluster Issues Resolved in NDB Cluster 8.0 ...................................... 66

3 NDB Cluster Installation ................................................................................................................. 67

3.1 Installation of NDB Cluster on Linux .................................................................................... 70

3.1.1 Installing an NDB Cluster Binary Release on Linux .................................................... 70

3.1.2 Installing NDB Cluster from RPM .............................................................................. 72

3.1.3 Installing NDB Cluster Using .deb Files ..................................................................... 77

3.1.4 Building NDB Cluster from Source on Linux .............................................................. 77

3.1.5 Deploying NDB Cluster with Docker Containers ......................................................... 78

3.2 Installing NDB Cluster on Windows ..................................................................................... 80

3.2.1 Installing NDB Cluster on Windows from a Binary Release ......................................... 80

3.2.2 Compiling and Installing NDB Cluster from Source on Windows ................................. 84

3.2.3 Initial Startup of NDB Cluster on Windows ................................................................ 85

3.2.4 Installing NDB Cluster Processes as Windows Services ............................................. 87

3.3 Initial Configuration of NDB Cluster ..................................................................................... 89

3.4 Initial Startup of NDB Cluster .............................................................................................. 91

3.5 NDB Cluster Example with Tables and Data ........................................................................ 92

3.6 Safe Shutdown and Restart of NDB Cluster ......................................................................... 95

3.7 Upgrading and Downgrading NDB Cluster ........................................................................... 96

3.8 The NDB Cluster Auto-Installer (NO LONGER SUPPORTED) ............................................. 102

4 Configuration of NDB Cluster ....................................................................................................... 103

4.1 Quick Test Setup of NDB Cluster ...................................................................................... 103

4.2 Overview of NDB Cluster Configuration Parameters, Options, and Variables ........................ 106

4.2.1 NDB Cluster Data Node Configuration Parameters .................................................. 106

4.2.2 NDB Cluster Management Node Configuration Parameters ...................................... 114

4.2.3 NDB Cluster SQL Node and API Node Configuration Parameters ............................. 115

4.2.4 Other NDB Cluster Configuration Parameters .......................................................... 116

4.2.5 NDB Cluster mysqld Option and Variable Reference ................................................ 117

4.3 NDB Cluster Configuration Files ........................................................................................ 128

4.3.1 NDB Cluster Configuration: Basic Example ............................................................. 129

4.3.2 Recommended Starting Configuration for NDB Cluster ............................................. 132

4.3.3 NDB Cluster Connection Strings ............................................................................. 135

4.3.4 Defining Computers in an NDB Cluster ................................................................... 136

4.3.5 Defining an NDB Cluster Management Server ......................................................... 138

4.3.6 Defining NDB Cluster Data Nodes .......................................................................... 146

4.3.7 Defining SQL and Other API Nodes in an NDB Cluster ............................................ 246

4.3.8 Defining the System ............................................................................................... 257

4.3.9 MySQL Server Options and Variables for NDB Cluster ............................................. 258

4.3.10 NDB Cluster TCP/IP Connections ......................................................................... 323

4.3.11 NDB Cluster TCP/IP Connections Using Direct Connections ................................... 330

4.3.12 NDB Cluster Shared-Memory Connections ............................................................ 331

4.3.13 Data Node Memory Management ......................................................................... 338

4.3.14 Configuring NDB Cluster Send Buffer Parameters .................................................. 342

4.4 Using High-Speed Interconnects with NDB Cluster ............................................................. 343

5 NDB Cluster Programs ................................................................................................................ 345

5.1 ndbd — The NDB Cluster Data Node Daemon .................................................................. 346

5.2 ndbinfo_select_all — Select From ndbinfo Tables ............................................................... 357

5.3 ndbmtd — The NDB Cluster Data Node Daemon (Multi-Threaded) ...................................... 363

5.4 ndb_mgmd — The NDB Cluster Management Server Daemon ............................................ 364

5.5 ndb_mgm — The NDB Cluster Management Client ............................................................ 376

5.6 ndb_blob_tool — Check and Repair BLOB and TEXT columns of NDB Cluster Tables .......... 382

5.7 ndb_config — Extract NDB Cluster Configuration Information .............................................. 389

5.8 ndb_delete_all — Delete All Rows from an NDB Table ....................................................... 402

5.9 ndb_desc — Describe NDB Tables ................................................................................... 407

5.10 ndb_drop_index — Drop Index from an NDB Table .......................................................... 417

5.11 ndb_drop_table — Drop an NDB Table ............................................................................ 422

5.12 ndb_error_reporter — NDB Error-Reporting Utility ............................................................ 427

5.13 ndb_import — Import CSV Data Into NDB ....................................................................... 428

5.14 ndb_index_stat — NDB Index Statistics Utility .................................................................. 446

5.15 ndb_move_data — NDB Data Copy Utility ....................................................................... 454

5.16 ndb_perror — Obtain NDB Error Message Information ...................................................... 460

5.17 ndb_print_backup_file — Print NDB Backup File Contents ................................................ 463

5.18 ndb_print_file — Print NDB Disk Data File Contents ......................................................... 468

5.19 ndb_print_frag_file — Print NDB Fragment List File Contents ............................................ 470

5.20 ndb_print_schema_file — Print NDB Schema File Contents .............................................. 471

5.21 ndb_print_sys_file — Print NDB System File Contents ...................................................... 471

5.22 ndb_redo_log_reader — Check and Print Content of Cluster Redo Log .............................. 472

5.23 ndb_restore — Restore an NDB Cluster Backup .............................................................. 475

5.23.1 Restoring an NDB Backup to a Different Version of NDB Cluster ............................ 504

5.23.2 Restoring to a different number of data nodes ....................................................... 506

5.23.3 Restoring from a backup taken in parallel .............................................................. 509

5.24 ndb_secretsfile_reader — Obtain Key Information from an Encrypted NDB Data File ........... 510

5.25 ndb_select_all — Print Rows from an NDB Table ............................................................. 512

5.26 ndb_select_count — Print Row Counts for NDB Tables .................................................... 519

5.27 ndb_show_tables — Display List of NDB Tables .............................................................. 524

5.28 ndb_size.pl — NDBCLUSTER Size Requirement Estimator ............................................... 529

5.29 ndb_top — View CPU usage information for NDB threads ................................................ 532

5.30 ndb_waiter — Wait for NDB Cluster to Reach a Given Status ........................................... 538

5.31 ndbxfrm — Compress, Decompress, Encrypt, and Decrypt Files Created by NDB Cluster .... 545

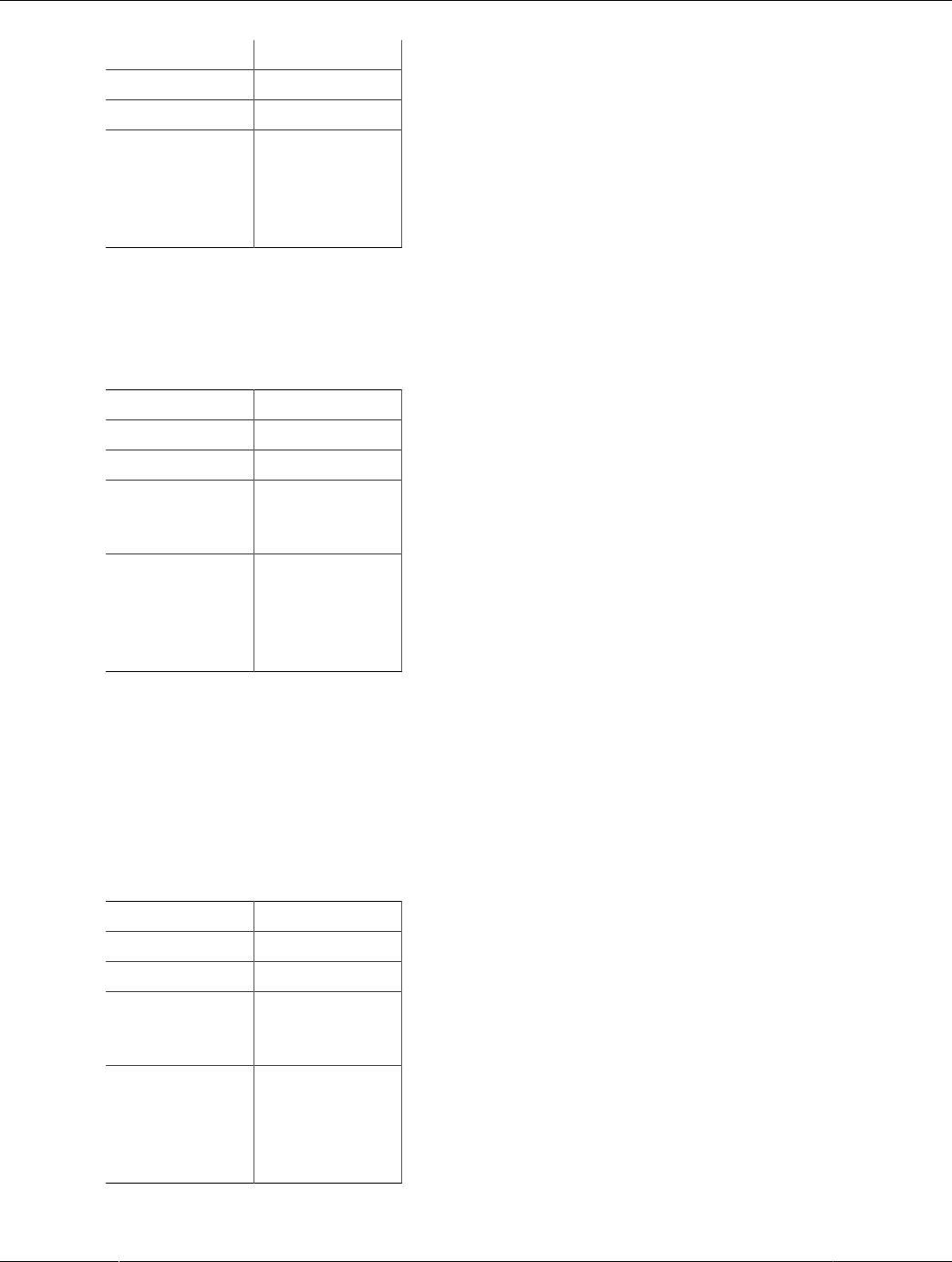

6 Management of NDB Cluster ....................................................................................................... 555

6.1 Commands in the NDB Cluster Management Client ............................................................ 557

6.2 NDB Cluster Log Messages .............................................................................................. 564

6.2.1 NDB Cluster: Messages in the Cluster Log ............................................................. 564

6.2.2 NDB Cluster Log Startup Messages ........................................................................ 579

6.2.3 Event Buffer Reporting in the Cluster Log ............................................................... 580

6.2.4 NDB Cluster: NDB Transporter Errors ..................................................................... 581

6.3 Event Reports Generated in NDB Cluster .......................................................................... 582

6.3.1 NDB Cluster Logging Management Commands ....................................................... 584

6.3.2 NDB Cluster Log Events ........................................................................................ 586

6.3.3 Using CLUSTERLOG STATISTICS in the NDB Cluster Management Client ............... 592

6.4 Summary of NDB Cluster Start Phases ............................................................................. 595

6.5 Performing a Rolling Restart of an NDB Cluster ................................................................. 597

6.6 NDB Cluster Single User Mode ......................................................................................... 599

6.7 Adding NDB Cluster Data Nodes Online ............................................................................ 600

6.7.1 Adding NDB Cluster Data Nodes Online: General Issues ......................................... 600

6.7.2 Adding NDB Cluster Data Nodes Online: Basic procedure ........................................ 602

6.7.3 Adding NDB Cluster Data Nodes Online: Detailed Example ...................................... 603

6.8 Online Backup of NDB Cluster .......................................................................................... 611

6.8.1 NDB Cluster Backup Concepts ............................................................................... 611

6.8.2 Using The NDB Cluster Management Client to Create a Backup ............................... 612

6.8.3 Configuration for NDB Cluster Backups ................................................................... 616

6.8.4 NDB Cluster Backup Troubleshooting ..................................................................... 616

6.8.5 Taking an NDB Backup with Parallel Data Nodes .................................................... 617

6.9 Importing Data Into MySQL Cluster ................................................................................... 617

6.10 MySQL Server Usage for NDB Cluster ............................................................................ 618

6.11 NDB Cluster Disk Data Tables ........................................................................................ 620

6.11.1 NDB Cluster Disk Data Objects ............................................................................ 620

6.11.2 NDB Cluster Disk Data Storage Requirements ...................................................... 625

6.12 Online Operations with ALTER TABLE in NDB Cluster ..................................................... 626

6.13 Privilege Synchronization and NDB_STORED_USER ....................................................... 630

6.14 File System Encryption for NDB Cluster ........................................................................... 631

6.14.1 NDB File System Encryption Setup and Usage ...................................................... 631

6.14.2 NDB File System Encryption Implementation ......................................................... 633

6.14.3 NDB File System Encryption Limitations ................................................................ 634

6.15 NDB API Statistics Counters and Variables ...................................................................... 634

6.16 ndbinfo: The NDB Cluster Information Database ............................................................... 647

6.16.1 The ndbinfo arbitrator_validity_detail Table ............................................................ 652

6.16.2 The ndbinfo arbitrator_validity_summary Table ...................................................... 653

6.16.3 The ndbinfo backup_id Table ................................................................................ 653

6.16.4 The ndbinfo blobs Table ....................................................................................... 654

6.16.5 The ndbinfo blocks Table ..................................................................................... 655

6.16.6 The ndbinfo cluster_locks Table ............................................................................ 655

6.16.7 The ndbinfo cluster_operations Table .................................................................... 657

6.16.8 The ndbinfo cluster_transactions Table ................................................................. 658

6.16.9 The ndbinfo config_nodes Table ........................................................................... 660

6.16.10 The ndbinfo config_params Table ....................................................................... 660

6.16.11 The ndbinfo config_values Table ......................................................................... 661

6.16.12 The ndbinfo counters Table ................................................................................ 664

6.16.13 The ndbinfo cpudata Table ................................................................................. 666

6.16.14 The ndbinfo cpudata_1sec Table ........................................................................ 666

6.16.15 The ndbinfo cpudata_20sec Table ....................................................................... 667

6.16.16 The ndbinfo cpudata_50ms Table ....................................................................... 668

6.16.17 The ndbinfo cpuinfo Table .................................................................................. 669

6.16.18 The ndbinfo cpustat Table .................................................................................. 669

6.16.19 The ndbinfo cpustat_50ms Table ........................................................................ 670

6.16.20 The ndbinfo cpustat_1sec Table ......................................................................... 671

6.16.21 The ndbinfo cpustat_20sec Table ........................................................................ 672

v

MySQL NDB Cluster 8.0

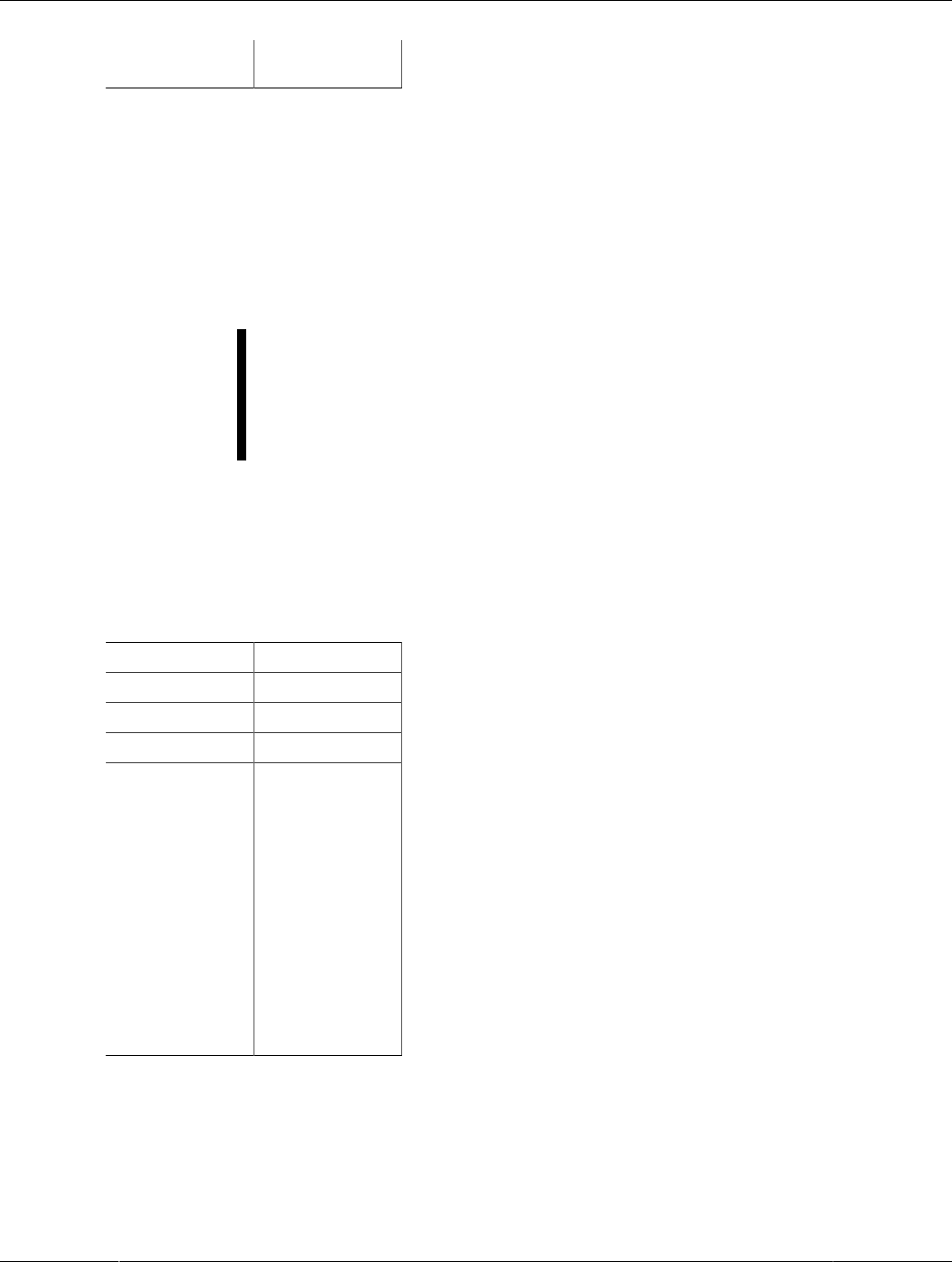

6.16.22 The ndbinfo dictionary_columns Table ................................................................. 673

6.16.23 The ndbinfo dictionary_tables Table .................................................................... 674

6.16.24 The ndbinfo dict_obj_info Table .......................................................................... 676

6.16.25 The ndbinfo dict_obj_tree Table .......................................................................... 677

6.16.26 The ndbinfo dict_obj_types Table ........................................................................ 680

6.16.27 The ndbinfo disk_write_speed_base Table .......................................................... 680

6.16.28 The ndbinfo disk_write_speed_aggregate Table ................................................... 681

6.16.29 The ndbinfo disk_write_speed_aggregate_node Table .......................................... 682

6.16.30 The ndbinfo diskpagebuffer Table ....................................................................... 683

6.16.31 The ndbinfo diskstat Table .................................................................................. 685

6.16.32 The ndbinfo diskstats_1sec Table ....................................................................... 686

6.16.33 The ndbinfo error_messages Table ..................................................................... 687

6.16.34 The ndbinfo events Table ................................................................................... 688

6.16.35 The ndbinfo files Table ....................................................................................... 689

6.16.36 The ndbinfo foreign_keys Table .......................................................................... 690

6.16.37 The ndbinfo hash_maps Table ............................................................................ 691

6.16.38 The ndbinfo hwinfo Table ................................................................................... 691

6.16.39 The ndbinfo index_columns Table ....................................................................... 692

6.16.40 The ndbinfo index_stats Table ............................................................................ 693

6.16.41 The ndbinfo locks_per_fragment Table ................................................................ 693

6.16.42 The ndbinfo logbuffers Table .............................................................................. 695

6.16.43 The ndbinfo logspaces Table .............................................................................. 696

6.16.44 The ndbinfo membership Table ........................................................................... 696

6.16.45 The ndbinfo memoryusage Table ........................................................................ 699

6.16.46 The ndbinfo memory_per_fragment Table ............................................................ 700

6.16.47 The ndbinfo nodes Table .................................................................................... 711

6.16.48 The ndbinfo operations_per_fragment Table ........................................................ 713

6.16.49 The ndbinfo pgman_time_track_stats Table ......................................................... 717

6.16.50 The ndbinfo processes Table .............................................................................. 718

6.16.51 The ndbinfo resources Table .............................................................................. 719

6.16.52 The ndbinfo restart_info Table ............................................................................ 720

6.16.53 The ndbinfo server_locks Table .......................................................................... 724

6.16.54 The ndbinfo server_operations Table .................................................................. 725

6.16.55 The ndbinfo server_transactions Table ................................................................ 727

6.16.56 The ndbinfo table_distribution_status Table ......................................................... 728

6.16.57 The ndbinfo table_fragments Table ..................................................................... 729

6.16.58 The ndbinfo table_info Table ............................................................................... 731

6.16.59 The ndbinfo table_replicas Table ......................................................................... 731

6.16.60 The ndbinfo tc_time_track_stats Table ................................................................ 733

6.16.61 The ndbinfo threadblocks Table .......................................................................... 734

6.16.62 The ndbinfo threads Table .................................................................................. 735

6.16.63 The ndbinfo threadstat Table .............................................................................. 736

6.16.64 The ndbinfo transporter_details Table .................................................................. 737

6.16.65 The ndbinfo transporters Table ........................................................................... 739

6.17 INFORMATION_SCHEMA Tables for NDB Cluster ........................................................... 741

6.18 NDB Cluster and the Performance Schema ..................................................................... 742

6.19 Quick Reference: NDB Cluster SQL Statements ............................................................... 743

6.20 NDB Cluster Security Issues ........................................................................................... 750

6.20.1 NDB Cluster Security and Networking Issues ........................................................ 750

6.20.2 NDB Cluster and MySQL Privileges ...................................................................... 754

6.20.3 NDB Cluster and MySQL Security Procedures ....................................................... 756

7 NDB Cluster Replication .............................................................................................................. 759

7.1 NDB Cluster Replication: Abbreviations and Symbols ......................................................... 761

7.2 General Requirements for NDB Cluster Replication ............................................................ 761

7.3 Known Issues in NDB Cluster Replication .......................................................................... 762

7.4 NDB Cluster Replication Schema and Tables ..................................................................... 770

7.5 Preparing the NDB Cluster for Replication ......................................................................... 777

7.6 Starting NDB Cluster Replication (Single Replication Channel) ............................................ 780

7.7 Using Two Replication Channels for NDB Cluster Replication ............................................. 782

7.8 Implementing Failover with NDB Cluster Replication ........................................................... 783

7.9 NDB Cluster Backups With NDB Cluster Replication .......................................................... 785

7.9.1 NDB Cluster Replication: Automating Synchronization of the Replica to the Source Binary Log ...................................................................................................................... 787

7.9.2 Point-In-Time Recovery Using NDB Cluster Replication ........................................... 790

7.10 NDB Cluster Replication: Bidirectional and Circular Replication ......................................... 791

7.11 NDB Cluster Replication Using the Multithreaded Applier .................................................. 796

7.12 NDB Cluster Replication Conflict Resolution ..................................................................... 799

A NDB Cluster FAQ ....................................................................................................................... 819

Preface and Legal Notices

Licensing information: MySQL NDB Cluster 8.0. If you are using a Commercial release of MySQL NDB Cluster 8.0, see the MySQL NDB Cluster 8.0 Commercial Release License Information User Manual for licensing information, including licensing information relating to third-party software that may be included in this Commercial release. If you are using a Community release of MySQL NDB Cluster 8.0, see the MySQL NDB Cluster 8.0 Community Release License Information User Manual for licensing information, including licensing information relating to third-party software that may be included in this Community release.

Legal Notices

Copyright © 1997, 2024, Oracle and/or its affiliates.

License Restrictions

This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited.

Warranty Disclaimer

The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing.

Restricted Rights Notice

If this is software, software documentation, data (as defined in the Federal Acquisition Regulation), or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable:

U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed, or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software," "commercial computer software documentation," or "limited rights data" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed, or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract. The terms governing the U.S. Government's use of Oracle cloud services are defined by the applicable contract for such services. No other rights are granted to the U.S. Government.

Hazardous Applications Notice

This software or hardware is developed for general use in a variety of information management applications. It is not developed or intended for use in any inherently dangerous applications, including applications that may create a risk of personal injury. If you use this software or hardware in dangerous applications, then you shall be responsible to take all appropriate fail-safe, backup, redundancy, and other measures to ensure its safe use. Oracle Corporation and its affiliates disclaim any liability for any damages caused by use of this software or hardware in dangerous applications.

Trademark Notice

Oracle, Java, MySQL, and NetSuite are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners.

Intel and Intel Inside are trademarks or registered trademarks of Intel Corporation. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. AMD, Epyc, and the AMD logo are trademarks or registered trademarks of Advanced Micro Devices. UNIX is a registered trademark of The Open Group.

Third-Party Content, Products, and Services Disclaimer

This software or hardware and documentation may provide access to or information about content, products, and services from third parties. Oracle Corporation and its affiliates are not responsible for and expressly disclaim all warranties of any kind with respect to third-party content, products, and services unless otherwise set forth in an applicable agreement between you and Oracle. Oracle Corporation and its affiliates will not be responsible for any loss, costs, or damages incurred due to your access to or use of third-party content, products, or services, except as set forth in an applicable agreement between you and Oracle.

Use of This Documentation

This documentation is NOT distributed under a GPL license. Use of this documentation is subject to the following terms:

You may create a printed copy of this documentation solely for your own personal use. Conversion to other formats is allowed as long as the actual content is not altered or edited in any way. You shall not publish or distribute this documentation in any form or on any media, except if you distribute the documentation in a manner similar to how Oracle disseminates it (that is, electronically for download on a Web site with the software) or on a CD-ROM or similar medium, provided however that the documentation is disseminated together with the software on the same medium. Any other use, such as any dissemination of printed copies or use of this documentation, in whole or in part, in another publication, requires the prior written consent from an authorized representative of Oracle. Oracle and/or its affiliates reserve any and all rights to this documentation not expressly granted above.

Documentation Accessibility

For information about Oracle's commitment to accessibility, visit the Oracle Accessibility Program website

at

http://www.oracle.com/pls/topic/lookup?ctx=acc&id=docacc.

Access to Oracle Support for Accessibility

Oracle customers that have purchased support have access to electronic support through My Oracle

Support. For information, visit

http://www.oracle.com/pls/topic/lookup?ctx=acc&id=info or visit http://www.oracle.com/pls/topic/lookup?ctx=acc&id=trs if you are hearing impaired.

Chapter 1 General Information

MySQL NDB Cluster uses the MySQL server with the NDB storage engine. Support for the NDB storage

engine is not included in standard MySQL Server 8.0 binaries built by Oracle. Instead, users of NDB

Cluster binaries from Oracle should upgrade to the most recent binary release of NDB Cluster for

supported platforms—these include RPMs that should work with most Linux distributions. NDB Cluster 8.0

users who build from source should use the sources provided for MySQL 8.0 and build with the options

required to provide NDB support. (Locations where the sources can be obtained are listed later in this

section.)

Important

MySQL NDB Cluster does not support InnoDB Cluster, which must be deployed

using MySQL Server 8.0 with the InnoDB storage engine as well as additional

applications that are not included in the NDB Cluster distribution. MySQL Server

8.0 binaries cannot be used with MySQL NDB Cluster. For more information about

deploying and using InnoDB Cluster, see MySQL AdminAPI. Section 2.6, “MySQL

Server Using InnoDB Compared with NDB Cluster”, discusses differences between

the NDB and InnoDB storage engines.

Supported Platforms. NDB Cluster is currently available and supported on a number of platforms.
For exact levels of support available on specific combinations of operating system versions,
operating system distributions, and hardware platforms, please refer to
https://www.mysql.com/support/supportedplatforms/cluster.html.

Availability. NDB Cluster binary and source packages are available for supported platforms from

https://dev.mysql.com/downloads/cluster/.

NDB Cluster release numbers. NDB 8.0 follows the same release pattern as the MySQL Server 8.0

series of releases, beginning with MySQL 8.0.13 and MySQL NDB Cluster 8.0.13. In this Manual and other

MySQL documentation, we identify these and later NDB Cluster releases employing a version number that

begins with “NDB”. This version number is that of the NDBCLUSTER storage engine used in the NDB 8.0

release, and is the same as the MySQL 8.0 server version on which the NDB Cluster 8.0 release is based.

Version strings used in NDB Cluster software. The version string displayed by the mysql client

supplied with the MySQL NDB Cluster distribution uses this format:

mysql-mysql_server_version-cluster

mysql_server_version represents the version of the MySQL Server on which the NDB Cluster

release is based. For all NDB Cluster 8.0 releases, this is 8.0.n, where n is the release number. Building

from source using -DWITH_NDB or the equivalent adds the -cluster suffix to the version string. (See

Section 3.1.4, “Building NDB Cluster from Source on Linux”, and Section 3.2.2, “Compiling and Installing

NDB Cluster from Source on Windows”.) You can see this format used in the mysql client, as shown here:

$> mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 8.0.38-cluster Source distribution

Type 'help;' or '\h' for help. Type '\c' to clear the buffer.

mysql> SELECT VERSION()\G

*************************** 1. row ***************************

VERSION(): 8.0.38-cluster

1 row in set (0.00 sec)

The first General Availability release of NDB Cluster using MySQL 8.0 is NDB 8.0.19, using MySQL 8.0.19.

The version string displayed by other NDB Cluster programs not normally included with the MySQL 8.0

distribution uses this format:

mysql-mysql_server_version ndb-ndb_engine_version

mysql_server_version represents the version of the MySQL Server on which the NDB Cluster

release is based. For all NDB Cluster 8.0 releases, this is 8.0.n, where n is the release number.

ndb_engine_version is the version of the NDB storage engine used by this release of the NDB Cluster

software. For all NDB 8.0 releases, this number is the same as the MySQL Server version. You can see

this format used in the output of the SHOW command in the ndb_mgm client, like this:

ndb_mgm> SHOW

Connected to Management Server at: localhost:1186

Cluster Configuration

---------------------

[ndbd(NDB)] 2 node(s)

id=1 @10.0.10.6 (mysql-8.0.38 ndb-8.0.38, Nodegroup: 0, *)

id=2 @10.0.10.8 (mysql-8.0.38 ndb-8.0.38, Nodegroup: 0)

[ndb_mgmd(MGM)] 1 node(s)

id=3 @10.0.10.2 (mysql-8.0.38 ndb-8.0.38)

[mysqld(API)] 2 node(s)

id=4 @10.0.10.10 (mysql-8.0.38 ndb-8.0.38)

id=5 (not connected, accepting connect from any host)
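
The two version string formats described above can be picked apart mechanically. The following Python sketch is an illustration only: the function names and the regular expression are assumptions made for this example and are not part of any NDB Cluster program. It extracts the MySQL Server and NDB engine versions from a line of SHOW output and checks a VERSION() value for the -cluster suffix.

```python
import re

# Matches the "mysql-<server_version> ndb-<engine_version>" string that
# appears in ndb_mgm SHOW output. The pattern is an assumption for this sketch.
NODE_VERSION_RE = re.compile(r"mysql-(\d+\.\d+\.\d+) ndb-(\d+\.\d+\.\d+)")


def parse_node_versions(line):
    """Return (mysql_version, ndb_version) from one SHOW line, or None."""
    match = NODE_VERSION_RE.search(line)
    return match.groups() if match else None


def is_cluster_build(version_string):
    """Return True if a VERSION() value carries the -cluster suffix."""
    return version_string.endswith("-cluster")


connected = "id=1 @10.0.10.6 (mysql-8.0.38 ndb-8.0.38, Nodegroup: 0, *)"
unconnected = "id=5 (not connected, accepting connect from any host)"
print(parse_node_versions(connected))
print(parse_node_versions(unconnected))
print(is_cluster_build("8.0.38-cluster"))
```

A node that is not connected reports no version, so the parser returns None for such lines rather than raising an error.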

Compatibility with standard MySQL 8.0 releases. While many standard MySQL schemas and

applications can work using NDB Cluster, it is also true that unmodified applications and database

schemas may be slightly incompatible or have suboptimal performance when run using NDB Cluster (see

Section 2.7, “Known Limitations of NDB Cluster”). Most of these issues can be overcome, but this also

means that you are very unlikely to be able to switch an existing application datastore—that currently

uses, for example, MyISAM or InnoDB—to use the NDB storage engine without allowing for the possibility

of changes in schemas, queries, and applications. A mysqld compiled without NDB support (that is,

built without -DWITH_NDB or -DWITH_NDBCLUSTER_STORAGE_ENGINE) cannot function as a drop-in

replacement for a mysqld that is built with it.

NDB Cluster development source trees. NDB Cluster development trees can also be accessed from

https://github.com/mysql/mysql-server.

The NDB Cluster development sources maintained at https://github.com/mysql/mysql-server are licensed

under the GPL. For information about obtaining MySQL sources using Git and building them yourself, see

Installing MySQL Using a Development Source Tree.

Note

As with MySQL Server 8.0, NDB Cluster 8.0 releases are built using CMake.

NDB Cluster 8.0 is available beginning with NDB 8.0.19 as a General Availability release, and is

recommended for new deployments. NDB Cluster 7.6 and 7.5 are previous GA releases still supported

in production; for information about NDB Cluster 7.6, see What is New in NDB Cluster 7.6. For similar

information about NDB Cluster 7.5, see What is New in NDB Cluster 7.5. NDB Cluster 7.4 and 7.3

are previous GA releases which are no longer maintained. We recommend that new deployments for

production use MySQL NDB Cluster 8.0.

The contents of this chapter are subject to revision as NDB Cluster continues to evolve. Additional

information regarding NDB Cluster can be found on the MySQL website at http://www.mysql.com/products/cluster/.

Additional Resources. More information about NDB Cluster can be found in the following places:

• For answers to some commonly asked questions about NDB Cluster, see Appendix A, NDB Cluster

FAQ.

• The NDB Cluster Forum: https://forums.mysql.com/list.php?25.

• Many NDB Cluster users and developers blog about their experiences with NDB Cluster, and make

feeds of these available through PlanetMySQL.

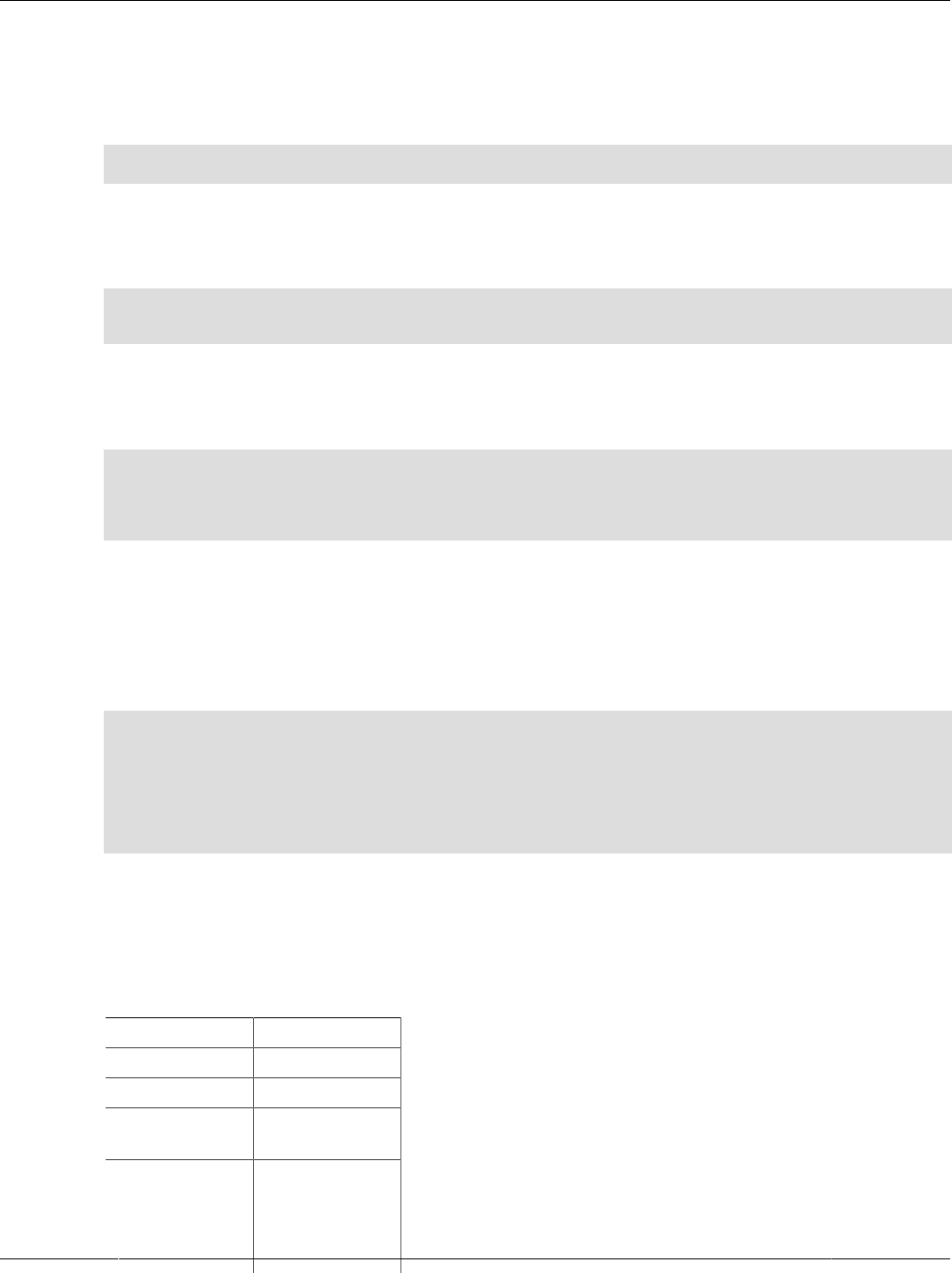

Chapter 2 NDB Cluster Overview

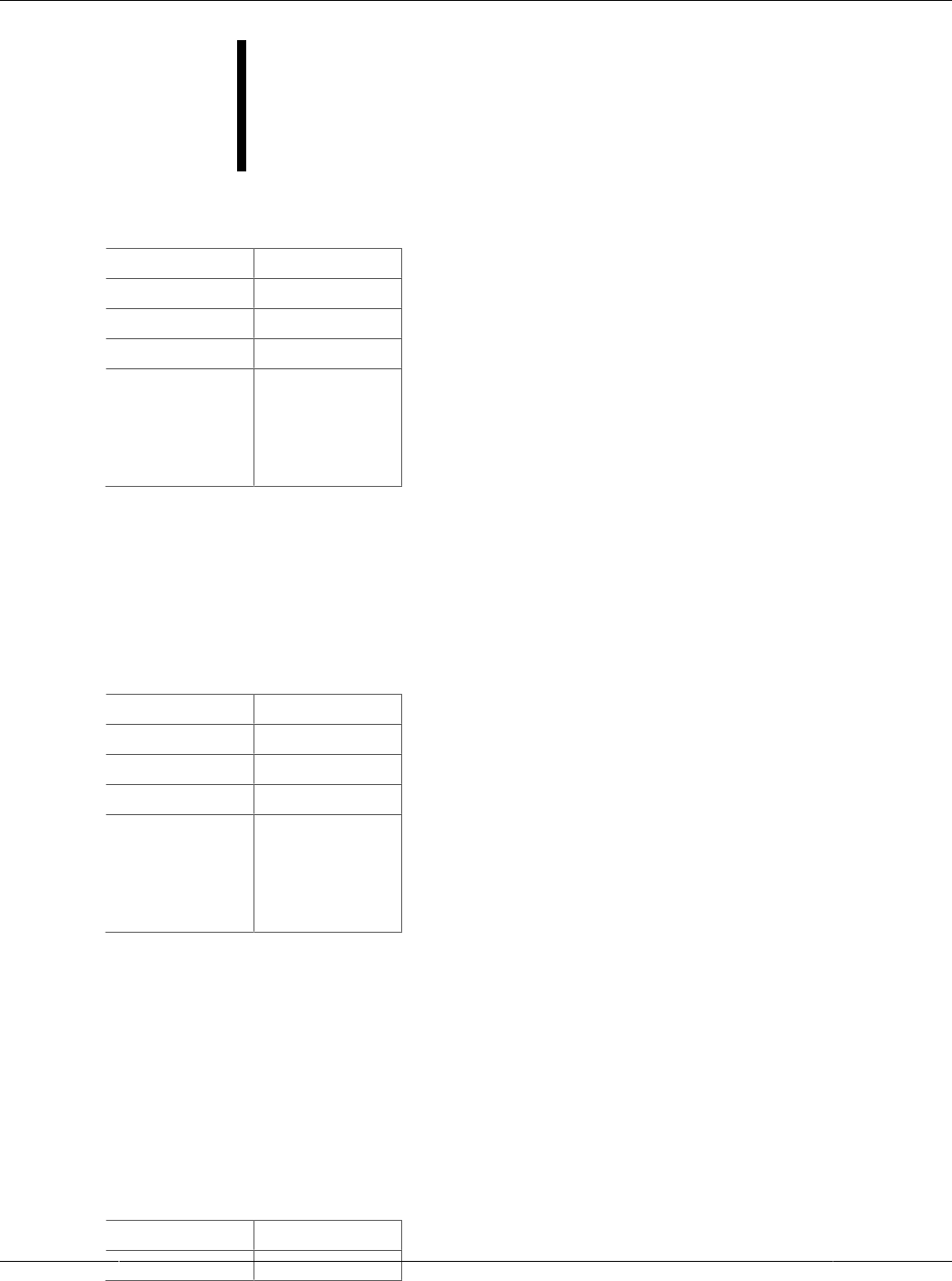

Table of Contents

2.1 NDB Cluster Core Concepts ......................................................................................................... 7

2.2 NDB Cluster Nodes, Node Groups, Fragment Replicas, and Partitions .......................................... 10

2.3 NDB Cluster Hardware, Software, and Networking Requirements .................................................. 13

2.4 What is New in MySQL NDB Cluster 8.0 ..................................................................................... 14

2.5 Options, Variables, and Parameters Added, Deprecated or Removed in NDB 8.0 ........................... 45

2.6 MySQL Server Using InnoDB Compared with NDB Cluster ........................................................... 51

2.6.1 Differences Between the NDB and InnoDB Storage Engines .............................................. 52

2.6.2 NDB and InnoDB Workloads ............................................................................................ 53

2.6.3 NDB and InnoDB Feature Usage Summary ...................................................................... 54

2.7 Known Limitations of NDB Cluster ............................................................................................... 54

2.7.1 Noncompliance with SQL Syntax in NDB Cluster ............................................................... 55

2.7.2 Limits and Differences of NDB Cluster from Standard MySQL Limits ................................... 57

2.7.3 Limits Relating to Transaction Handling in NDB Cluster ..................................................... 59

2.7.4 NDB Cluster Error Handling ............................................................................................. 61

2.7.5 Limits Associated with Database Objects in NDB Cluster ................................................... 62

2.7.6 Unsupported or Missing Features in NDB Cluster .............................................................. 62

2.7.7 Limitations Relating to Performance in NDB Cluster .......................................................... 63

2.7.8 Issues Exclusive to NDB Cluster ...................................................................................... 63

2.7.9 Limitations Relating to NDB Cluster Disk Data Storage ...................................................... 64

2.7.10 Limitations Relating to Multiple NDB Cluster Nodes ......................................................... 65

2.7.11 Previous NDB Cluster Issues Resolved in NDB Cluster 8.0 .............................................. 66

NDB Cluster is a technology that enables clustering of in-memory databases in a shared-nothing system.

The shared-nothing architecture enables the system to work with very inexpensive hardware, and with a

minimum of specific requirements for hardware or software.

NDB Cluster is designed not to have any single point of failure. In a shared-nothing system, each

component is expected to have its own memory and disk, and the use of shared storage mechanisms such

as network shares, network file systems, and SANs is not recommended or supported.

NDB Cluster integrates the standard MySQL server with an in-memory clustered storage engine called NDB

(which stands for “Network DataBase”). In our documentation, the term NDB refers to the part of the setup

that is specific to the storage engine, whereas “MySQL NDB Cluster” refers to the combination of one or

more MySQL servers with the NDB storage engine.

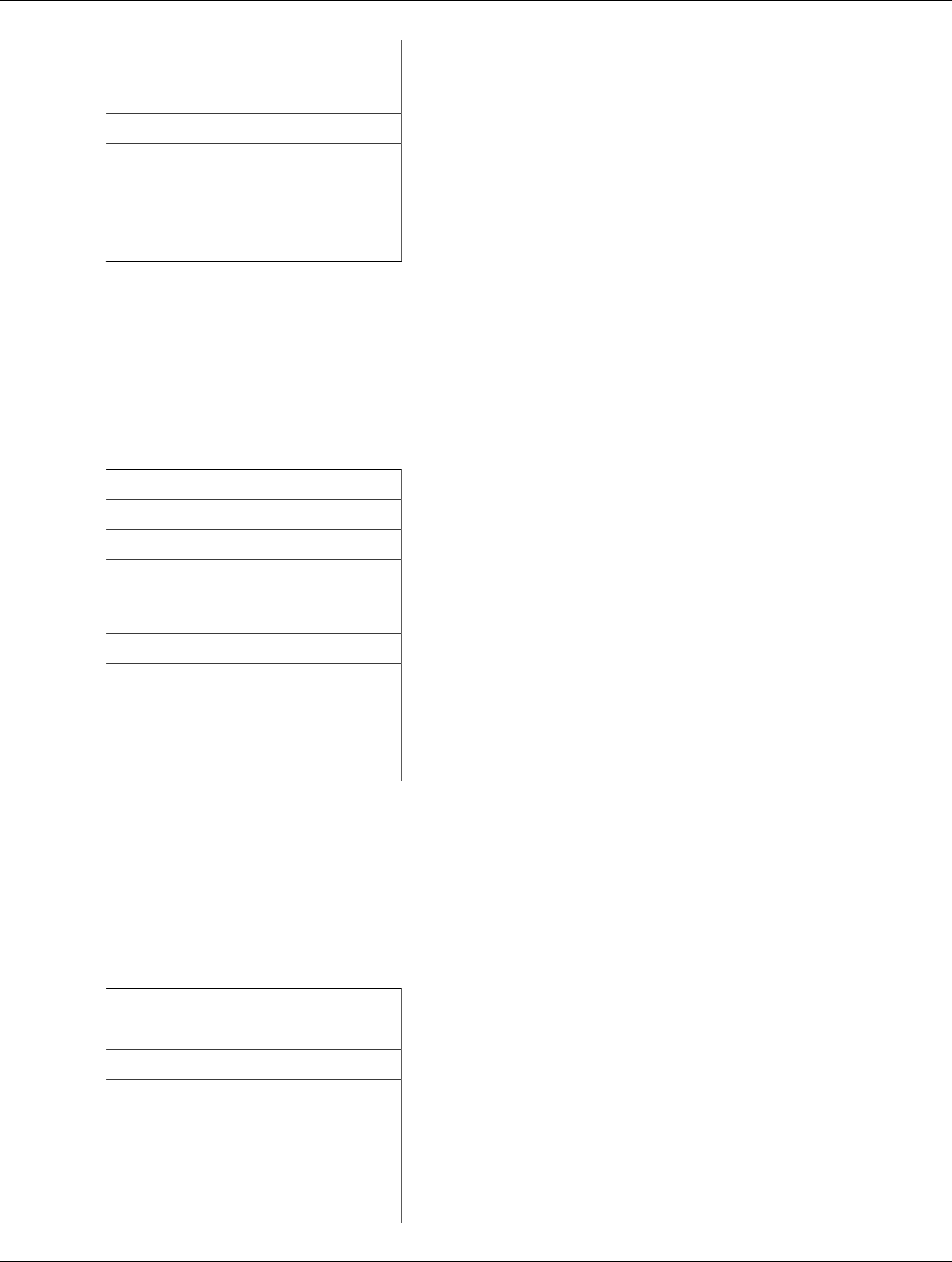

An NDB Cluster consists of a set of computers, known as hosts, each running one or more processes.

These processes, known as nodes, may include MySQL servers (for access to NDB data), data nodes

(for storage of the data), one or more management servers, and possibly other specialized data access

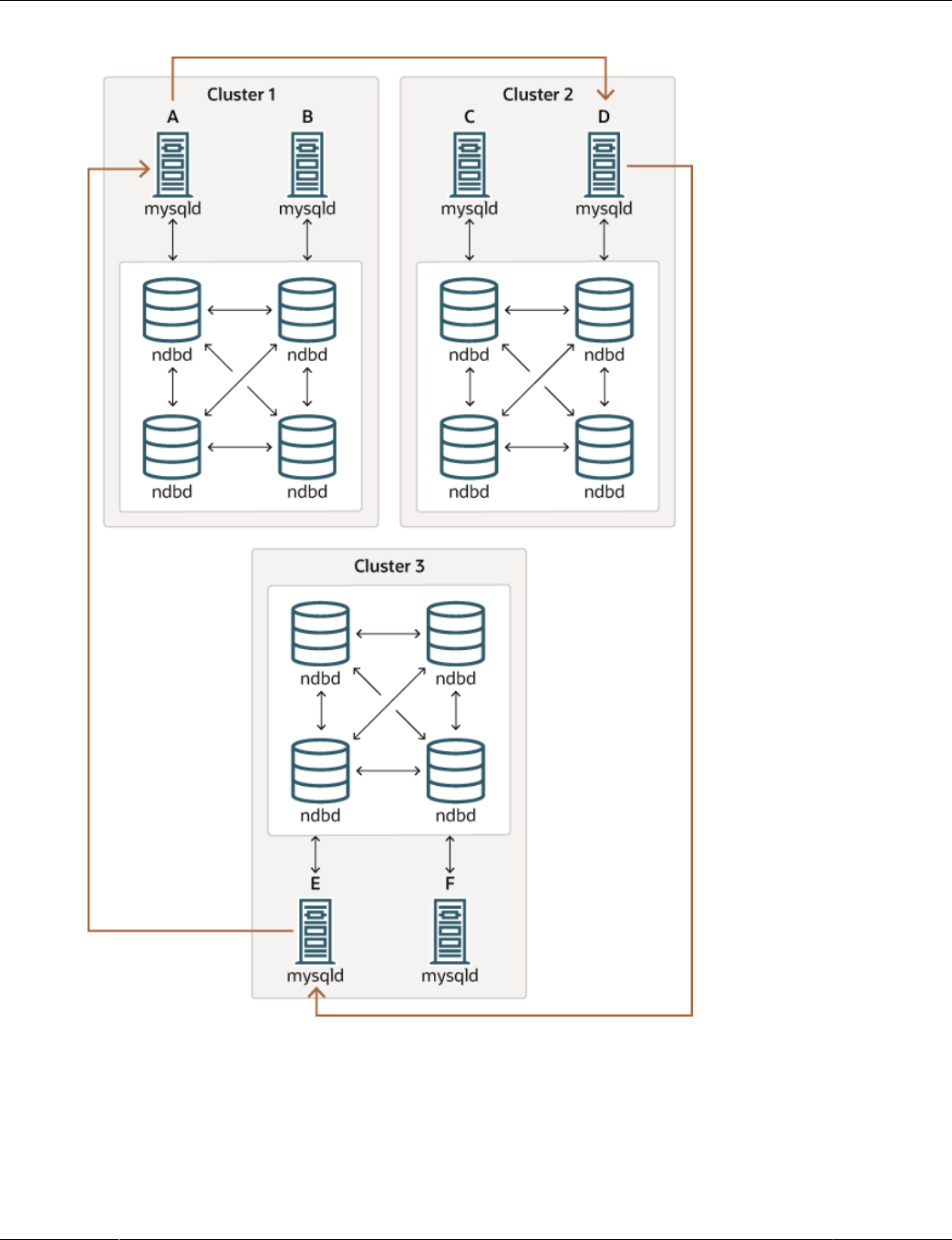

programs. The relationship of these components in an NDB Cluster is shown here:

5

Figure 2.1 NDB Cluster Components

All these programs work together to form an NDB Cluster (see Chapter 5, NDB Cluster Programs). When

data is stored by the NDB storage engine, the tables (and table data) are stored in the data nodes. Such

tables are directly accessible from all other MySQL servers (SQL nodes) in the cluster. Thus, in a payroll

application storing data in a cluster, if one application updates the salary of an employee, all other MySQL

servers that query this data can see this change immediately.

As of NDB 8.0.31, an NDB Cluster 8.0 SQL node uses the mysqld server daemon which is the same as

the mysqld supplied with MySQL Server 8.0 distributions. In NDB 8.0.30 and previous releases, it differed

in a number of critical respects from the mysqld binary supplied with MySQL Server, and the two versions

of mysqld were not interchangeable. You should keep in mind that an instance of mysqld, regardless of

version, that is not connected to an NDB Cluster cannot use the NDB storage engine and cannot access

any NDB Cluster data.

The data stored in the data nodes for NDB Cluster can be mirrored; the cluster can handle failures of

individual data nodes with no other impact than that a small number of transactions are aborted due to

losing the transaction state. Because transactional applications are expected to handle transaction failure,

this should not be a source of problems.

Individual nodes can be stopped and restarted, and can then rejoin the system (cluster). Rolling restarts

(in which all nodes are restarted in turn) are used in making configuration changes and software upgrades

(see Section 6.5, “Performing a Rolling Restart of an NDB Cluster”). Rolling restarts are also used as

part of the process of adding new data nodes online (see Section 6.7, “Adding NDB Cluster Data Nodes

Online”). For more information about data nodes, how they are organized in an NDB Cluster, and how

they handle and store NDB Cluster data, see Section 2.2, “NDB Cluster Nodes, Node Groups, Fragment

Replicas, and Partitions”.

Backing up and restoring NDB Cluster databases can be done using the NDB-native functionality found

in the NDB Cluster management client and the ndb_restore program included in the NDB Cluster

distribution. For more information, see Section 6.8, “Online Backup of NDB Cluster”, and Section 5.23,

“ndb_restore — Restore an NDB Cluster Backup”. You can also use the standard MySQL functionality

provided for this purpose in mysqldump and the MySQL server. See mysqldump — A Database Backup

Program, for more information.

NDB Cluster nodes can employ different transport mechanisms for inter-node communications; TCP/IP

over standard 100 Mbps or faster Ethernet hardware is used in most real-world deployments.

2.1 NDB Cluster Core Concepts

NDBCLUSTER (also known as NDB) is an in-memory storage engine offering high-availability and data-persistence features.

The NDBCLUSTER storage engine can be configured with a range of failover and load-balancing options,

but it is easiest to start with the storage engine at the cluster level. NDB Cluster's NDB storage engine

contains a complete set of data, dependent only on other data within the cluster itself.

The “Cluster” portion of NDB Cluster is configured independently of the MySQL servers. In an NDB

Cluster, each part of the cluster is considered to be a node.

Note

In many contexts, the term “node” is used to indicate a computer, but when

discussing NDB Cluster it means a process. It is possible to run multiple nodes on a

single computer; for a computer on which one or more cluster nodes are being run

we use the term cluster host.

There are three types of cluster nodes, and in a minimal NDB Cluster configuration, there are at least three

nodes, one of each of these types:

• Management node: The role of this type of node is to manage the other nodes within the NDB Cluster,

performing such functions as providing configuration data, starting and stopping nodes, and running

backups. Because this node type manages the configuration of the other nodes, a node of this type

should be started first, before any other node. A management node is started with the command

ndb_mgmd.

• Data node: This type of node stores cluster data. There are as many data nodes as there are fragment

replicas, times the number of fragments (see Section 2.2, “NDB Cluster Nodes, Node Groups, Fragment

Replicas, and Partitions”). For example, with two fragment replicas, each having two fragments, you

need four data nodes. One fragment replica is sufficient for data storage, but provides no redundancy;

therefore, it is recommended to have two (or more) fragment replicas to provide redundancy, and thus

high availability. A data node is started with the command ndbd (see Section 5.1, “ndbd — The NDB

Cluster Data Node Daemon”) or ndbmtd (see Section 5.3, “ndbmtd — The NDB Cluster Data Node

Daemon (Multi-Threaded)”).

NDB Cluster tables are normally stored completely in memory rather than on disk (this is why we refer to

NDB Cluster as an in-memory database). However, some NDB Cluster data can be stored on disk; see

Section 6.11, “NDB Cluster Disk Data Tables”, for more information.

• SQL node: This is a node that accesses the cluster data. In the case of NDB Cluster, an SQL node is a

traditional MySQL server that uses the NDBCLUSTER storage engine. An SQL node is a mysqld process

started with the --ndbcluster and --ndb-connectstring options, which are explained elsewhere

in this chapter, possibly with additional MySQL server options as well.

An SQL node is actually just a specialized type of API node, which designates any application which

accesses NDB Cluster data. Another example of an API node is the ndb_restore utility that is used

to restore a cluster backup. It is possible to write such applications using the NDB API. For basic

information about the NDB API, see Getting Started with the NDB API.

Important

It is not realistic to expect to employ a three-node setup in a production

environment. Such a configuration provides no redundancy; to benefit from NDB

Cluster's high-availability features, you must use multiple data and SQL nodes. The

use of multiple management nodes is also highly recommended.
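
The node-count rules above can be expressed as a small check. The following Python sketch is illustrative only; the labels "mgm", "data", and "sql" and the three result strings are assumptions made for this example. It reports whether a planned set of nodes contains one of each type, and whether it has the multiple data and SQL nodes that the preceding note calls for.

```python
from collections import Counter

# Short labels for the three node types; these names are an assumption
# made for this sketch.
REQUIRED_TYPES = ("mgm", "data", "sql")


def check_cluster(node_types):
    """Classify a planned cluster given a list of node type labels.

    Returns "invalid" if any of the three node types is missing,
    "multi" if there are multiple data nodes and multiple SQL nodes,
    and "minimal" otherwise.
    """
    counts = Counter(node_types)
    if any(counts[node_type] == 0 for node_type in REQUIRED_TYPES):
        return "invalid"
    # Having several data nodes is necessary for redundancy but not
    # sufficient: the number of fragment replicas also matters.
    if counts["data"] > 1 and counts["sql"] > 1:
        return "multi"
    return "minimal"


print(check_cluster(["mgm", "data", "sql"]))
print(check_cluster(["mgm", "data", "data", "sql", "sql"]))
```

This check counts processes only; it says nothing about how the nodes are distributed across cluster hosts.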

For a brief introduction to the relationships between nodes, node groups, fragment replicas, and partitions

in NDB Cluster, see Section 2.2, “NDB Cluster Nodes, Node Groups, Fragment Replicas, and Partitions”.

Configuration of a cluster involves configuring each individual node in the cluster and setting up individual

communication links between nodes. NDB Cluster is currently designed with the intention that data nodes

are homogeneous in terms of processor power, memory space, and bandwidth. In addition, to provide a

single point of configuration, all configuration data for the cluster as a whole is located in one configuration

file.

The management server manages the cluster configuration file and the cluster log. Each node in the

cluster retrieves the configuration data from the management server, and so requires a way to determine

where the management server resides. When interesting events occur in the data nodes, the nodes

transfer information about these events to the management server, which then writes the information to the

cluster log.

In addition, there can be any number of cluster client processes or applications. These include standard

MySQL clients, NDB-specific API programs, and management clients. These are described in the next few

paragraphs.

Standard MySQL clients. NDB Cluster can be used with existing MySQL applications written in PHP,

Perl, C, C++, Java, Python, and so on. Such client applications send SQL statements to and receive

responses from MySQL servers acting as NDB Cluster SQL nodes in much the same way that they interact

with standalone MySQL servers.

MySQL clients using an NDB Cluster as a data source can be modified to take advantage of the ability

to connect with multiple MySQL servers to achieve load balancing and failover. For example, Java

clients using Connector/J 5.0.6 and later can use jdbc:mysql:loadbalance:// URLs (improved in

Connector/J 5.1.7) to achieve load balancing transparently; for more information about using Connector/J

with NDB Cluster, see Using Connector/J with NDB Cluster.
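
For clients in other languages, failover across several SQL nodes can be handled in application code. The following Python sketch shows the general idea under stated assumptions: `connect` is a hypothetical, caller-supplied function that takes a host name and either returns a connection or raises ConnectionError, and the host names are placeholders. It is not how Connector/J implements load balancing.

```python
def connect_with_failover(hosts, connect):
    """Try each SQL node in turn and return (host, connection).

    `connect` is a caller-supplied function (hypothetical here) that takes
    a host name and returns a connection object or raises ConnectionError.
    """
    failed = []
    for host in hosts:
        try:
            return host, connect(host)
        except ConnectionError:
            # This SQL node is unavailable; move on to the next one.
            failed.append(host)
    raise ConnectionError(f"no SQL node reachable, tried: {failed}")
```

Because every SQL node in the cluster sees the same NDB data, the application can continue with whichever node accepts the connection.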

NDB client programs. Client programs can be written that access NDB Cluster data directly from the

NDBCLUSTER storage engine, bypassing any MySQL Servers that may be connected to the cluster, using

the NDB API, a high-level C++ API. Such applications may be useful for specialized purposes where an

SQL interface to the data is not needed. For more information, see The NDB API.

NDB-specific Java applications can also be written for NDB Cluster using the NDB Cluster Connector for

Java. This NDB Cluster Connector includes ClusterJ, a high-level database API similar to object-relational

mapping persistence frameworks such as Hibernate and JPA that connect directly to NDBCLUSTER, and so

does not require access to a MySQL Server. See Java and NDB Cluster, and The ClusterJ API and Data

Object Model, for more information.

NDB Cluster also supports applications written in JavaScript using Node.js. The MySQL Connector for

JavaScript includes adapters for direct access to the NDB storage engine as well as for the MySQL

Server. Applications using this Connector are typically event-driven and use a domain object model similar

in many ways to that employed by ClusterJ. For more information, see MySQL NoSQL Connector for

JavaScript.

Management clients. These clients connect to the management server and provide commands for

starting and stopping nodes gracefully, starting and stopping message tracing (debug versions only),

showing node versions and status, starting and stopping backups, and so on. An example of this type

of program is the ndb_mgm management client supplied with NDB Cluster (see Section 5.5, “ndb_mgm

— The NDB Cluster Management Client”). Such applications can be written using the MGM API, a

C-language API that communicates directly with one or more NDB Cluster management servers. For more

information, see The MGM API.

Oracle also makes available MySQL Cluster Manager, which provides an advanced command-line

interface simplifying many complex NDB Cluster management tasks, such as restarting an NDB Cluster with

a large number of nodes. The MySQL Cluster Manager client also supports commands for getting and

setting the values of most node configuration parameters as well as mysqld server options and variables

relating to NDB Cluster. MySQL Cluster Manager 8.0 provides support for NDB 8.0. See MySQL Cluster

Manager 8.0.39 User Manual, for more information.

Event logs. NDB Cluster logs events by category (startup, shutdown, errors, checkpoints, and so

on), priority, and severity. A complete listing of all reportable events may be found in Section 6.3, “Event

Reports Generated in NDB Cluster”. Event logs are of the two types listed here:

• Cluster log: Keeps a record of all desired reportable events for the cluster as a whole.

• Node log: A separate log which is also kept for each individual node.

Note

Under normal circumstances, it is necessary and sufficient to keep and examine

only the cluster log. The node logs need be consulted only for application

development and debugging purposes.

Checkpoint. Generally speaking, when data is saved to disk, it is said that a checkpoint has been

reached. More specific to NDB Cluster, a checkpoint is a point in time where all committed transactions

are stored on disk. With regard to the NDB storage engine, there are two types of checkpoints which work

together to ensure that a consistent view of the cluster's data is maintained. These are shown in the

following list:

• Local Checkpoint (LCP): This is a checkpoint that is specific to a single node; however, LCPs take place

for all nodes in the cluster more or less concurrently. An LCP usually occurs every few minutes; the

precise interval varies, and depends upon the amount of data stored by the node, the level of cluster

activity, and other factors.

NDB 8.0 supports partial LCPs, which can significantly improve performance under some conditions.

See the descriptions of the EnablePartialLcp and RecoveryWork configuration parameters which

enable partial LCPs and control the amount of storage they use.

• Global Checkpoint (GCP): A GCP occurs every few seconds, when transactions for all nodes are

synchronized and the redo-log is flushed to disk.

For more information about the files and directories created by local checkpoints and global checkpoints,

see NDB Cluster Data Node File System Directory.

Transporter. We use the term transporter for the data transport mechanism employed between data

nodes. MySQL NDB Cluster 8.0 supports three of these, which are listed here:

• TCP/IP over Ethernet. See Section 4.3.10, “NDB Cluster TCP/IP Connections”.

• Direct TCP/IP. Uses machine-to-machine connections. See Section 4.3.11, “NDB Cluster TCP/IP

Connections Using Direct Connections”.

Although this transporter uses the same TCP/IP protocol as mentioned in the previous item, it requires

setting up the hardware differently and is configured differently as well. For this reason, it is considered a

separate transport mechanism for NDB Cluster.

• Shared memory (SHM). See Section 4.3.12, “NDB Cluster Shared-Memory Connections”.

Because it is ubiquitous, most users employ TCP/IP over Ethernet for NDB Cluster.

Regardless of the transporter used, NDB attempts to make sure that communication between data node

processes is performed using chunks that are as large as possible since this benefits all types of data

transmission.

2.2 NDB Cluster Nodes, Node Groups, Fragment Replicas, and Partitions

This section discusses the manner in which NDB Cluster divides and duplicates data for storage.

A number of concepts central to an understanding of this topic are discussed in the next few paragraphs.

Data node. An ndbd or ndbmtd process, which stores one or more fragment replicas—that is, copies of

the partitions (discussed later in this section) assigned to the node group of which the node is a member.

Each data node should be located on a separate computer. While it is also possible to host multiple data

node processes on a single computer, such a configuration is not usually recommended.

It is common for the terms “node” and “data node” to be used interchangeably when referring to an ndbd or

ndbmtd process; where mentioned, management nodes (ndb_mgmd processes) and SQL nodes (mysqld

processes) are specified as such in this discussion.

Node group. A node group consists of one or more nodes, and stores partitions, or sets of fragment

replicas (see next item).

The number of node groups in an NDB Cluster is not directly configurable; it is a function of the number of

data nodes and of the number of fragment replicas (NoOfReplicas configuration parameter), as shown

here:

[# of node groups] = [# of data nodes] / NoOfReplicas

Thus, an NDB Cluster with 4 data nodes has 4 node groups if NoOfReplicas is set to 1 in the

config.ini file, 2 node groups if NoOfReplicas is set to 2, and 1 node group if NoOfReplicas is set

to 4. Fragment replicas are discussed later in this section; for more information about NoOfReplicas, see

Section 4.3.6, “Defining NDB Cluster Data Nodes”.

Note

All node groups in an NDB Cluster must have the same number of data nodes.
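
The node group formula can be checked with a few lines of Python. This is a minimal sketch: the function name is an assumption made for this example, and the divisibility check simply reflects the requirement that all node groups have the same number of data nodes.

```python
def node_group_count(data_nodes, no_of_replicas):
    """Compute [# of node groups] = [# of data nodes] / NoOfReplicas."""
    if data_nodes < 1 or no_of_replicas < 1:
        raise ValueError("both values must be at least 1")
    if data_nodes % no_of_replicas != 0:
        # Every node group must contain the same number of data nodes.
        raise ValueError("data nodes must be a multiple of NoOfReplicas")
    return data_nodes // no_of_replicas


# The three cases given in the text for a cluster with 4 data nodes.
for replicas in (1, 2, 4):
    print(replicas, node_group_count(4, replicas))
```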

You can add new node groups (and thus new data nodes) online, to a running NDB Cluster; see

Section 6.7, “Adding NDB Cluster Data Nodes Online”, for more information.

Partition. This is a portion of the data stored by the cluster. Each node is responsible for keeping at

least one copy of any partitions assigned to it (that is, at least one fragment replica) available to the cluster.

The number of partitions used by default by NDB Cluster depends on the number of data nodes and the

number of LDM threads in use by the data nodes, as shown here:

[# of partitions] = [# of data nodes] * [# of LDM threads]

When using data nodes running ndbmtd, the number of LDM threads is controlled by the setting for

MaxNoOfExecutionThreads. When using ndbd there is a single LDM thread, which means that there

are as many cluster partitions as nodes participating in the cluster. This is also the case when using

ndbmtd with MaxNoOfExecutionThreads set to 3 or less. (You should be aware that the number of

LDM threads increases with the value of this parameter, but not in a strictly linear fashion, and that there

are additional constraints on setting it; see the description of MaxNoOfExecutionThreads for more

information.)
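
As a worked example of the default partition formula, the following Python sketch multiplies the number of data nodes by the number of LDM threads. The function name is an assumption made for this example. The number of LDM threads is passed in directly, since the mapping from MaxNoOfExecutionThreads to LDM threads is not reproduced here.

```python
def default_partition_count(data_nodes, ldm_threads=1):
    """Compute [# of partitions] = [# of data nodes] * [# of LDM threads].

    The default of one LDM thread corresponds to data nodes running ndbd.
    """
    if data_nodes < 1 or ldm_threads < 1:
        raise ValueError("both values must be at least 1")
    return data_nodes * ldm_threads


# Four ndbd data nodes: one partition per node.
print(default_partition_count(4))
# Four data nodes with a hypothetical 4 LDM threads each.
print(default_partition_count(4, ldm_threads=4))
```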

NDB and user-defined partitioning. NDB Cluster normally partitions NDBCLUSTER tables

automatically. However, it is also possible to employ user-defined partitioning with NDBCLUSTER tables.

This is subject to the following limitations:

1. Only the KEY and LINEAR KEY partitioning schemes are supported in production with NDB tables.

2. The maximum number of partitions that may be defined explicitly for any NDB table is 8 * [number

of LDM threads] * [number of node groups], the number of node groups in an NDB Cluster

being determined as discussed previously in this section. When running ndbd for data node processes,

setting the number of LDM threads has no effect (since ThreadConfig applies only to ndbmtd);

in such cases, this value can be treated as though it were equal to 1 for purposes of performing this

calculation.

See Section 5.3, “ndbmtd — The NDB Cluster Data Node Daemon (Multi-Threaded)”, for more

information.

For more information relating to NDB Cluster and user-defined partitioning, see Section 2.7, “Known

Limitations of NDB Cluster”, and Partitioning Limitations Relating to Storage Engines.
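
The limit on explicitly defined partitions can be evaluated in the same way. The following Python sketch is illustrative only, and both function names are assumptions made for this example. For data nodes running ndbd, the number of LDM threads is taken as 1, as described above.

```python
def max_explicit_partitions(node_groups, ldm_threads=1):
    """Compute 8 * [number of LDM threads] * [number of node groups]."""
    if node_groups < 1 or ldm_threads < 1:
        raise ValueError("both values must be at least 1")
    return 8 * ldm_threads * node_groups


def partition_count_allowed(requested, node_groups, ldm_threads=1):
    """Return True if a requested partition count is within the limit."""
    return 1 <= requested <= max_explicit_partitions(node_groups, ldm_threads)


# Two node groups of ndbd data nodes allow at most 16 explicit partitions.
print(max_explicit_partitions(2))
print(partition_count_allowed(20, node_groups=2))
```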

Fragment replica. This is a copy of a cluster partition. Each node in a node group stores a fragment

replica. Also sometimes known as a partition replica. The number of fragment replicas is equal to the

number of nodes per node group.

A fragment replica belongs entirely to a single node; a node can (and usually does) store several fragment

replicas.

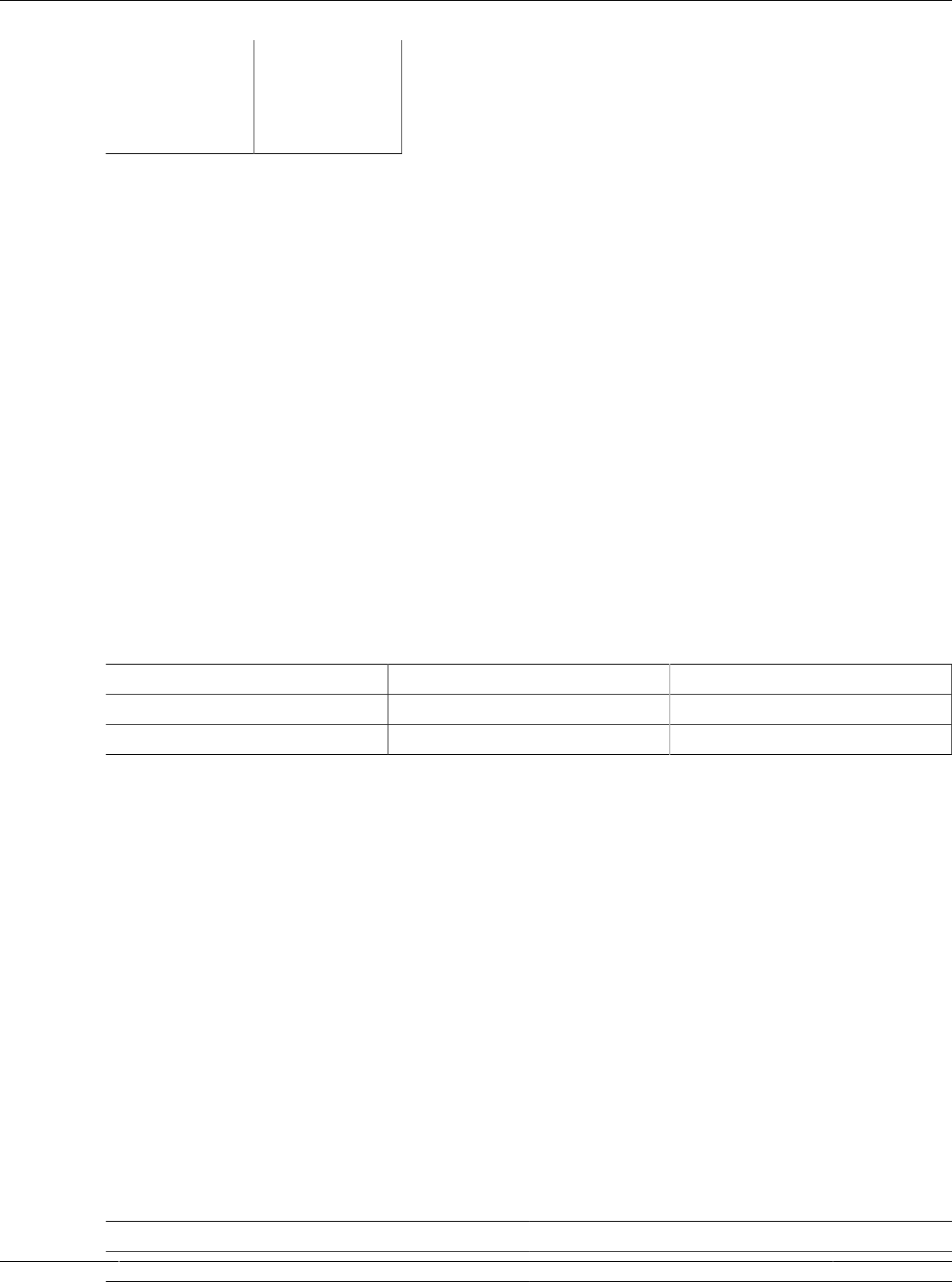

The following diagram illustrates an NDB Cluster with four data nodes running ndbd, arranged in two node

groups of two nodes each; nodes 1 and 2 belong to node group 0, and nodes 3 and 4 belong to node

group 1.

Note

Only data nodes are shown here; although a working NDB Cluster requires an

ndb_mgmd process for cluster management and at least one SQL node to access

the data stored by the cluster, these have been omitted from the figure for clarity.

Figure 2.2 NDB Cluster with Two Node Groups

The data stored by the cluster is divided into four partitions, numbered 0, 1, 2, and 3. Each partition is

stored—in multiple copies—on the same node group. Partitions are stored on alternate node groups as

follows:

• Partition 0 is stored on node group 0; a primary fragment replica (primary copy) is stored on node 1, and

a backup fragment replica (backup copy of the partition) is stored on node 2.

• Partition 1 is stored on the other node group (node group 1); this partition's primary fragment replica is

on node 3, and its backup fragment replica is on node 4.

• Partition 2 is stored on node group 0. However, the placing of its two fragment replicas is reversed from

that of Partition 0; for Partition 2, the primary fragment replica is stored on node 2, and the backup on

node 1.

• Partition 3 is stored on node group 1, and the placement of its two fragment replicas is reversed from

that of Partition 1. That is, its primary fragment replica is located on node 4, with the backup on node 3.

What this means regarding the continued operation of an NDB Cluster is this: so long as each node group

participating in the cluster has at least one node operating, the cluster has a complete copy of all data and

remains viable. This is illustrated in the next diagram.

Figure 2.3 Nodes Required for a 2x2 NDB Cluster

In this example, the cluster consists of two node groups each consisting of two data nodes. Each data

node is running an instance of ndbd. Any combination of at least one node from node group 0 and at least

one node from node group 1 is sufficient to keep the cluster “alive”. However, if both nodes from a single

node group fail, the combination consisting of the remaining two nodes in the other node group is not

sufficient. In this situation, the cluster has lost an entire partition and so can no longer provide access to a

complete set of all NDB Cluster data.
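
The placement and viability rule described in this example can be sketched in Python. This is an illustrative model only, not NDB code: the PLACEMENT table simply transcribes the four partitions listed above, and the names NODE_GROUPS, PLACEMENT, cluster_is_viable, and lost_partitions are hypothetical.

```python
# Illustrative model of the 2x2 example; not part of NDB Cluster itself.

# Node group ID -> data node IDs, as in the example.
NODE_GROUPS = {0: (1, 2), 1: (3, 4)}

# Partition -> (node group, primary node, backup node), transcribed from the text.
PLACEMENT = {
    0: (0, 1, 2),
    1: (1, 3, 4),
    2: (0, 2, 1),
    3: (1, 4, 3),
}


def cluster_is_viable(alive_nodes):
    """Return True if every node group still has at least one live node."""
    alive = set(alive_nodes)
    return all(alive.intersection(nodes) for nodes in NODE_GROUPS.values())


def lost_partitions(alive_nodes):
    """Return the partitions that have no surviving fragment replica."""
    alive = set(alive_nodes)
    return sorted(
        partition
        for partition, (_group, primary, backup) in PLACEMENT.items()
        if primary not in alive and backup not in alive
    )
```

Under this model, cluster_is_viable({2, 3}) holds because each node group keeps one node, whereas with only nodes 3 and 4 alive the check fails and lost_partitions({3, 4}) reports the partitions held by node group 0.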

The maximum number of node groups supported for a single NDB Cluster instance is 48.

2.3 NDB Cluster Hardware, Software, and Networking Requirements

One of the strengths of NDB Cluster is that it can be run on commodity hardware and has no unusual

requirements in this regard, other than for large amounts of RAM, because all live data storage

is done in memory. (It is possible to reduce this requirement using Disk Data tables—see Section 6.11,

“NDB Cluster Disk Data Tables”, for more information about these.) Naturally, multiple and faster CPUs

can enhance performance. Memory requirements for other NDB Cluster processes are relatively small.

The software requirements for NDB Cluster are also modest. Host operating systems do not require any

unusual modules, services, applications, or configuration to support NDB Cluster. For supported operating

systems, a standard installation should be sufficient. The MySQL software requirements are simple: all that

is needed is a production release of NDB Cluster. It is not strictly necessary to compile MySQL yourself

merely to be able to use NDB Cluster. We assume that you are using the binaries appropriate to your

platform, available from the NDB Cluster software downloads page at https://dev.mysql.com/downloads/

cluster/.

For communication between nodes, NDB Cluster supports TCP/IP networking in any standard topology,

and the minimum expected for each host is a standard 100 Mbps Ethernet card, plus a switch, hub, or

router to provide network connectivity for the cluster as a whole. We strongly recommend that an NDB

Cluster be run on its own subnet which is not shared with machines not forming part of the cluster for the

following reasons:

• Security. Communications between NDB Cluster nodes are not encrypted or shielded in any way.

The only means of protecting transmissions within an NDB Cluster is to run your NDB Cluster on a

protected network. If you intend to use NDB Cluster for Web applications, the cluster should definitely

reside behind your firewall and not in your network's De-Militarized Zone (DMZ) or elsewhere.

See Section 6.20.1, “NDB Cluster Security and Networking Issues”, for more information.

• Efficiency. Setting up an NDB Cluster on a private or protected network enables the cluster to make

exclusive use of bandwidth between cluster hosts. Using a separate switch for your NDB Cluster not only

helps protect against unauthorized access to NDB Cluster data, it also ensures that NDB Cluster nodes

are shielded from interference caused by transmissions between other computers on the network. For

enhanced reliability, you can use dual switches and dual cards to remove the network as a single point

of failure; many device drivers support failover for such communication links.

Network communication and latency. NDB Cluster requires communication between data nodes

and API nodes (including SQL nodes), as well as between data nodes and other data nodes, to execute

queries and updates. Communication latency between these processes can directly affect the observed

performance and latency of user queries. In addition, to maintain consistency and service despite the

silent failure of nodes, NDB Cluster uses heartbeating and timeout mechanisms which treat an extended

loss of communication from a node as node failure. This can lead to reduced redundancy. Recall that, to

maintain data consistency, an NDB Cluster shuts down when the last node in a node group fails. Thus, to

avoid increasing the risk of a forced shutdown, breaks in communication between nodes should be avoided

wherever possible.

The failure of a data or API node results in the abort of all uncommitted transactions involving the failed

node. Data node recovery requires synchronization of the failed node's data from a surviving data node,

and re-establishment of disk-based redo and checkpoint logs, before the data node returns to service. This

recovery can take some time, during which the Cluster operates with reduced redundancy.

Heartbeating relies on timely generation of heartbeat signals by all nodes. This may not be possible if the

node is overloaded, has insufficient machine CPU due to sharing with other programs, or is experiencing

delays due to swapping. If heartbeat generation is sufficiently delayed, other nodes treat the node that is

slow to respond as failed.
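
The effect of delayed heartbeats can be illustrated with a minimal Python sketch. It is not NDB's implementation: the function name failed_nodes and the missed_limit parameter are hypothetical, and the number of missed intervals that NDB actually tolerates should be taken from the description of the relevant heartbeat parameter rather than from this example.

```python
# Minimal sketch of heartbeat-based failure detection; not NDB's implementation.

def failed_nodes(last_heartbeat_ms, now_ms, interval_ms, missed_limit):
    """Return IDs of nodes that have been silent for too long.

    last_heartbeat_ms: dict mapping node ID -> arrival time of its last heartbeat.
    interval_ms: expected time between heartbeats, in milliseconds.
    missed_limit: consecutive missed intervals tolerated (an assumed value).
    """
    deadline_ms = interval_ms * missed_limit
    return sorted(
        node
        for node, seen_ms in last_heartbeat_ms.items()
        if now_ms - seen_ms >= deadline_ms
    )
```

For example, failed_nodes({1: 0, 2: 5000}, now_ms=6000, interval_ms=1500, missed_limit=4) flags node 1 only: a node that is merely stalled by swapping or CPU starvation is indistinguishable here from one that has crashed.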

This treatment of a slow node as a failed one may or may not be desirable,

depending on the impact of the node's slowed operation on the rest of the cluster. When setting timeout

values such as HeartbeatIntervalDbDb and HeartbeatIntervalDbApi for NDB Cluster, care

must be taken to achieve quick detection, failover, and return to service, while avoiding potentially

expensive false positives.

Where communication latencies between data nodes are expected to be higher than would be expected

in a LAN environment (on the order of 100 µs), timeout parameters must be increased to ensure that any

allowed latency periods are well within configured timeouts. Increasing timeouts in this way has

a corresponding effect on the worst-case time to detect failure and therefore time to service recovery.
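
The trade-off between tolerated latency and failure detection time can be made concrete with a rough Python calculation. This is a sketch under stated assumptions, not a sizing rule from NDB: detection is modelled as interval times missed_limit, and both missed_limit and safety_factor (how far inside the detection window the worst allowed latency must fall) are hypothetical parameters chosen for illustration.

```python
import math


def detection_window_ms(interval_ms, missed_limit):
    """Worst-case time to notice a silent node under the assumed model."""
    return interval_ms * missed_limit


def min_heartbeat_interval_ms(max_latency_ms, missed_limit, safety_factor=2.0):
    """Smallest interval whose detection window is at least
    safety_factor times the worst allowed latency (assumed policy)."""
    return math.ceil(max_latency_ms * safety_factor / missed_limit)
```

With missed_limit set to 4, a worst allowed latency of 3000 ms yields an interval of 1500 ms and a 6000 ms detection window; raising the allowed latency to 10000 ms pushes the interval to 5000 ms and the window to 20000 ms, which shows how tolerating more latency delays failure detection and recovery.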

LAN environments can typically be configured to provide stable low latency as well as

redundancy with fast failover. Individual link failures can be recovered from with minimal and controlled

latency visible at the TCP level (where NDB Cluster normally operates). WAN environments may offer a

range of latencies, as well as redundancy with slower failover times. Individual link failures may require

route changes to propagate before end-to-end connectivity is restored. At the TCP level this can appear as

large latencies on individual channels. The worst-case observed TCP latency in these scenarios is related

to the worst-case time for the IP layer to reroute around the failures.

2.4 What is New in MySQL NDB Cluster 8.0

The following sections describe changes in the implementation of MySQL NDB Cluster in NDB Cluster

8.0 through 8.0.38, as compared to earlier release series. NDB Cluster 8.0 is available as a General

Availability (GA) release, beginning with NDB 8.0.19. NDB Cluster 7.6 and 7.5 are previous GA releases

still supported in production; for information about NDB Cluster 7.6, see What is New in NDB Cluster 7.6.

For similar information about NDB Cluster 7.5, see What is New in NDB Cluster 7.5. NDB Cluster 7.4 and

7.3 were previous GA releases which have reached their end of life, and which are no longer supported or

maintained. We recommend that new deployments for production use MySQL NDB Cluster 8.0.

What is New in NDB Cluster 8.0

Major changes and new features in NDB Cluster 8.0 which are likely to be of interest are shown in the

following list:

• Compatibility enhancements. The following changes reduce longstanding nonessential differences

in NDB behavior as compared to that of other MySQL storage engines:

• Development in parallel with MySQL server. Beginning with this release, MySQL NDB Cluster

is being developed in parallel with the standard MySQL 8.0 server under a new unified release model

with the following features:

• NDB 8.0 is developed in, built from, and released with the MySQL 8.0 source code tree.

• The numbering scheme for NDB Cluster 8.0 releases follows the scheme for MySQL 8.0.

• Building the source with NDB support appends -cluster to the version string returned by mysql -V,

as shown here:

$> mysql -V

mysql Ver 8.0.38-cluster for Linux on x86_64 (Source distribution)

NDB binaries continue to display both the MySQL Server version and the NDB engine version, like

this:

$> ndb_mgm -V

MySQL distrib mysql-8.0.38 ndb-8.0.38, for Linux (x86_64)

In MySQL Cluster NDB 8.0, these two version numbers are always the same.

To build the MySQL source with NDB Cluster support, use the CMake option -DWITH_NDB (NDB

8.0.31 and later; for earlier releases, use -DWITH_NDBCLUSTER instead).

• Platform support notes. NDB 8.0 makes the following changes in platform support:

• NDBCLUSTER no longer supports 32-bit platforms. Beginning with NDB 8.0.21, the NDB build

process checks the system architecture and aborts if it is not a 64-bit platform.

• It is now possible to build NDB from source for 64-bit ARM CPUs. Currently, this support is source-

only, and we do not provide any precompiled binaries for this platform.

• Database and table names. NDB 8.0 removes the previous 63-byte limit on identifiers for

databases and tables. These identifiers can now use up to 64 bytes, as for such objects using other

MySQL storage engines. See Section 2.7.11, “Previous NDB Cluster Issues Resolved in NDB Cluster

8.0”.

• Generated names for foreign keys. NDB now uses the pattern tbl_name_fk_N for naming

internally generated foreign keys. This is similar to the pattern used by InnoDB.

• Schema and metadata distribution and synchronization. NDB 8.0 makes use of the MySQL

data dictionary to distribute schema information to SQL nodes joining a cluster and to synchronize new

schema changes between existing SQL nodes. The following list describes individual enhancements

relating to this integration work:

• Schema distribution enhancements. The NDB schema distribution coordinator, which handles

schema operations and tracks their progress, has been extended in NDB 8.0 to ensure that resources

used during a schema operation are released at its conclusion. Previously, some of this work was

done by the schema distribution client; this has been changed because the client did not

always have all needed state information, which could lead to resource leaks when the client decided

to abandon the schema operation prior to completion and without informing the coordinator.

To help fix this issue, schema operation timeout detection has been moved from the schema

distribution client to the coordinator, providing the coordinator with an opportunity to clean up

any resources used during the schema operation. The coordinator now checks ongoing schema

operations for timeout at regular intervals, and marks participants that have not yet completed a given

schema operation as failed when detecting timeout. It also provides suitable warnings whenever

a schema operation timeout occurs. (It should be noted that, after such a timeout is detected, the

schema operation itself continues.) Additional reporting is done by printing a list of active schema