Springer Texts in Statistics

PeterK.Dunn· GordonK.Smyth

Generalized

Linear Models

With Examples

in R

Springer Texts in Statistics

Series Editors

R. DeVeaux

S.E. Fienberg

I. Olkin

Peter K. Dunn • Gordon K. Smyth

Generalized Linear Models

With Examples in R

123

Peter K. Dunn

Faculty of Science, Health, Education

and Engineering

School of Health of Sport Science

University of the Sunshine Coast

QLD, Australia

Gordon K. Smyth

Bioinformatics Division

Walter and Eliza Hall Institute

of Medical Research

Parkville, VIC, Australia

ISSN 1431-875X ISSN 2197-4136 (electronic)

Springer Texts in Statistics

ISBN 978-1-4419-0117-0 ISBN 978-1-4419-0118-7 (eBook)

https://doi.org/10.1007/978-1-4419-0118-7

Library of Congress Control Number: 2018954737

© Springer Science+Business Media, LLC, part of Springer Nature 2018

This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of

the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation,

broadcasting, reproduction on microfilms or in any other physical way, and transmission or information

storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology

now known or hereafter developed.

The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication

does not imply, even in the absence of a specific statement, that such names are exempt from the relevant

protective laws and regulations and therefore free for general use.

The publisher, the authors, and the editors are safe to assume that the advice and information in this book

are believed to be true and accurate at the date of publication. Neither the publisher nor the authors or

the editors give a warranty, express or implied, with respect to the material contained herein or for any

errors or omissions that may have been made. The publisher remains neutral with regard to jurisdictional

claims in published maps and institutional affiliations.

This Springer imprint is published by the registered company Springer Science+Business Media, LLC

part of Springer Nature.

The registered company address is: 233 Spring Street, New York, NY 10013, U.S.A.

To my wife Alison; our children Jessica,

Emily, Jemima, Samuel, Josiah and

Elijah; and my parents: Thank you for

your love and support and for giving so

much so I could get this far. PKD

To those who taught me about glms 40

years ago and to all the students who, in

the years since, have patiently listened to

me on the subject, given feedback and

generally made it rewarding to be a

teacher. GKS

Preface

A sophisticated analysis is wasted if the results cannot be

communicated effectively to the client.

Reese [4, p. 201]

Our purpose in writing this book is to combine a good applied introduction to

generalized linear models (glms) with a thorough explanation of the theory

that is understandable from an elementary point of view.

We assume students to have basic knowledge of statistics and calculus. A

working familiarity with probability, probability distributions and hypothe-

sis testing is assumed, but a self-contained introduction to all other topics is

given in the book including linear regression. The early chapters of the book

give an introduction to linear regression and analysis of variance suitable

for a second course in statistics. Students with more advanced backgrounds,

including matrix algebra, will benefit from optional sections that give a de-

tailed introduction to the theory and algorithms. The book can therefore be

read at multiple levels. It can be read by students with only a first course in

statistics, but at the same time, it contains advanced material suitable for

graduate students and professionals.

The book should be appropriate for graduate students in statistics at either

the masters or PhD levels. It should be also be appropriate for advanced

undergraduate students taking majors in statistics in Britain or Australia.

Students in psychology, biometrics and related disciplines will also benefit.

In general, it is appropriate for anyone wanting a practical working knowledge

of glms with a sound theoretical background.

r is a powerful and freely available environment for statistical computing

and graphics that has become widely adopted around the world. This book

includes a self-contained introduction to R (Appendix A), and use of r is

integrated into the text throughout the book. This includes comprehensive

r code examples and complete code for most data analyses and case studies.

Detailed use of relevant r functions is described in each chapter.

A practical working knowledge of good applied statistical practice is de-

veloped through the use of real data sets and numerous case studies. This

book makes almost exclusive use of real data. These data sets are collected in

the r package GLMsData [1] (see Appendix A for instructions for obtaining

vii

viii Preface

this r package), which has been prepared especially for use with this book

and which contains 97 data sets. Each example in the text is cross-referenced

with the relevant data set so that readers can load the relevant data to follow

the analysis in their own r session. Complete reproducible r code is provided

with the text for most examples.

The development of the theoretical background sometimes requires more

advanced mathematical techniques, including the use of matrix algebra. How-

ever, knowledge of these techniques is not required to read this book. We have

ensured that readers without this knowledge can still follow the theoretical

development, by flagging the corresponding sections with a star * in the*

margin. Readers unfamiliar with these techniques may skip these sections

and problems without loss of continuity. However, those with the necessary

knowledge can gain more insight by reading the optional starred sections.

A set of problems is given at the end of each chapter and at the end of the

book. The balance between theory and practice is evident in the list of prob-

lems, which vary in difficulty and purpose. These problems cover many areas

of application and test understanding, theory, application, interpretation and

the ability to read publications that use glms.

This book begins with an introduction to multiple linear regression. In

abookaboutglms, at least three reasons exist for beginning with a short

discussion of multiple linear regression:

• Linear regression is familiar. Starting with regression consolidates this

material and establishes common notation, terminology and knowledge

for all readers. Notation and new terms are best introduced in a familiar

context.

• Linear regression is foundational. Many concepts and ideas from linear

regression are used as approximations in glms. A firm foundation in

linear regression ensures a better understanding of glms.

• Linear regression is motivational. Glmsoftenimprove linear regression.

Studying linear regression reveals its weaknesses and shows how glms

can often overcome most of these, motivating the need for glms.

Connections between linear regression and glms are emphasized throughout

this book.

This book contains a number of important but advanced topics and tools

that have not typically been included in introductions to glms before. These

include Tweedie family distributions with power variance functions, saddle-

point approximations, likelihood score tests, modified profile likelihood and

randomized quantile residuals, as well as regression splines and orthogonal

polynomials. Particular features are the use of saddlepoint approximations

to clarify the asymptotical distribution of residual deviances from glmsand

an explanation of the relationship between score tests and Pearson statis-

tics. Practical and specific guidelines are developed for the use of asymptotic

approximations.

Preface ix

Throughout this book, r functions are shown in typewriter font fol-

lowed by parentheses; for example, glm(). Operators, data frames and vari-

ables in r are shown in typewriter font; for example, Smoke. r packages

are shown in bold and sans serif font; for example, GLMsData.

We thank those who have contributed to the writing of this book and

especially students who have contributed to earlier versions of this text. We

particularly thank Janette Benson, Alison Howes and Martine Maron for the

permission to use data.

This book was prepared using L

A

T

E

Xandr version 3.4.3 [3], integrated

using Sweave [2].

Sippy Downs, QLD, Australia Peter K. Dunn

Parkville, VIC, Australia Gordon K. Smyth

December 2017

References

[1] Dunn, P.K., Smyth, G.K.: GLMsData: Generalized linear model data sets

(2017). URL https://CRAN.R-project.org/package=GLMsData. R pack-

age version 1.0.0

[2] Leisch, F.: Dynamic generation of statistical reports using literate data

analysis. In: W. Härdle, B. Rönz (eds.) Compstat 2002—Proceedings

in Computational Statistics, pp. 575–580. Physika Verlag, Heidelberg,

Germany (2002)

[3] R Development Core Team: R: A Language and Environment for Statisti-

cal Computing. R Foundation for Statistical Computing, Vienna, Austria

(2017). URL https://www.R-project.org

[4] Reese, R.A.: Data analysis: The need for models? The Statistician 35(2),

199–206 (1986). Special Issue: Statistical Modelling

Contents

1 Statistical Models ......................................... 1

1.1 Introduction and Overview .............................. 1

1.2 Conventions for Describing Data ......................... 1

1.3 Plotting Data ......................................... 5

1.4 Coding for Factors ..................................... 10

1.5 Statistical Models Describe Both Random and Systematic

Features of Data ....................................... 11

1.6 Regression Models ..................................... 12

1.7 Interpreting Regression Models .......................... 16

1.8 All Models Are Wrong, but Some Are Useful .............. 17

1.9 The Purpose of a Statistical Model Affects How It Is

Developed ............................................ 18

1.10 Accuracy vs Parsimony ................................. 19

1.11 Experiments vs Observational Studies: Causality

vs Association ......................................... 21

1.12 Data Collection and Generalizability ..................... 22

1.13 Using R for Statistical Modelling ........................ 23

1.14 Summary ............................................. 24

Problems ................................................... 25

References .................................................. 29

2 Linear Regression Models ................................. 31

2.1 Introduction and Overview .............................. 31

2.2 Linear Regression Models Defined ........................ 31

2.3 Simple Linear Regression ............................... 35

2.3.1 Least-Squares Estimation ........................ 35

2.3.2 Coefficient Estimates ............................ 36

2.3.3 Estimating the Variance σ

2

...................... 38

2.3.4 Standard Errors of the Coefficients ................ 39

2.3.5 Standard Errors of Fitted Values ................. 39

xi

xii Contents

2.4 Estimation for Multiple Regression ....................... 40

2.4.1 Coefficient Estimates ............................ 40

2.4.2 Estimating the Variance σ

2

...................... 42

2.4.3 Standard Errors ................................ 42

* 2.5 Matrix Formulation of Linear Regression Models ........... 43

* 2.5.1 Matrix Notation ................................ 43

* 2.5.2 Coefficient Estimates ............................ 44

* 2.5.3 Estimating the Variance σ

2

...................... 46

* 2.5.4 Estimating the Variance of

ˆ

β ..................... 47

* 2.5.5 Estimating the Variance of Fitted Values .......... 47

2.6 Fitting Linear Regression Models Using R ................ 48

2.7 Interpreting the Regression Coefficients ................... 52

2.8 Inference for Linear Regression Models: t-Tests ............ 53

2.8.1 Normal Linear Regression Models ................. 53

2.8.2 The Distribution of

ˆ

β

j

........................... 53

2.8.3 Hypothesis Tests for β

j

.......................... 54

2.8.4 Confidence Intervals for β

j

....................... 55

2.8.5 Confidence Intervals for μ ........................ 56

2.9 Analysis of Variance for Regression Models ................ 58

2.10 Comparing Nested Models .............................. 61

2.10.1 Analysis of Variance to Compare Two Nested

Models ........................................ 61

2.10.2 Sequential Analysis of Variance ................... 63

2.10.3 Parallel and Independent Regressions .............. 66

2.10.4 The Marginality Principle ........................ 70

2.11 Choosing Between Non-nested Models: AIC and BIC ....... 70

2.12 Tools to Assist in Model Selection........................ 72

2.12.1 Adding and Dropping Variables................... 72

2.12.2 Automated Methods for Model Selection ........... 73

2.12.3 Objections to Using Stepwise Procedures .......... 76

2.13 Case Study ........................................... 76

2.14 Using R for Fitting Linear Regression Models ............. 79

2.15 Summary ............................................. 82

Problems ................................................... 83

References .................................................. 90

3 Linear Regression Models: Diagnostics

and Model-Building ....................................... 93

3.1 Introduction and Overview .............................. 93

3.2 Assumptions from a Practical Point of View ............... 94

3.2.1 Types of Assumptions ........................... 94

3.2.2 The Linear Predictor ............................ 94

3.2.3 Constant Variance .............................. 94

3.2.4 Independence .................................. 95

3.2.5 Normality ..................................... 96

Contents xiii

3.2.6 Measurement Scales ............................. 96

3.2.7 Approximations and Consequences ................ 96

3.3 Residuals for Normal Linear Regression Models ............ 97

3.4 The Leverages for Linear Regression Models ............... 98

3.4.1 Leverage and Extreme Covariate Values ........... 98

* 3.4.2 The Leverages Using Matrix Algebra .............. 100

3.5 Residual Plots ......................................... 101

3.5.1 Plot Residuals Against x

j

: Linearity .............. 101

3.5.2 Partial Residual Plots ........................... 102

3.5.3 Plot Residuals Against ˆμ: Constant Variance ....... 104

3.5.4 Q–Q Plots and Normality ........................ 105

3.5.5 Lag Plots and Dependence over Time ............. 106

3.6 Outliers and Influential Observations ..................... 108

3.6.1 Introduction ................................... 108

3.6.2 Outliers and Studentized Residuals ............... 109

3.6.3 Influential Observations ......................... 110

3.7 Terminology for Residuals .............................. 115

3.8 Remedies: Fixing Identified Problems ..................... 115

3.9 Transforming the Response ............................. 116

3.9.1 Symmetry, Constraints and the Ladder of Powers ... 116

3.9.2 Variance-Stabilizing Transformations .............. 117

3.9.3 Box–Cox Transformations........................ 120

3.10 Simple Transformations of Covariates ..................... 121

3.11 Polynomial Trends ..................................... 127

3.12 Regression Splines ..................................... 131

3.13 Fixing Identified Outliers ............................... 134

3.14 Collinearity ........................................... 135

3.15 Case Studies .......................................... 138

3.15.1 Case Study 1 ................................... 138

3.15.2 Case Study 2 ................................... 141

3.16 Using R for Diagnostic Analysis of Linear Regression

Models ............................................... 146

3.17 Summary ............................................. 147

Problems ................................................... 149

References .................................................. 162

4 Beyond Linear Regression: The Method of Maximum

Likelihood ................................................ 165

4.1 Introduction and Overview .............................. 165

4.2 The Need for Non-normal Regression Models .............. 165

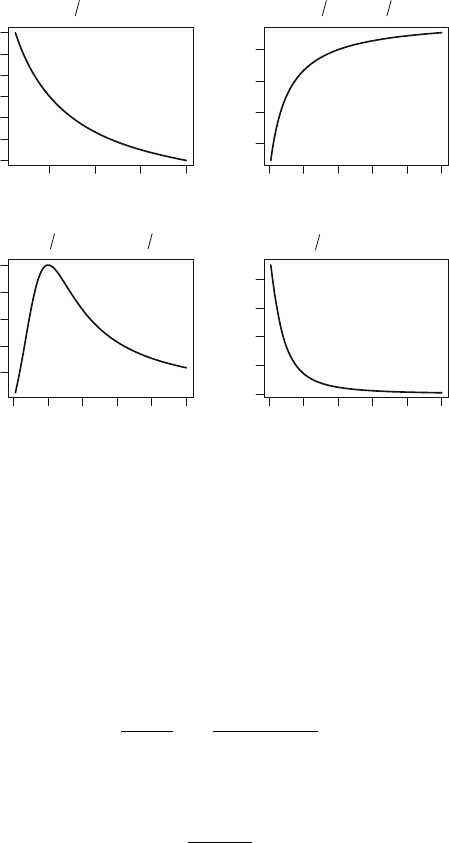

4.2.1 When Linear Models Are a Poor Choice ........... 165

4.2.2 Binary Outcomes and Binomial Counts ............ 166

4.2.3 Unrestricted Counts: Poisson or Negative Binomial . . 168

4.2.4 Continuous Positive Observations ................. 169

4.3 Generalizing the Normal Linear Model ................... 171

xiv Contents

4.4 The Idea of Likelihood Estimation ....................... 172

4.5 Maximum Likelihood for Estimating One Parameter ....... 176

4.5.1 Score Equations ................................ 176

4.5.2 Information: Observed and Expected .............. 177

4.5.3 Standard Errors of Parameters ................... 179

4.6 Maximum Likelihood for More Than One Parameter ....... 180

4.6.1 Score Equations ................................ 180

4.6.2 Information: Observed and Expected .............. 182

4.6.3 Standard Errors of Parameters ................... 183

* 4.7 Maximum Likelihood Using Matrix Algebra ............... 183

* 4.7.1 Notation ....................................... 183

* 4.7.2 Score Equations ................................ 183

* 4.7.3 Information: Observed and Expected .............. 184

* 4.7.4 Standard Errors of Parameters ................... 186

* 4.8 Fisher Scoring for Computing MLEs ..................... 186

4.9 Properties of MLEs .................................... 189

4.9.1 Introduction ................................... 189

4.9.2 Properties of MLEs for One Parameter ............ 189

* 4.9.3 Properties of MLEs for Many Parameters .......... 190

4.10 Hypothesis Testing: Large Sample Asymptotic Results ...... 191

4.10.1 Introduction ................................... 191

* 4.10.2 Global Tests ................................... 194

* 4.10.3 Tests About Subsets of Parameters ............... 196

4.10.4 Tests About One Parameter in a Set of Parameters . 197

4.10.5 Comparing the Three Methods ................... 199

4.11 Confidence Intervals .................................... 200

* 4.11.1 Confidence Regions for More Than One Parameter . . 200

4.11.2 Confidence Intervals for Single Parameters ......... 200

4.12 Comparing Non-nested Models: The AIC and BIC ......... 202

4.13 Summary ............................................. 204

* 4.14 Appendix: R Code to Fit Models to the Quilpie Rainfall

Data ................................................. 204

Problems ................................................... 206

References .................................................. 209

5 Generalized Linear Models: Structure ..................... 211

5.1 Introduction and Overview .............................. 211

5.2 The Two Components of Generalized Linear Models ........ 211

5.3 The Random Component: Exponential Dispersion Models . . . 212

5.3.1 Examples of EDMs ............................. 212

5.3.2 Definition of EDMs ............................. 212

5.3.3 Generating Functions ........................... 214

5.3.4 The Moment Generating and Cumulant Functions

for EDMs ...................................... 215

5.3.5 The Mean and Variance of an EDM ............... 216

Contents xv

5.3.6 The Variance Function .......................... 217

5.4 EDMs in Dispersion Model Form ........................ 218

5.4.1 The Unit Deviance and the Dispersion Model Form ... 218

5.4.2 The Saddlepoint Approximation .................. 223

5.4.3 The Distribution of the Unit Deviance ............. 224

5.4.4 Accuracy of the Saddlepoint Approximation ........ 225

5.4.5 Accuracy of the χ

2

1

Distribution for the Unit

Deviance ...................................... 226

5.5 The Systematic Component ............................. 229

5.5.1 Link Function .................................. 229

5.5.2 Offsets ........................................ 229

5.6 Generalized Linear Models Defined ....................... 230

5.7 The Total Deviance .................................... 231

5.8 Regression Transformations Approximate GLMs ........... 232

5.9 Summary ............................................. 234

Problems ................................................... 235

References .................................................. 240

6 Generalized Linear Models: Estimation ................... 243

6.1 Introduction and Overview .............................. 243

6.2 Likelihood Calculations for β ............................ 243

6.2.1 Differentiating the Probability Function ........... 243

6.2.2 Score Equations and Information for β ............ 244

6.3 Computing Estimates of β .............................. 245

6.4 The Residual Deviance ................................. 248

6.5 Standard Errors for

ˆ

β .................................. 250

* 6.6 Estimation of β: Matrix Formulation ..................... 250

6.7 Estimation of GLMs Is Locally Like Linear Regression ...... 252

6.8 Estimating φ .......................................... 252

6.8.1 Introduction ................................... 252

6.8.2 The Maximum Likelihood Estimator of φ .......... 253

6.8.3 Modified Profile Log-Likelihood Estimator of φ ..... 253

6.8.4 Mean Deviance Estimator of φ ................... 254

6.8.5 Pearson Estimator of φ .......................... 255

6.8.6 Which Estimator of φ Is Best? ................... 255

6.9 Using R to Fit GLMs .................................. 257

6.10 Summary ............................................. 259

Problems ................................................... 261

References .................................................. 262

7 Generalized Linear Models: Inference ..................... 265

7.1 Introduction and Overview .............................. 265

7.2 Inference for Coefficients When φ Is Known ............... 265

7.2.1 Wald Tests for Single Regression Coefficients ....... 265

7.2.2 Confidence Intervals for Individual Coefficients ..... 266

xvi Contents

7.2.3 Confidence Intervals for μ ........................ 267

7.2.4 Likelihood Ratio Tests to Compare Nested Models:

χ

2

Tests ....................................... 269

7.2.5 Analysis of Deviance Tables to Compare Nested

Models ........................................ 270

7.2.6 Score Tests .................................... 271

* 7.2.7 Score Tests Using Matrices ....................... 272

7.3 Large Sample Asymptotics .............................. 273

7.4 Goodness-of-Fit Tests with φ Known ..................... 274

7.4.1 The Idea of Goodness-of-Fit ...................... 274

7.4.2 Deviance Goodness-of-Fit Test ................... 275

7.4.3 Pearson Goodness-of-Fit Test .................... 275

7.5 Small Dispersion Asymptotics ........................... 276

7.6 Inference for Coefficients When φ Is Unknown ............. 278

7.6.1 Wald Tests for Single Regression Coefficients ....... 278

7.6.2 Confidence Intervals for Individual Coefficients ..... 280

* 7.6.3 Confidence Intervals for μ ........................ 281

7.6.4 Likelihood Ratio Tests to Compare Nested Models:

F -Tests ........................................ 282

7.6.5 Analysis of Deviance Tables to Compare Nested

Models ........................................ 284

7.6.6 Score Tests .................................... 286

7.7 Comparing Wald, Score and Likelihood Ratio Tests ........ 287

7.8 Choosing Between Non-nested GLMs: AIC and BIC ........ 288

7.9 Automated Methods for Model Selection .................. 289

7.10 Using R to Perform Tests ............................... 290

7.11 Summary ............................................. 292

Problems ................................................... 293

References .................................................. 296

8 Generalized Linear Models: Diagnostics ................... 297

8.1 Introduction and Overview .............................. 297

8.2 Assumptions of GLMs .................................. 297

8.3 Residuals for GLMs .................................... 298

8.3.1 Response Residuals Are Insufficient for GLMs ...... 298

8.3.2 Pearson Residuals .............................. 299

8.3.3 Deviance Residuals ............................. 300

8.3.4 Quantile Residuals .............................. 300

8.4 The Leverages in GLMs ................................ 304

8.4.1 Working Leverages .............................. 304

* 8.4.2 The Hat Matrix ................................ 304

8.5 Leverage Standardized Residuals for GLMs ............... 305

8.6 When to Use Which Type of Residual .................... 306

8.7 Checking the Model Assumptions ........................ 306

8.7.1 Introduction ................................... 306

Contents xvii

8.7.2 Independence: Plot Residuals Against Lagged

Residuals ...................................... 307

8.7.3 Plots to Check the Systematic Component ......... 307

8.7.4 Plots to Check the Random Component ........... 311

8.8 Outliers and Influential Observations ..................... 312

8.8.1 Introduction ................................... 312

8.8.2 Outliers and Studentized Residuals ............... 312

8.8.3 Influential Observations ......................... 313

8.9 Remedies: Fixing Identified Problems ..................... 315

8.10 Quasi-Likelihood and Extended Quasi-Likelihood .......... 318

8.11 Collinearity ........................................... 321

8.12 Case Study ........................................... 322

8.13 Using R for Diagnostic Analysis of GLMs ................. 325

8.14 Summary ............................................. 326

Problems ................................................... 327

References .................................................. 330

9 Models for Proportions: Binomial GLMs .................. 333

9.1 Introduction and Overview .............................. 333

9.2 Modelling Proportions .................................. 333

9.3 Link Functions ........................................ 336

9.4 Tolerance Distributions and the Probit Link ............... 338

9.5 Odds, Odds Ratios and the Logit Link .................... 340

9.6 Median Effective Dose, ED50 ............................ 343

9.7 The Complementary Log-Log Link in Assay Analysis ....... 344

9.8 Overdispersion ........................................ 347

9.9 When Wald Tests Fail .................................. 351

9.10 No Goodness-of-Fit for Binary Responses ................. 354

9.11 Case Study ........................................... 354

9.12 Using R to Fit GLMs to Proportion Data ................. 360

9.13 Summary ............................................. 360

Problems ................................................... 361

References .................................................. 367

10 Models for Counts: Poisson and Negative Binomial GLMs 371

10.1 Introduction and Overview .............................. 371

10.2 Summary of Poisson GLMs ............................. 371

10.3 Modelling Rates ....................................... 373

10.4 Contingency Tables: Log-Linear Models ................... 378

10.4.1 Introduction ................................... 378

10.4.2 Two Dimensional Tables: Systematic Component ... 378

10.4.3 Two-Dimensional Tables: Random Components ..... 380

10.4.4 Three-Dimensional Tables ....................... 385

10.4.5 Simpson’s Paradox .............................. 389

10.4.6 Equivalence of Binomial and Poisson GLMs ........ 392

xviii Contents

10.4.7 Higher-Order Tables ............................ 393

10.4.8 Structural Zeros in Contingency Tables ............ 395

10.5 Overdispersion ........................................ 397

10.5.1 Overdispersion for Poisson GLMs ................. 397

10.5.2 Negative Binomial GLMs ........................ 399

10.5.3 Quasi-Poisson Models ........................... 402

10.6 Case Study ........................................... 404

10.7 Using R to Fit GLMs to Count Data ..................... 411

10.8 Summary ............................................. 411

Problems ................................................... 412

References .................................................. 422

11 Positive Continuous Data: Gamma and Inverse Gaussian

GLMs ..................................................... 425

11.1 Introduction and Overview .............................. 425

11.2 Modelling Positive Continuous Data ...................... 425

11.3 The Gamma Distribution ............................... 427

11.4 The Inverse Gaussian Distribution ....................... 431

11.5 Link Functions ........................................ 433

11.6 Estimating the Dispersion Parameter ..................... 436

11.6.1 Estimating φ for the Gamma Distribution ......... 436

11.6.2 Estimating φ for the Inverse Gaussian Distribution . . 439

11.7 Case Studies .......................................... 440

11.7.1 Case Study 1 ................................... 440

11.7.2 Case Study 2 ................................... 442

11.8 Using R to Fit Gamma and Inverse Gaussian GLMS ....... 445

11.9 Summary ............................................. 445

Problems ................................................... 446

References .................................................. 454

12 Tweedie GLMs ............................................ 457

12.1 Introduction and Overview .............................. 457

12.2 The Tweedie EDMs .................................... 457

12.2.1 Introducing Tweedie Distributions ................ 457

12.2.2 The Structure of Tweedie EDMs .................. 460

12.2.3 Tweedie EDMs for Positive Continuous Data ....... 461

12.2.4 Tweedie EDMs for Positive Continuous Data with

Exact Zeros .................................... 463

12.3 Tweedie GLMs ........................................ 464

12.3.1 Introduction ................................... 464

12.3.2 Estimation of the Index Parameter ξ .............. 465

12.3.3 Fitting Tweedie GLMs .......................... 469

12.4 Case Studies .......................................... 473

12.4.1 Case Study 1 ................................... 473

12.4.2 Case Study 2 ................................... 475

Contents xix

12.5 Using R to Fit Tweedie GLMs ........................... 478

12.6 Summary ............................................. 479

Problems ................................................... 480

References .................................................. 488

13 Extra Problems ........................................... 491

13.1 Introduction and Overview .............................. 491

Problems ................................................... 491

References .................................................. 500

Using R for Data Analysis .................................... 503

A.1 Introduction and Overview .............................. 503

A.2 Preparing to Use R .................................... 503

A.2.1 Introduction to R ............................... 503

A.2.2 Important R Websites ........................... 504

A.2.3 Obtaining and Installing R ....................... 504

A.2.4 Downloading and Installing R Packages ............ 504

A.2.5 Using R Packages ............................... 505

A.2.6 The R Packages Used in This Book ............... 506

A.3 Introduction to Using R ................................ 506

A.3.1 Basic Use of R as an Advanced Calculator ......... 506

A.3.2 Quitting R ..................................... 508

A.3.3 Obtaining Help in R ............................ 508

A.3.4 Variable Names in R ............................ 508

A.3.5 Working with Vectors in R ....................... 509

A.3.6 Loading Data into R ............................ 511

A.3.7 Working with Data Frames in R .................. 513

A.3.8 Using Functions in R ............................ 514

A.3.9 Basic Statistical Functions in R ................... 515

A.3.10 Basic Plotting in R ............................. 516

A.3.11 Writing Functions in R .......................... 518

* A.3.12 Matrix Arithmetic in R .......................... 520

References .................................................. 523

The GLMsData package ....................................... 525

References .................................................. 527

Selected Solutions ............................................ 529

Solutions from Chap. 1 ....................................... 529

Solutions from Chap. 2 ....................................... 530

Solutions from Chap. 3 ....................................... 532

Solutions from Chap. 4 ....................................... 534

Solutions from Chap. 5 ....................................... 536

Solutions from Chap. 6 ....................................... 537

Solutions from Chap. 7 ....................................... 537

Solutions from Chap. 8 ....................................... 539

xx Contents

Solutions from Chap. 9 ....................................... 539

Solutions from Chap. 10 ...................................... 541

Solutions from Chap. 11 ...................................... 544

Solutions from Chap. 12 ...................................... 547

Solutions from Chap. 13 ...................................... 548

References .................................................. 550

Index: Data sets .............................................. 551

Index: R commands .......................................... 553

Index: General topics ......................................... 557

Chapter 1

Statistical Models

...all models are approximations. Essentially, all models

are wrong, but some are useful. However, the approximate

nature of the model must always be borne in mind.

Box and Draper [2, p. 424]

1.1 Introduction and Overview

This chapter introduces the concept of a statistical model. One particular

type of statistical model—the generalized linear model—is the focus of this

book, and so we begin with an introduction to statistical models in gen-

eral. This allows us to introduce the necessary language, notation, and other

important issues. We first discuss conventions for describing data mathemati-

cally (Sect. 1.2). We then highlight the importance of plotting data (Sect. 1.3),

and explain how to numerically code non-numerical variables (Sect. 1.4)so

that they can be used in mathematical models. We then introduce the two

components of a statistical model used for understanding data (Sect. 1.5):

the systematic and random components. The class of regression models is

then introduced (Sect. 1.6), which includes all models in this book. Model

interpretation is then considered (Sect. 1.7), followed by comparing physical

models and statistical models (Sect. 1.8) to highlight the similarities and dif-

ferences. The purpose of a statistical model is then given (Sect. 1.9), followed

by a description of the two criteria for evaluating statistical models: accuracy

and parsimony (Sect. 1.10). The importance of understanding the limitations

of statistical models is then addressed (Sect. 1.11), including the differences

between observational and experimental data. The generalizability of models

is then discussed (Sect. 1.12). Finally, we make some introductory comments

about using r for statistical modelling (Sect. 1.13).

1.2 Conventions for Describing Data

The concepts in this chapter are best introduced using an example.

Example 1.1. A study of 654 youths in East Boston [10, 18, 20] explored the

relationships between lung capacity (measured by forced expiratory volume,

© Springer Science+Business Media, LLC, part of Springer Nature 2018

P. K. Dunn, G. K. Smyth, Generalized Linear Models with Examples in R,

Springer Texts in Statistics, https://doi.org/10.1007/978-1-4419-0118-7_1

1

21StatisticalModels

or fev, in litres) and smoking status, age, height and gender (Table 1.1). The

data are available in r as the data frame lungcap (short for ‘lung capacity’),

part of the GLMsData package [4]. For information about this package, see

Appendix B; for more information about r, see Appendix A. Assuming the

GLMsData package is installed in r (see Sect. A.2.4), load the GLMsData

package and the lungcap data frame as follows:

> library(GLMsData) # Load the GLMsData package

> data(lungcap) # Make the data set lungcap available for use

> head(lungcap) # Show the first few lines of data

Age FEV Ht Gender Smoke

1 3 1.072 46 F 0

2 4 0.839 48 F 0

3 4 1.102 48 F 0

4 4 1.389 48 F 0

5 4 1.577 49 F 0

6 4 1.418 49 F 0

(The # character and all subsequent text is ignored by r.) The data frame

lungcap consist of five variables: Age, FEV, Ht, Gender and Smoke. Some

of these variables are numerical variables (such as Age), and some are non-

numerical variables (such as Gender). Any one of these can be accessed indi-

vidually using $ as follows:

> head(lungcap$Age) # Show first six values of Age

[1]344444

> tail(lungcap$Gender) # Show last six values of Gender

[1]MMMMMM

Levels: F M

Tabl e 1. 1 The forced expiratory volume (fev) of youths, sampled from East Boston

during the middle to late 1970s. fev is in L; age is in completed years; height is in inches.

The complete data set consists of 654 observations in total (Example 1.1)

Non-smokers Smokers

Females Males Females Males

fev Age Height fev Age Height fev Age Height fev Age Height

1.072 3 46.0 1.404 3 51.5 2.975 10 63.0 1.953 9 58.0

0.839 4 48.0 0.796 4 47.0 3.038 10 65.0 3.498 10 68.0

1.102 4 48.0 1.004 4 48.0 2.387 10 66.0 1.694 11 60.0

1.389 4 48.0 1.789 4 52.0 3.413 10 66.0 3.339 11 68.5

1.577 4 49.0 1.472 5 50.0 3.120 11 61.0 4.637 11 72.0

1.418 4 49.0 2.115 5 50.0 3.169 11 62.5 2.304 12 66.5

1.569 4 50.0 1.359 5 50.5 3.102 11 64.0 3.343 12 68.0

1.196 5 46.5 1.776 5 51.0 3.069 11 65.0 3.751 12 72.0

1.400 5 49.0 1.452 5 51.0 2.953 11 67.0 4.756 13 68.0

1.282 5 49.0 1.930 5 51.0 3.104 11 67.5 4.789 13 69.0

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

1.2 Conventions for Describing Data 3

The length of any one variable is found using length():

> length(lungcap$Age)

[1] 654

The dimension of the data set is:

> dim(lungcap)

[1] 654 5

That is, there are 654 cases and 5 variables.

For these data, the sample size, usually denoted as n,isn = 654. Each

youth’s information is recorded in one row of the r data frame. fev is called

the response variable (or the dependent variable) since fev is assumed to

change in response to (or depends on) the values of the other variables. The

response variable is usually denoted by y. In Example 1.1, y refers to ‘fev

(in litres)’. When necessary, y

i

refers to the ith value of the response. For

example, y

1

=1.072 in Table 1.1. Occasionally it is convenient to refer to all

the observations y

i

together instead of one at a time.

The other variables—age, height, gender and smoking status—can be

called candidate variables, carriers, exogenous variables, independent vari-

ables, input variables, predictors, or regressors. We call these variables ex-

planatory variables in this book. Explanatory variables are traditionally de-

noted by x. In Example 1.1,letx

1

refer to age (in completed years), and x

2

refer to height (in inches). When necessary, the value of, say, x

2

for Observa-

tion i is denoted x

2i

; for example, x

2,1

= 46.

Distinguishing between quantitative and qualitative explanatory variables

is essential. Explanatory variables that are qualitative, like gender, are called

factors. Gender is a factor with two levels: F (female) and M (male). Explana-

tory variables that are quantitative, like height and age, are called covariates.

Often, the key question of interest in an analysis concerns the relationship

between the response variable and one or more explanatory variables, though

other explanatory variables are present and may also influence the response.

Adjusting for the effects of other correlated variables is often necessary, so as

to understand the effect of the variable of key interest. These other variables

are sometimes called extraneous variables. For example, we may be inter-

ested in the relationship between fev (as the response variable) and smok-

ing status (as the explanatory variable), but acknowledge that age, height

and gender may also influence fev. Age, height and gender are extraneous

variables.

41StatisticalModels

Example 1.2. Viewing the structure of a data frame can be informative:

> str(lungcap) # Show the *structure* of the data frame

'data.frame': 654 obs. of 5 variables:

$Age :int 3444444555...

$ FEV : num 1.072 0.839 1.102 1.389 1.577 ...

$ Ht : num 46 48 48 48 49 49 50 46.5 49 49 ...

$ Gender: Factor w/ 2 levels "F","M": 1111111111...

$ Smoke : int 0000000000...

The size of the data frame is given, plus information about each variable: Age

and Smoke consists of integers, FEV and Ht are numerical, while Gender is a

factor with two levels. Each variable can be summarized numerically using

summary():

> summary(lungcap) # Summarize the data

Age FEV Ht Gender

Min. : 3.000 Min. :0.791 Min. :46.00 F:318

1st Qu.: 8.000 1st Qu.:1.981 1st Qu.:57.00 M:336

Median :10.000 Median :2.547 Median :61.50

Mean : 9.931 Mean :2.637 Mean :61.14

3rd Qu.:12.000 3rd Qu.:3.119 3rd Qu.:65.50

Max. :19.000 Max. :5.793 Max. :74.00

Smoke

Min. :0.00000

1st Qu.:0.00000

Median :0.00000

Mean :0.09939

3rd Qu.:0.00000

Max. :1.00000

Notice that quantitative variables are summarized differently to qualitative

variables. FEV, Age and Ht (all quantitative) are summarized with the mini-

mum and maximum values, the first and third quartiles, and the mean and

median. Gender (qualitative) is summarised by giving the number of males

and females in the data. The variable Smoke is qualitative, and numbers are

used to designate the levels of the variable. In this case, r has no way of

determining if the variable is a factor or not, and assumes the variable is

quantitative by default since it consists of numbers. To explicitly tell r that

Smoke is qualitative, use factor():

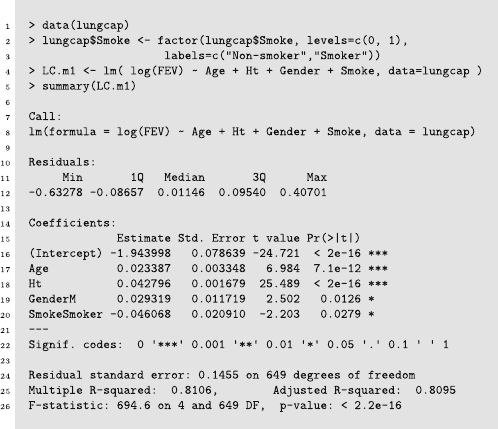

> lungcap$Smoke <- factor(lungcap$Smoke,

levels=c(0, 1), # The values of Smoke

labels=c("Non-smoker","Smoker")) # The labels

> summary(lungcap$Smoke) # Now, summarize the redefined variable Smoke

Non-smoker Smoker

589 65

(The information about the data set, accessed using ?lungcap, explains

that 0 represents non-smokers and 1 represents smokers.) We notice that

non-smokers outnumber smokers.

1.3 Plotting Data 5

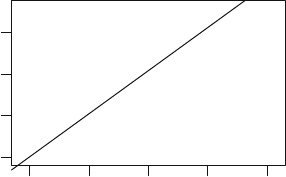

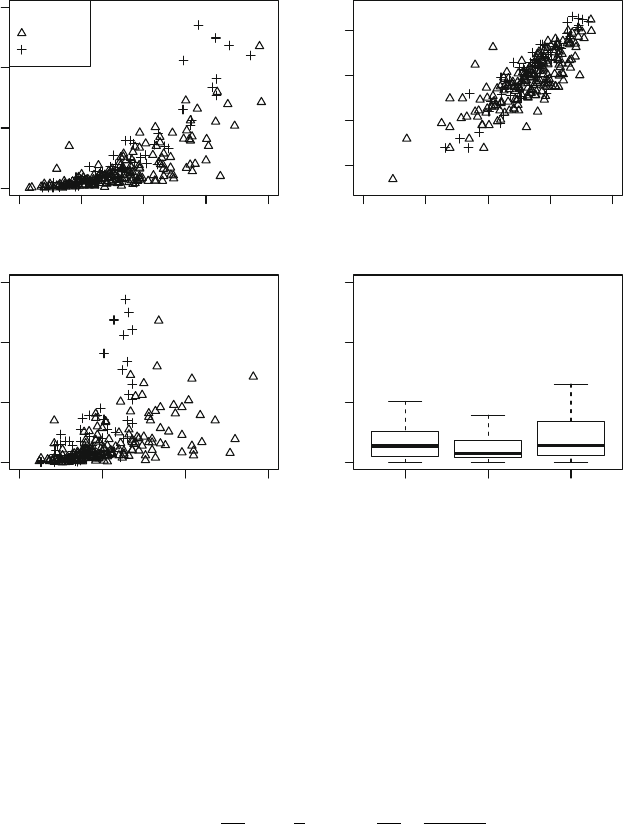

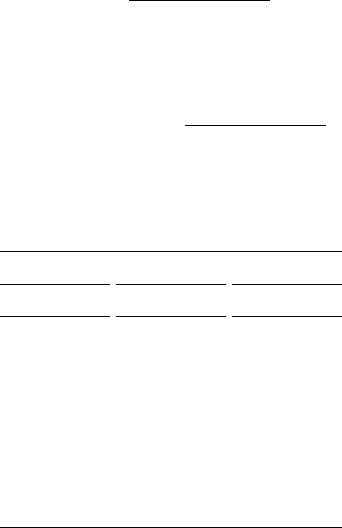

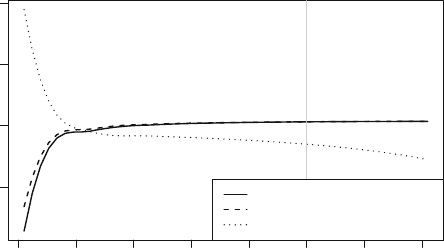

1.3 Plotting Data

Understanding the lung capacity data is difficult because there is so much

data. How can the impact of age, height, gender and smoking status on

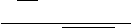

fev be understood? Plots (Fig. 1.1) may reveal many, but probably not all,

important features of the data:

> plot( FEV ~ Age, data=lungcap,

xlab="Age (in years)", # The x-axis label

ylab="FEV (in L)", # The y-axis label

main="FEV vs age", # The main title

xlim=c(0, 20), # Explicitly set x-axis limits

ylim=c(0, 6), # Explicitly set y-axis limits

las=1) # Makes axis labels horizontal

This r code uses the plot() command to produce plots of the data. (For more

information on plotting in r, see Sect. A.3.10.) The formula FEV ~ Age is read

as ‘FEV is modelled by Age’. The input data=lungcap indicates that lungcap

is the data frame in which to find the variables FEV and Age. Continue by

plotting FEV against the remaining variables:

> plot( FEV ~ Ht, data=lungcap, main="FEV vs height",

xlab="Height (in inches)", ylab="FEV (in L)",

las=1, ylim=c(0, 6) )

> plot( FEV ~ Gender, data=lungcap,

main="FEV vs gender", ylab="FEV (in L)",

las=1, ylim=c(0, 6))

> plot( FEV ~ Smoke, data=lungcap, main="FEV vs Smoking status",

ylab="FEV (in L)", xlab="Smoking status",

las=1, ylim=c(0, 6))

(Recall that Smoke was declared a factor in Example 1.2.) Notice that r

uses different types of displays for plotting fev against covariates (top pan-

els) than against factors (bottom panels). Boxplots are used (by default)

for plotting fev against factors: the solid horizontal centre line in each box

represents the median (not the mean), and the limits of the central box rep-

resent the upper and lower quartiles of the data (approximately 75% of the

observations are less than the upper quartile, and approximately 25% of the

observations are less than the lower quartile). The lines from the central box

extend to the largest and smallest values, except for outliers which are in-

dicated by individual points (such as a large fev for a few smokers). In r,

outliers are defined, by default, as observations more than 1.5 times the inter-

quartile range (the difference between the upper and lower quartiles) more

extreme than the upper or lower limits of the central box.

The plots (Fig. 1.1) show a moderate relationship (reasonably large vari-

ation) between fev and age, that is possibly linear (at least until about 15

years of age). However, a stronger relationship (less variation) is apparent

between fev and height, but this relationship does not appear to be linear.

61StatisticalModels

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

ll

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

ll

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

0 5 10 15 20

0

1

2

3

4

5

6

FEV vs age

Age (in years)

FEV (in L)

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

ll

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

ll

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

45 50 55 60 65 70 75

0

1

2

3

4

5

6

FEV vs height

Height (in inches)

FEV (in L)

FM

0

1

2

3

4

5

6

FEV vs gender

Gender

FEV (in L)

l

l

l

l

l

l

l

l

l

Non−smoker Smoker

0

1

2

3

4

5

6

FEV vs Smoking status

Smoking status

FEV (in L)

Fig. 1.1 Forced expiratory volume (fev ) plotted against age (top left), height (top

right), gender (bottom left) and smoking status (bottom right) for the data in Table 1.1

(Sect. 1.3)

The variation in fev appears to increase for larger values of fev also. In gen-

eral, it also appears that males have a slightly larger fev, and show greater

variation in fev, than females. Smokers appear to have a larger fev than

non-smokers.

While many of these statements are expected, the final statement is sur-

prising, and may suggest that more than one variable should be examined at

once. The plots in Fig. 1.1 only explore the relationships between fev and

each explanatory variable individually, so we continue by exploring relation-

ships involving more than two variables at a time.

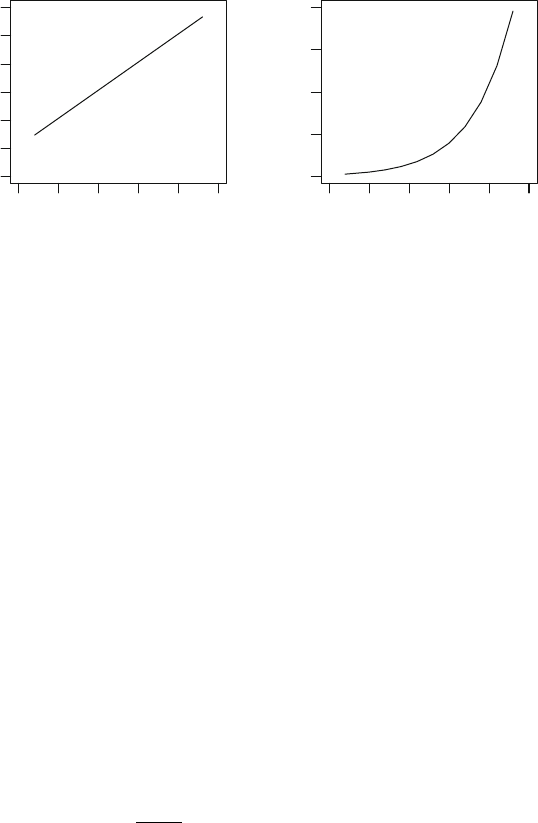

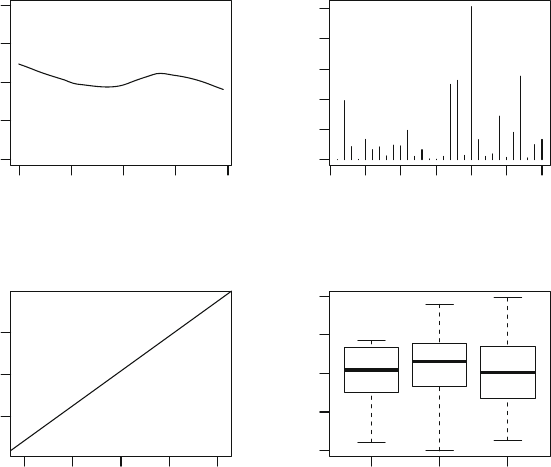

One way to do this is to plot the data separately for smokers and non-

smokers (Fig. 1.2), using similar scales on the axes to enable comparisons:

> plot( FEV ~ Age,

data=subset(lungcap, Smoke=="Smoker"), # Only select smokers

main="FEV vs age\nfor smokers", # \n means `new line'

ylab="FEV (in L)", xlab="Age (in years)",

ylim=c(0, 6), xlim=c(0, 20), las=1)

1.3 Plotting Data 7

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

ll

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

0 5 10 15 20

0

1

2

3

4

5

6

FEV vs age

for smokers

Age (in years)

FEV (in L)

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

ll

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

0 5 10 15 20

0

1

2

3

4

5

6

FEV vs age

for non−smokers

Age (in years)

FEV (in L)

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

ll

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

45 50 55 60 65 70 75

0

1

2

3

4

5

6

FEV vs height

for smokers

Height (in inches)

FEV (in L)

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

ll

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

l

45 50 55 60 65 70 75

0

1

2

3

4

5

6

FEV vs height

for non−smokers

Height (in inches)

FEV (in L)

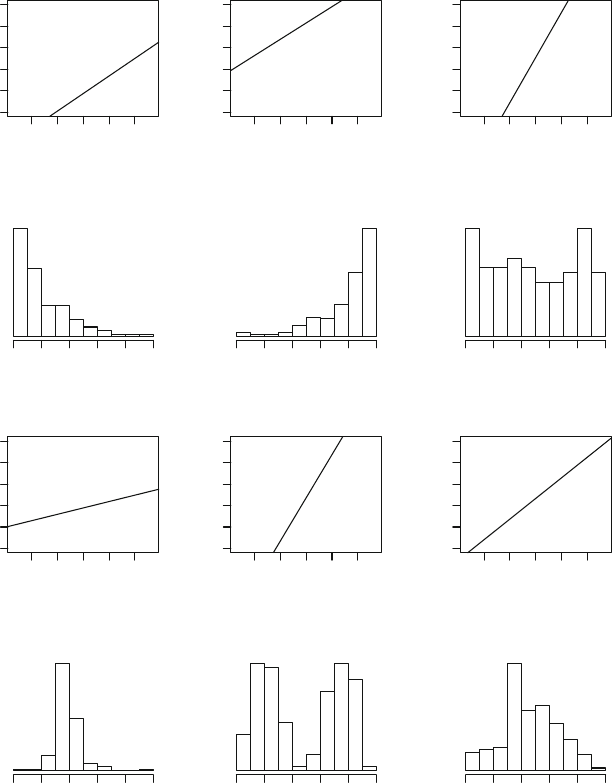

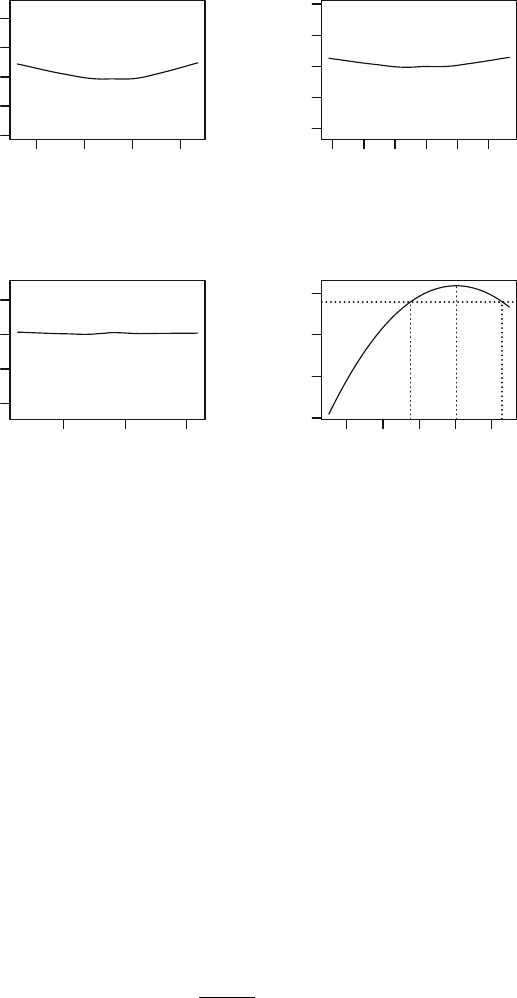

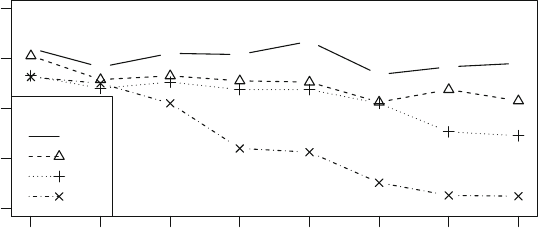

Fig. 1.2 Plots of the lung capacity data: the forced expiratory volume (fev) plotted

against age, for smokers (top left panel) and non-smokers (top right panel); and the

forced expiratory volume (fev ) plotted against height, for smokers (bottom left panel)

and non-smokers (bottom right panel) (Sect. 1.3)

> plot( FEV ~ Age,

data=subset(lungcap, Smoke=="Non-smoker"), # Only select non-smokers

main="FEV vs age\nfor non-smokers",

ylab="FEV (in L)", xlab="Age (in years)",

ylim=c(0, 6), xlim=c(0, 20), las=1)

> plot( FEV ~ Ht, data=subset(lungcap, Smoke=="Smoker"),

main="FEV vs height\nfor smokers",

ylab="FEV (in L)", xlab="Height (in inches)",

xlim=c(45, 75), ylim=c(0, 6), las=1)

> plot( FEV ~ Ht, data=subset(lungcap, Smoke=="Non-smoker"),

main="FEV vs height\nfor non-smokers",

ylab="FEV (in L)", xlab="Height (in inches)",

xlim=c(45, 75), ylim=c(0, 6), las=1)

Note that == is used to make logical comparisons. The plots show that smok-

ers tend to be older (and hence taller) than non-smokers and hence are likely

to have a larger fev.

81StatisticalModels

Another option is to distinguish between smokers and non-smokers when

plotting the FEV against Age. For these data, there are so many observa-

tions that distinguishing between smokers and non-smokers is difficult, so we

first adjust Age so that the values for smokers and non-smokers are slightly

separated:

> AgeAdjust <- lungcap$Age + ifelse(lungcap$Smoke=="Smoker", 0, 0.5)

The code ifelse( lungcap$Smoke=="Smoker", 0, 0.5) adds zero to the

value of Age for youth labelled with Smoker, and adds 0.5 to youth labelled

otherwise (that is, non-smokers). Then we plot fev against this variable:

(Fig. 1.3, top left panel):

> plot( FEV ~ AgeAdjust, data=lungcap,

pch = ifelse(Smoke=="Smoker", 3, 20),

xlab="Age (in years)", ylab="FEV (in L)", main="FEV vs age", las=1)

The input pch indicates the plotting character to use when plotting; then,

ifelse( Smoke=="Smoker", 3, 20) means to plot with plotting charac-

ter 3 (a ‘plus’ sign) if Smoke takes the value "Smoker", and otherwise to

plot with plotting character 20 (a filled circle). See ?points for an explana-

tion of the numerical codes used to define different plotting symbols. Recall

that in Example 1.2, Smoke was declared as a factor with two levels that

were labelled Smoker and Non-smoker.Thelegend() command produces

the legend:

> legend("topleft", pch=c(20, 3), legend=c("Non-smokers","Smokers") )

The first input specifies the location (such as "center" or "bottomright").

The second input gives the plotting notation to be explained (such as the

points, using pch, or the line types, using lty). The legend input provides

the explanatory text. Use ?legend for more information.

A boxplot can also be used to show relationships (Fig. 1.3, top right panel):

> boxplot(lungcap$FEV ~ lungcap$Smoke + lungcap$Gender,

ylab="FEV (in L)", main="FEV, by gender\n and smoking status",

las=2, # Keeps labels perpendicular to the axes

names=c("F:\nNon", "F:\nSmoker", "M:\nNon", "M:\nSmoker"))

Another way to show the relationship between three variables is to use

an interaction plot, which shows the relationship between the levels of two

factors and (by default) the mean response of a quantitative variable. The

appropriate r function is interaction.plot() (Fig. 1.3, bottom panels):

> interaction.plot( lungcap$Smoke, lungcap$Gender, lungcap$FEV,

xlab="Smoking status", ylab="FEV (in L)",

main="Mean FEV, by gender\n and smoking status",

trace.label="Gender", las=1)

> interaction.plot( lungcap$Smoke, lungcap$Gender, lungcap$Age,

xlab="Smoking status", ylab="Age (in years)",

main="Mean age, by gender\n and smoking status",

trace.label="Gender", las=1)

1.3 Plotting Data 9

l

l

l

l

l

l

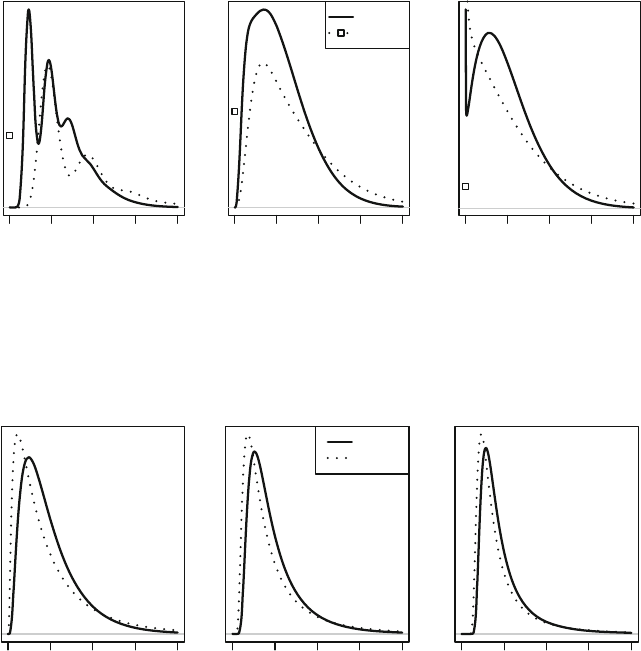

l