1

Are Current Task-oriented Dialogue Systems Able

to Satisfy Impolite Users?

Zhiqiang Hu

∗

, Roy Ka-Wei Lee

∗

, Nancy F. Chen

†

∗

Singapore University of Technology and Design, Singapore

†

Institute of Infocomm Research (I2R), A*STAR, Singapore

nfychen@i2r.a-star.edu.sg

Abstract—Task-oriented dialogue (TOD) systems have assisted

users on many tasks, including ticket booking and service

inquiries. While existing TOD systems have shown promising per-

formance in serving customer needs, these systems mostly assume

that users would interact with the dialogue agent politely. This

assumption is unrealistic as impatient or frustrated customers

may also interact with TOD systems impolitely. This paper aims

to address this research gap by investigating impolite users’

effects on TOD systems. Specifically, we constructed an impolite

dialogue corpus and conducted extensive experiments to evaluate

the state-of-the-art TOD systems on our impolite dialogue corpus.

Our experimental results show that existing TOD systems are

unable to handle impolite user utterances. We also present

a data augmentation method to improve TOD performance

in impolite dialogues. Nevertheless, handling impolite dialogues

remains a very challenging research task. We hope by releasing

the impolite dialogue corpus and establishing the benchmark

evaluations, more researchers are encouraged to investigate this

new challenging research task.

Index Terms—Task-oriented dialogue systems, impolite users,

data augmentation.

I. INTRODUCTION

Motivation. Task-oriented dialogue (TOD) systems play a

vital role in many businesses and service operations. Specif-

ically, these systems are deployed to assist users with spe-

cific tasks such as ticket booking and restaurant reservations

through natural language conversations. TOD systems are

usually built through a pipeline architecture that consists of

four sequential modules, including natural language under-

standing (NLU), dialogue state tracking (DST), policy learning

(POL), and natural language generation (NLG) [1]–[4]. More

recently, researchers have also explored leveraging large pre-

trained language models to improve the performance of TOD

systems [5]–[7]. These TOD systems have demonstrated their

effectiveness in understanding and responding to the users’

needs through conversations.

As most TOD systems are developed to serve and assist

humans in performing specific tasks, the politeness of the

TD systems remains a key design consideration. For instance,

Gupta et al. [8] presented POLLy (Politeness for Language

Learning), a system that combines a spoken language genera-

tor with an AI Planner to model Brown and Levinson’s theory

of politeness in TOD. Bothe et al. [9] developed a dialogue-

based navigation approach incorporating politeness and so-

ciolinguistic features for robotic behavioral modeling. More

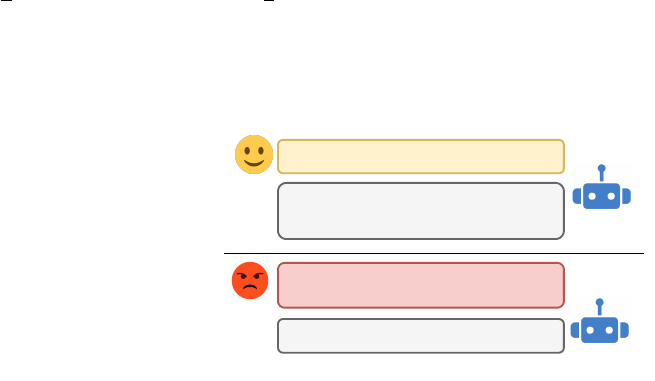

User

Bot

(PPTOD)

Impolite

User

Bot

(PPTOD)

What is the phone number and address?

The phone number for the Portuguese

restaurant is 01223 361355 and the address is

Cambridge Leisure Park Clifton Way.

phone number and address? Do I really have to

chase you for every single detail?

I'm sorry, I don't.

Fig. 1. Examples of two dialogue interactions between PPTOD and two types

of users: normal user (top) and impolite user (bottom).

recently, Mishra et al. [10] proposed a politeness adaptive

dialogue system (PADS) that can interact with users politely

and showcases empathy.

Nevertheless, the above studies have focused on generating

polite dialogues and ignored the users’ politeness (or impolite-

ness) in the conversation. Therefore it is unclear how the TOD

systems would respond when the users interact with the TOD

systems in an impolite manner, especially when the users are

in a rush to get information or frustrated when TOD systems

provide irrelevant responses. Consider the example in Figure 1,

we notice that the TOD system, PPOTD [11], is able to provide

a proper response to a user who presents the question in

a normal or polite manner. However, when encountering an

impolite user, PPOTD is not able to provide a proper and

relevant response. Ideally, the TOD systems should be robust

in handling user requests regardless of their politeness.

A straightforward approach to improving TOD systems’

ability to handle impolite users is to train the dialogue systems

with impolite user utterances. Unfortunately, most of the

existing TOD datasets [12]–[15] only capture user utterances

that are neural or polite. The lack of an impolite dialogue

dataset also limits the evaluation of TOD systems; to the

best of our knowledge, there are no existing studies on the

robustness of TOD systems in handling problematic users.

Research Objectives. To address the research gaps, we aim

to investigate the effects of impolite user utterances on TOD

systems. Working towards this goal, we collect and annotate

an impolite dialogue corpus by manually rewriting the user

utterances of the MultiWOZ 2.2 dataset [13]. Specifically,

arXiv:2210.12942v1 [cs.CL] 24 Oct 2022

2

human annotators are recruited to rewrite the user utterances

with role-playing scenarios that could encourage impolite user

utterances. For example, “imagine you are in a rush and

frustrated that the systems have given the wrong response for

the second time.”. In total, the human annotators have rewritten

over 10K impolite user utterances. Statistical and linguistic

analyses of the impolite user utterances are also performed to

understand the constructed dataset better.

The impolite dialogue corpus is subsequently used to eval-

uate the performance and limitations of state-of-the-art TOD

systems. Specifically, we have designed experiments to evalu-

ate the robustness of TOD systems in handling impolite users

and understand the effects of impolite user utterances on these

systems. We have also explored solutions to improve TOD

systems’ robustness in handling impolite users. A possible

solution is to train the TOD systems with more data. However,

the construction of a large-scale impolite dialogue corpus is a

laborious and expensive process. Therefore, we propose a data

augmentation method that utilizes text style transfer techniques

to improve TOD systems’ performance in impolite dialogues.

Contributions. We summarize our contributions as follows:

• We collect and annotate an impolite dialogue corpus

to support the evaluation of TOD systems performance

when handling impolite users. We hope that the impolite

dialogue dataset will encourage researchers to propose

TOD systems that are robust in handling users’ requests.

• We evaluate the performance of six state-of-the-art TOD

systems using our impolite dialogue corpus. The eval-

uation results showed that existing TOD systems have

difficulty handling impolite users’ requests.

• We propose a simple data augmentation method that

utilizes text style transfer techniques to improve TOD

systems’ performance in impolite dialogues.

II. RELATED WORK

1) Task Oriented Dialogue Systems: With the rapid ad-

vancement of deep learning techniques, TOD systems have

shown promising performance in handling user requests and

interactions. Recent studies have focused on end-to-end TOD

systems to train a general mapping from user utterance to the

system’s natural language response [16]–[20]. Yang et al. [5]

proposed UBAR by fine-tuning the large pre-trained unidirec-

tional language model GPT-2 [21] on the entire dialog session

sequence. The dialogue session consists of user utterances,

belief states, database results, system actions, and system re-

sponses of every dialog turn. Su et al. [11] proposed PPTOD to

effectively leverage pre-trained language models with a multi-

task pre-training strategy that increases the model’s ability

with heterogeneous dialogue corpora. Lin et al. [7] proposed

Minimalist Transfer Learning (MinTL) to plug-and-play large-

scale pre-trained models for domain transfer in dialogue task

completion. Zang et al. [13] proposed the LABES model,

which treated the dialogue states as discrete latent variables to

reduce the reliance on turn-level DST labels. Kulh

´

anek et al.

[22] proposed AuGPT with modified training objectives for

language model fine-tuning and data augmentation via back-

translation [23] to increase the diversity of the training data.

Existing studies have also leveraged knowledge bases to track

pivotal and critical information required in generating TOD

system agent’s responses [24]–[27]. For instance, Madotto

et al. [28] dynamically updated a knowledge base via fine-

tuning by directly embedding it into the model parameters.

Other studies have also explored reinforcement learning to

build TOD systems [29]–[31]. For instance, Zhao et al. [29]

utilized a Deep Recurrent Q-Networks (DRQN) for building

TOD systems.

2) Modeling Politeness in Dialogue Systems: Recent stud-

ies have also attempted to improve dialogue systems to gen-

erate responses in a more empathetic manner [32]–[35]. Yu et

al. [33] proposed to include user sentiment obtained through

multimodal information (acoustic, dialogic, and textual) in

the end-to-end learning framework to make TOD systems

more user-adaptive and effective. Feng et al. [34] constructed

a corpus containing task-oriented dialogues with emotion

labels for emotion recognition in TOD systems. However, the

impoliteness of users is not modeled as too few instances

exist in the MultiWOZ dataset. The lack of impolite dialogue

data motivates us to construct an impolite dialogue corpus to

facilitate downstream analysis.

Politeness is a human virtue and a crucial aspect of com-

munication [36], [37]. Danescu-Niculescu-Mizil et al. [37]

proposed a computational framework to identify the linguistic

aspects of politeness with application to social factors. Re-

searchers have also attempted to model and include politeness

in TOD systems [38]–[40]. For instance, Golchha et al. [38]

utilized a reinforced pointer generator network to transform

a generic response into a polite response. More recently,

Madaan et al. [40] adopted a text style transfer approach

to generate polite sentences while preserving the intended

content. Nevertheless, most of these studies have focused

on generating polite responses, neglecting the handling of

impolite inputs, i.e., impolite user utterances. This study aims

to fill this research gap by extensively evaluating state-of-the-

art TOD systems’ ability to handle impolite dialogues.

3) Data Augmentation in Dialogue Systems: Data augmen-

tation, which aims to enlarge training data size in machine

learning systems, is a common solution to the data scarcity

problem. Data augmentation methods has also been widely

used in dialogue systems [22], [41], [42]. For instance, Kurata

et al. [43] trained an encoder-decoder to reconstruct the utter-

ances in training data. To augment training data, the encoder’s

output hidden states are perturbed randomly to yield different

utterances. Hou et al. [41] proposed a sequence-to-sequence

generation-based data augmentation framework that models

relations between utterances of the same semantic frame in

the training data. Gritta et al. [42] proposed the Conversation

Graph (ConvGraph), which is a graph-based representation of

dialogues, to augment data volume and diversity by generating

dialogue paths. In this paper, we propose a simple data aug-

mentation method that utilizes text style transfer techniques to

generate impolite user utterances for training data to improve

TOD systems’ performance in dealing with impolite users.

3

III. IMPOLITE DIALOGUE CORPUS

We construct an impolite dialogue dataset to support our

evaluation of TOD systems’ ability to interpret and respond

to impolite users. Specifically, we recruited eight native En-

glish speakers to rewrite the user utterances in MultiWOZ

2.2 dataset [13] in an impolite manner. To the best of our

knowledge, this is the first impolite task-oriented dialogue

corpus. In the subsequent sections, we will discuss the corpus

construction process and provide a preliminary analysis of the

constructed impolite dialogue corpus.

A. Corpus Construction

MultiWOZ 2.2 [13] is a large-scale multi-domain task-

oriented dialogue benchmark that contains dialogues in seven

domains, including attraction, hotel, hospital, bus, restaurant,

train, and taxi. This dataset is also popular and commonly

used to evaluate existing TOD systems [5], [7], [11], [17],

[18], [22]. We performed a preliminary analysis using the

Stanford Politeness classifier trained on Wikipedia requests

data [37] to assign a politeness score to the user utterances in

MultiWOZ 2.2. We found that 99% of the user utterances are

classified as polite. Hence, we aim to rewrite the user utterance

in MultiWOZ 2.2 to create our impolite dialogue corpus.

Impolite Rewriting. The goal is to rewrite the user ut-

terance in the MultiWOZ 2.2 dataset and present the user

utterance in a rude and impolite manner. We randomly sampled

a subset of dialogues from MultiWOZ 2.2 for rewriting.

Next, we recruited eight native English speakers to rewrite

the user utterances. For each user utterance, the annotators

are presented with the entire dialogue history to have the

conversation’s overall context. The annotators are tasked to

rewrite the user utterances with three objectives: (i) the

rewritten sentences should be impolite, (ii) the content of

the rewritten sentence should be semantically close to the

original sentence, and (iii) the rewritten sentences should be

fluent. To further encourage the diversity of the impolite user

utterance, we also prescribed six role-playing scenarios to aid

the annotators in the rewriting tasks. For instance, we asked

the annotators to imagine they were customers in a bad mood

or impatient customers who wanted to get the information

quickly. The details of the role-playing scenarios are shown

in Table I, and the annotation system interface is included in

the Appendix A-A.

Annotation Quality Control. Impoliteness is subjective,

and the annotators may have different interpretations of im-

politeness. Therefore, we implement iterative checkpoints to

evaluate the quality of the rewritten user utterance. Specifi-

cally, we conducted peer evaluation at various checkpoints to

allow annotators to rate the quality of each other’s rewritten

sentences. The annotators are tasked to rate the rewritten user

utterance based on the following three criteria:

• Politeness. Rate the sentence politeness using a 5-point

Likert scale. 1: strongly opined that the sentence is

impolite, and 5: strongly opined that the sentence is

polite.

• Content Preservation. Compare the original user utter-

ance and rewrite sentence and rate the amount of content

TABLE I

Role-playing scenarios for impolite user annotation.

No. Scenario

1 Imagine that the customer is a sarcastic person in

a bad mood.

2 Imagine that the customer is an impatient customer

that wants to get the information fast.

3 Imagine that the customer is in a bad mood as

something bad has just happened (e.g., just had an

argument with friends or spouse).

4 Imagine that the customer is tired and hungry after

a long-haul flight and need to get this information

fast.

5 Imagine that the customer is a spoilt-brat with a

lot of money.

6 Imagine that the customer is getting help from

CSA for the third time and they didn’t get the

previous information right.

preserved in the rewrite sentence using a 5-point Likert

scale. 1: the original and rewritten sentences have very

different content, and 5: the original and rewritten sen-

tences have the same content.

• Fluency. Rate the fluency of rewritten sentences using

a 5-point Likert scale. 1: unreadable with too many

grammatical errors, 5: perfectly fluent sentence.

Each user utterance is evaluated by two annotators. Never-

theless, we recognize that it is unnatural for all utterances in a

dialogue to be impolite. Thus, we would consider a dialogue

impolite if 50% of the user utterances in a conversation are

rated as impolite (i.e., with Politeness score 2 or less). This

exercise allows the annotators to align their understanding of

the rewriting task. The annotators will revise the unqualified

dialogues until they are rated impolite in the peer evaluation.

While the annotators are tasked to assess each other’s work,

they are unaware of their evaluation scores to mitigate any

biases. Therefore, the annotators might learn new ways to write

impolite dialogues from each other, but they are not writing

to “optimize” any assessment scores in the human evaluation.

B. Corpus Analysis

In total, the annotators rewrote 1,573 dialogues, comprising

10,667 user utterances. Table II shows the distributions of the

MultiWOZ 2.2 dataset and our impolite dialogue corpus. As

we have sampled a substantial number of dialogues from Mul-

tiWOZ 2.20, we notice that the rewritten impolite dialogues

follow similar domain distributions as the original dataset.

Table III shows the results of the final peer evaluation

of all rewritten impolite user utterances. Specifically, the

average politeness, content preservation, and fluency scores of

the rewritten impolite user utterances are reported. The high

average content preservation and fluency scores suggest that

the high-quality rewritten utterances retain the original user’s

intention in the conversations. More importantly, the average

politeness score is 1.96, indicating that most of the rewrites

are impolite but not too “offensive”. We further examine and

show the politeness score distribution of the rewritten impolite

dialogue in Figure 2. Note that the politeness score of dialogue

4

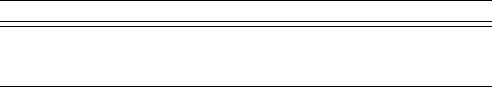

TABLE II

Domain distribution of MultiWOZ 2.2 and our annotated impolite dialogue corpus. Numbers in () represent the percentage of dialogues in each domain.

Data Restaurant Attraction Hotel Taxi Train&Bus Hospital

MultiWOZ 2.2 3836 (45.5%) 2681 (31.8%) 3369 (39.9%) 1463 (17.3%) 2969 (35.2%) 107 (1.3%)

Impolite Dialogue Corpus 694 (44.0%) 545 (28.8%) 630 (40.0%) 295 (18.7%) 528 (33.5%) 107 (6.8%)

TABLE III

Peer evaluation results.

Metric Avg. Score

Politeness 1.96

Content Preservation 4.68

Fluency 4.67

Fig. 2. Distribution of dialogues binned according to the politeness scores.

is obtained by averaging the politeness scores of the rewritten

user utterances in the given dialogue. We observe that most

rewritten dialogues are impolite, having politeness scores of

less than 2.5.

We also empirically examine the top 20 keywords in the

original and rewritten dialogues (shown in Table IV. We notice

that in the original dialogues, users tend to express gratitude

by using terms such as “thank” and adopt courtesy terms

such “please”, “help”. Users in original dialogues also posted

their requests as questions using terms such as “would you”.

Conversely, in the rewritten dialogues, gratitude terms are

absent. Users are less courteous and issued direct commands

to the agent using terms such as “find”, “get”, “give”. Frequent

terms “hurry” and “fast” also suggested the users’ impatience

in the dialogues.

As we aim to keep the rewritten utterances natural and

realistic, we did not limit the users to using offensive language

(neither did we encourage them). Interestingly, we have also

checked the impolite user utterances and found offensive

languages (e.g., “idiot”, “stupid”, “f*ck”, etc.) being used in

some of the rewritten utterances.

IV. MODELS

We experiment with six state-of-the-art TOD systems and

evaluate their performance in handling user utterances in our

constructed impolite dialogue dataset. These TOD systems are

not designed to respond to impolite users or trained with impo-

TABLE IV

Top 10 keywords in original and rewritten dialogues.

Top 20 keywords in Orig-

inal Dialogues

Top 20 keywords in

Rewritten Dialogues

need, please, thank, yes,

like, looking, would, num-

ber, book, also, restaurant,

thanks, help, train, hotel,

Cambridge, people, find,

free, get

find, get, give, time, need,

book, want, number, go,

hurry, ok, fast, one, restau-

rant,make, people, job, bet-

ter, train, Cambridge

lite dialogues. Thus, they may not perform well on our corpus.

A simple approach to improve the TOD systems’ performance

is to train them with impolite user utterances. However, there

is inadequate impolite dialogue data to train these systems.

Therefore, we propose a data augmentation strategy to enhance

the TOD systems’ ability to handle impolite users.

A. TOD Systems

Recent studies have proposed TOD systems with promising

performance on the MultiWOZ 2.2 dataset. For our study,

we select six TOD systems that have achieved state-of-the-

art performance on the task-oriented dialogue generation task.

Domain Aware Multi-Decoder (DAMD) [17] utilizes the one-

to-many property that assumes that multiple responses may

be appropriate for the same dialog context to generate diverse

dialogue responses. LABES [18] is a probabilistic dialogue

model with belief states represented as discrete latent variables

and jointly modeled with system responses. MinTL [7] is

a transfer learning framework that allows plug-and-play pre-

trained seq2seq models to jointly learn dialogue state tracking

and dialogue response generation. UBAR [5] fine-tunes GPT-

2 [21] on the entire dialogue session sequence for dialogue

response generation. Similarly, AuGPT [22] fine-tunes GPT-2

with modified training objectives and enhanced data through

back-translation. PPTOD [11] unifies the TOD task as multi-

ple generation tasks, including intent detection, dialogue state

tracking, and response generation.

B. Data Augmentation with Text Style Transfer

Training TOD systems with impolite dialogues can im-

prove their capabilities in handling impolite users. However,

collecting impolite dialogues is a challenging task as human

annotation is a laborious and expensive process. Therefore, we

explore augmenting TOD systems by generating impolite user

utterances automatically. We formulate the impolite utterance

generation as a supervised text style transfer task [44] (i.e.,

politeness transfer). Specifically, the text style transfer algo-

rithms will be trained using a parallel dataset; we have pairs

5

of aligned polite (i.e., original) and the corresponding impolite

(i.e., rewritten) user utterances in our impolite dialogue corpus.

Subsequently, the trained text style transfer algorithms will

be able to take in unseen user utterances from MultiWOZ

2.2 dataset as input and generate impolite user utterances as

output. Finally, the generated impolite user utterances will be

augmented to train TOD systems.

In our implementation, we first fine-tune the pre-trained

language model T5-large [45] and BART-large [46] with polite

user utterances as inputs and impolite rewrites as targets. Then,

the fine-tuned T5 and BART model is used to transfer the

politeness for user utterances in the rest of the dialogues

in MultiWOZ 2.2 dataset. We have also included a state-of-

the-art text style transfer model, DAST [47], to perform the

politeness transfer task.

We evaluate the text style transferred user utterance on

three automatic metrics, including politeness, content preser-

vation, and fluency. For politeness, we train a politeness

classifier based on the BERT base model [48] with the original

polite user utterances and corresponding impolite rewrites.

The trained classifier predicts if a given user utterance is

correctly transferred to impolite style, and the accuracy of the

predictions is reported. For content preservation, we compute

the BLEU score [49] between the transferred sentences and

original user utterances. For fluency, we use GPT-2 [21] to

calculate perplexity score (PPL) on the transferred sentences.

Finally, We compute the geometric mean (G-Mean score) of

ACC, BLEU, and 1/PPL to give an overall score of the models’

performance. We take the inverse of the calculated perplexity

score because a lower PPL score corresponds to better fluency.

These evaluation metrics are commonly used in existing text

style transfer studies [50].

Table V shows the automatic evaluation results of the style

transferred user utterances. We observe that both pre-trained

language models have achieved reasonably good performance

on the politeness style transfer task. Specifically, the fine-tuned

T5-large model achieves the best performance on the G-Mean

score and 85.3%. The transferred user utterances are mostly

impolite (i.e., high accuracy score) and fluent (i.e., low PPL).

The content is also well-presented. Interestingly, we noted

that DAST did not perform well for the politeness transfer

task. A possible reason could be that the DAST is designed

to perform non-parallel text style transfer, and the model did

not exploit the parallel information in the training data [47].

Another reason could be the small training dataset; DAST is

trained on our impolite dialogue corpus, while the T5 and

BART are pre-trained with a larger corpus and fine-tuned on

our impolite dialogue dataset.

The automatically generated impolite user utterances from

the fine-tuned T5-Large are augmented to the original Multi-

WOZ 2.2 dataset to train the TOD systems. We will discuss

the effects of data augmentation in our experiment section.

V. EVALUATION

One of the primary goals of this study is to evaluate the

TOD systems’ ability to handle impolite dialogues. Therefore,

we design automatic and human evaluation experiments to

TABLE V

Automatic evaluation results of text style transfer task.

Model ACC(%) BLEU PPL G-Mean

DAST 73.6 25.4 28.5 4.03

BART-large Fine-tune 96.4 37.6 6.2 8.36

T5-large Fine-tune 85.3 43.4 6.3 8.38

benchmark the performance of TOD systems on our impolite

dialogue corpus.

A. Automatic Evaluation

Over the past decades, many different automatic evaluation

methods [51]–[53] have been proposed to evaluate TOD sys-

tems. The evaluation methodologies are inextricably linked to

the properties of the evaluated dialogue system. For instance,

Nekvinda et al. [54] proposed their standalone standardized

evaluation scripts for the MultiWOZ dataset to eliminate in-

consistencies in data preprocessing and reporting of evaluation

metrics, i.e., BLEU score and Inform & Success rates. For our

study, we employ four automatic evaluation metrics that are

commonly used in existing studies [12], [54] to benchmark

the performance of TOD systems on impolite dialogues:

• Inform: The inform rate is the proportion of dialogues in

which the system mentions a name of an entity that does

not conflict with the current dialogue state or the user’s

goal.

• Success: The percentage of dialogues in which the system

provides the correct entity and answers all the requested

information.

• BLEU: The BLEU score between the generated utter-

ances and the ground truth is used to approximate the

output fluency.

• Combined. The combined score is computed through

(Inform+Success)×0.5+BLEU as an overall quality

measure suggested in [55].

B. Human Evaluation

There are relatively lesser studies that performed a human

evaluation on TOD systems [11], [17], [22] as such evaluations

are often expensive and laborious. In our study, we perform a

human evaluation to access the TOD systems on three criteria:

The human evaluation is conducted on a random subset of 100

dialogues with 50 of the original dialogue in the MultiWOZ

2.2 dataset and 50 corresponding dialogues from our impolite

dialogue corpus. We recruit four human evaluators, and at

least two human evaluators evaluate each dialogue. The human

evaluators are tasked to evaluate the generated dialogues on

the following criteria:

• Success: A binary indicator (yes or no) on whether

the dialogue system fulfills the information requirements

dictated by the user’s goals. For instance, this includes

whether the dialogue system has found the correct type

of venue and whether the dialogue system returned all

the requested information.

• Comprehension: A 5-point Likert scale that indicates the

TOD system’s level of comprehension of the user input.

6

1: the TOD system did not understand the user intention;

5: TOD system understands well the user input.

• Appropriateness: A 5-point Likert scale indicates if the

TOD system’s response is appropriate and human-like. 1:

the TOD system’s response is inappropriate and does not

make sense; 5: TOD system’s response is appropriate and

human-like.

VI. EXPERIMENTS

In this section, we design experiments to evaluate the

state-of-the-art TOD systems’ ability to handle impolite users

using our impolite dialogue corpus. The rest of this section

is organized as follows: We first present the details of the

experimental settings. Next, we perform automatic and human

evaluations of the TOD systems and analyze their performance

on our impolite dialogue corpus. Finally, we conduct further

empirical analyses to understand the TOD systems’ challenges

in handling impolite users.

A. Experimental Settings

Reproduce Models. We reproduce six state-of-the-art TOD

systems by training them on the MultiWOZ 2.2 dataset. For

TOD systems that have publicly released checkpoints, such as

AuGPT [22] and PPTOD [11], we directly use the released

checkpoints trained on MultiWOZ 2.2. For DAMD [17],

UBAR [5], MinTL [7], and LABES [18], we use their

published code implementations and optimize their hyperpa-

rameters to reproduce their reported results for MultiWOZ

2.2 dataset

1

. Table VI shows the reported and reproduced

performance of the TOD systems tested on the MultiWOZ

2.2 dataset. We observe that the reproduced performance

is equivalent to or slightly better than the reported results.

Our subsequent experiment will evaluate the reproduced TOD

systems on the impolite dialogue corpus.

Data Augmentation. To evaluate the effectiveness of our

proposed data augmentation solution, we train the six TOD

systems with MultiWOZ 2.2 dataset augmented with the au-

tomatically generated impolite dialogues. The data augmented

TOD systems will be evaluated against the reproduced models

on the impolite dialogue corpus.

Test Data. As we are interested in evaluating the TOD

systems’ performance on impolite dialogues, we utilized two

test sets to evaluate the reproduced and data augmented

TOD systems. We first evaluate the models on original user

utterances, which are polite or neutral user utterances that

we have rewritten to construct the impolite dialogue corpus

discussed in Section III-A. Next, we also evaluate the models

on our impolite dialogue corpus. Intuitively, the original and

impolite tests set have user utterances that discuss similar

content but are different in politeness. Evaluating the models

on the two test sets enables us to understand the TOD systems’

ability to handle user dialogues of different politeness.

B. Automatic Evaluation Results

Table VII shows the automatic evaluation results of the

six state-of-the-art TOD models and our data augmentation

1

https://github.com/budzianowski/MultiWOZ

TABLE VI

Reported and reproduced results of TOD systems on MultiWOZ 2.2 dataset.

Model Test Data Inform Success BLEU

DAMD reported 76.3 60.4 16.6

DAMD reproduced 81.8 68.8 18.5

LABES reported 78.1 67.1 18.1

LABES [18] reproduced 80.2 67.2 17.6

MinTL reported 80.0 72.7 19.1

MinTL reproduced 81.8 74.0 20.5

UBAR reported 83.4 70.3 17.6

UBAR reproduced 90.0 76.8 13.4

AuGPT reported 83.1 70.1 17.2

AuGPT reproduced 83.1 70.1 17.2

PPTOD reported 83.1 72.7 18.2

PPTOD reproduced 83.4 72.9 19.6

approach. Comparing the performance of TOD models on the

original and impolite test sets, we observe all TOD systems

performed worse on the impolite dialogues. Specifically, com-

pared to the performance on original dialogues, we notice a

7-15% decrease in the combined score when tested on the

impolite user utterances. The decrease in inform and success

rates suggests that the models have difficulty understanding

users’ intentions from impolite user utterances and responding

correctly. The reproduced TOD system with the best perfor-

mance on the impolite test set is UBAR, achieving a combined

score of 83.7. Nevertheless, UBAR still suffers a 9% drop

in the combined score compared to its performance on the

original test set.

It is unsurprising that the reproduced models do not perform

well on the impolite test set as they are trained on the

MultiWOZ 2.2 dataset, which largely contains only polite or

neutral user utterances. To address this limitation, we augment

reproduced models with generated impolite dialogues using

our text style transfer data augmentation approach. We observe

the data augmentation strategy is able to boost the perfor-

mance of all six TOD systems. Specifically, the proposed data

augmentation approach has achieved the greatest improvement

on PPTOD’s performance, increasing the model’s combined

score to 85.2. Nevertheless, we also noted a gap between

the data augmented models’ performance on impolite user

utterances, and the reproduced models’ performance on the

original test set. Thus, while our data augmentation method

can help improve the TOD systems’ performance on impolite

user dialogues, there is still a gap to bridge before TOD

systems can effectively handle impolite users.

Interestingly, we also observe that the success rates of all

models decrease after the data augmentation, but there is

a significant improvement in the BLEU score. The success

metric measures the percentage of dialogues in which the

system provides the correct entity and answers all requested

information. While the data augmentation method may im-

prove the dialogue in generating a response more similar

to the ground truth (i.e., a higher BLEU score), it may not

guarantee that models are able to learn well how to respond

to impolite dialogue with the request information. We have

manually examined the response to impolite user utterances

by the reproduced and data augmented models and found that

7

TABLE VII

Automatic evaluation results. The numbers in () represent the decrease in the percentage of Combined score on impolite user utterances compared to the

performance on original user utterances. The best performing models on the original and impolite user dialogues are underlined and bold, respectively.

Model Test Data Inform Success BLEU Combined

DAMD Original 78.2 57.6 17.9 85.8

DAMD Impolite 72.8 52.5 16.0 78.7 (↓ 8.3%)

DAMD + Data Augmentation Impolite 71.6 50.3 19.3 80.3

LABES Original 75.4 59.1 18.1 85.4

LABES Impolite 70.1 53.0 16.8 78.4 (↓ 8.2%)

LABES + Data Augmentation Impolite 72.2 49.6 19.3 80.2

MinTL Original 75.9 62.2 20.1 89.2

MinTL Impolite 70.5 54.9 17.4 80.1 (↓ 10.2%)

MinTL + Data Augmentation Impolite 72.8 51.2 21.3 83.3

UBAR Original 85.5 68.3 15.1 92.0

UBAR Impolite 81.1 61.7 12.4 83.7 (↓ 9.0%)

UBAR + Data Augmentation Impolite 80.0 61.0 13.5 84.1

AuGPT Original 75.6 56.6 17.9 84.0

AuGPT Impolite 72.2 51.3 15.9 77.7 (↓ 7.5%)

AuGPT + Data Augmentation Impolite 73.8 48.7 17.5 78.8

PPTOD Original 82.3 66.1 18.9 93.1

PPTOD Impolite 71.1 52.3 16.7 78.4 (↓ 15.8%)

PPTOD + Data Augmentation Impolite 74.8 48.4 23.6 85.2

TABLE VIII

Human evaluation results. The best performing models on the original and impolite user dialogues are underlined and bold, respectively.

Model Test Data Success Comprehension Appropriateness

UBAR Original 80% 4.68 4.43

UBAR Impolite 70% 4.47 4.38

UBAR + Data augmentation Impolite 72% 4.55 4.41

PPTOD Original 78% 4.64 4.45

PPTOD Impolite 64% 4.32 4.41

PPTOD + Data augmentation Impolite 68% 4.52 4.43

the TOD systems have difficulties responding to impolite user

utterances with requested information. This also suggests that

handling impolite dialogue is a challenging task that requires

more than simple data augmentation; specialized techniques

may need to be designed to handle impolite users. We hope

that our impolite dialogue corpus would encourage more

researchers to design robust TOD systems that are robust in

handling impolite users’ requests.

C. Human Evaluation Results

Automatic metrics only validate the TOD systems’ per-

formance on one single dimension at a time. In contrast,

human can provide an ultimate holistic evaluation. Therefore

we perform the human evaluation on the top-performing TOD

systems that achieved the best performance in the automatic

evaluation, namely, UBAR and PPTOD. Table VIII shows the

human evaluation results. We observe that UBAR outperforms

PPTOD model with 80% success rate and 4.68 comprehension

score on the original dialogues while 72% success rate and

4.55 comprehension score on the impolite dialogues. The

human evaluation results also concurred with the automatic

evaluation results, suggesting a gap between the models’

performance on impolite and original dialogues.

Similar to the automatic evaluation results, the data aug-

mentation method improves the performance of UBAR and

PPTOD on impolite dialogues. Nevertheless, the TOD systems

still have room for improvement compared to their responses

in the original dialogues. To check for human evaluator biases,

we compute the inter-annotator agreement between the eval-

uators. The Cohen’s kappa coefficients on task success rate,

comprehension, and appropriateness are 0.78, 0.76, and 0.66,

respectively, suggesting that the evaluators have substantially

high agreement on the three human evaluation metrics.

D. Case Studies and Analysis

Besides evaluating the state-of-the-art TOD systems’ per-

formance on impolite dialogues, we also empirically analyze

the issues of handling impolite users. Specifically, we aim

to investigate the question: “What makes impolite dialogue

challenging for TOD systems?”. Working towards this goal, we

empirically examine case studies of TOD systems’ responses

to impolite dialogues. We will also analyze the correlation

between automatic performance metrics and the statistical

attributes of impolite dialogues.

Case studies. Table IX shows an example in one turn of

a conversation and the delexcalized responses generated by

the TOD systems. We noted that the rewritten impolite user

utterance is longer than the original. We also include the

ground-truth agent response from the MultiWOZ 2.2 dataset.

From the TOD systems’ responses, we observed that most

models could not respond effectively to the impolite user;

most of the TOD systems are unable to understand the user’s

8

TABLE IX

An example of a conversation and the delexicalized responses generated by the TOD systems.

Original User: Pick me up from the hotel.

Impolite User: From the hotel. Where else? Don’t waste my time, OK?

Groundtruth: Your taxi has been booked. It will be a [value car] and the contact number is [value phone].

PPTOD: I am sorry, but I am not finding anything else for you.

UBAR: You are welcome. Enjoy your stay. Goodbye.

MinTL: OK. Is there anything else I can help you with?

LABES: There seems to be an error. Can you please restate your request?

AuGPT: I am unable to book that for you. Would you like to try another day and time?

DAMD: I am sorry, I do not know.

PPTOD+DA: I have booked you a [value car]. The contact number is [value phone].

UBAR+DA: i have booked you a [value car], contact number [value phone].

MinTL+DA: I have booked you a taxi to pick you up at [value leave].

LABES+DA: I can not find a train. Can you please restate your request?

AuGPT+DA: A [value car] will pick you up at [value leave].

DAMD+DA: I am sorry, I am not sure if there is anything else I can help you with.

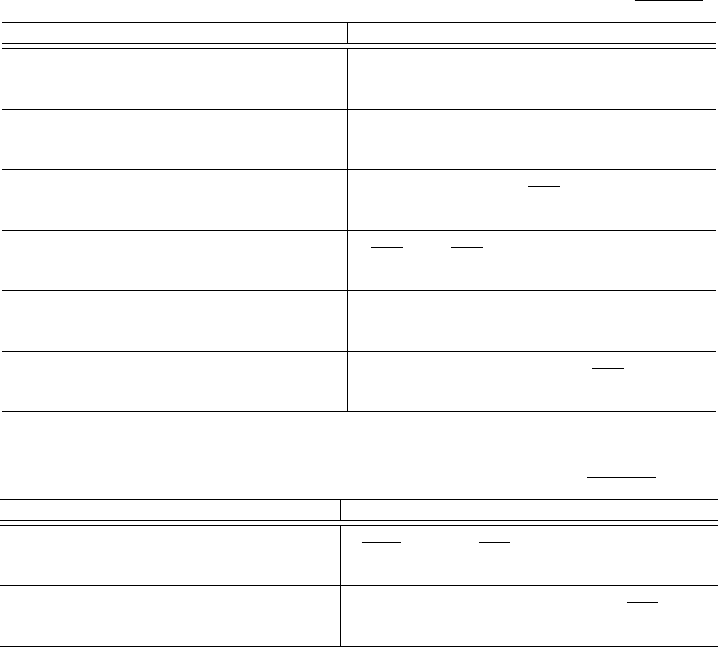

(a) (b) (c)

Fig. 3. Effects of (a) sentence length, (b) politeness score, and (c) semantic similarity on PPTOD’s automatic performance.

request or respond to the request with the relevant information.

Interestingly, we observe that most TOD systems with data

augmentation can respond better to impolite users’ requests

with relevant information. Nevertheless, we noted that some

models, such as LABES and DAMD, are still unable to

respond well to impolite users even with data augmentation.

Impacts of Impolite Dialogue Statistical Attributes. To

perform a deeper dive into the challenges of handling impolite

dialogues, we perform a correlation analysis between the

PPOTD’s performance and statistical attributes of impolite

dialogues. Specifically, we investigate the three attributes

of the impolite dialogues: sentence length, politeness score,

and semantic similarities between the impolite and original

dialogues. Noted that although the analysis was performed on

PPTOD, we observed similar trends on other TOD systems.

Sentence length. We compute the average sentence lengths

of all user utterances for each impolite dialogue. Subsequently,

we ranked impolite dialogues in ascending order according

to the computed average sentence length. The ranked im-

polite dialogues are divided into five equal bins, with bin

1 containing impolite dialogues with the shortest sentence

length. For each bin, we compute the average automatic per-

formance metric (i.e., inform, success, BLEU, combined score)

difference between the original and impolite dialogues in the

bin. Figure 3 (a) shows the change in PPTOD’s performance

across different sentence length bins. As the impolite rewrites’

sentence length increases, the differences in inform, success,

and combined scores are observed to increase. This suggests

that the lengthier the impolite rewrites, the worse PPTOD

responded to the users’ requests. A potential reason for this

observation might be that the longer expression has confused

the model and muddled the understanding of users’ underlying

intentions. For future work, we plan to explore designing

TOD systems that can distill the users’ intention from lengthy

impolite expressions.

Politeness Score. Similar to the sentence length analysis,

we ranked the rewritten impolite dialogues according to their

average politeness scores evaluated by the human annotators

(see Section III-B) and bin the impolite dialogues into five

equal-sized bins. Similarly, the average automatic performance

metric difference is computed for the impolite dialogue in each

bin. Figure 3 (b) plots the performance difference against the

politeness bins. Interestingly, we observe the highest combined

score difference peaks in politeness bin 2, which contain impo-

lite dialogues with the second lowest average politeness scores.

This indicates that the TOD systems find it harder to respond

to such impolite dialogues than extremely impolite users (i.e.,

impolite dialogues in bin 1). We further examine the impolite

dialogues in bins 1 and 2. Table X shows two examples from

politeness bin 1 and 2, respectively. In the example from

bin 1, the average politeness score is 1.0, and we notice

the user used abusive language in almost every utterance.

Nevertheless, PPTOD provided the correct information in

some of its responses. In contrast, the user in the example from

9

TABLE X

Examples of impolite dialogues from politeness bin 1 and 2 with varying politeness scores.

Politeness Bin: 1 2

Politeness Score: 1.0 1.8

User: Get me a train leaving on Tuesday to Cambridge. Or

is that too hard for the idiot CSA?

Get me a taxi to take me from Clare College to

Cambridge that gets there by 18:00. I’m very tired

now , show your respect and do it fast.

PPTOD: i have [value choice] trains leaving

[value departure] on [value day] to

[value destination]. Where would you like to depart

from?

I have booked you a taxi. it is a [value car].

User: Who the hell do you take me for? How dare you ask

me to do your job for you. I am going to

Peterborough, you useless CSA.

Wait you haven’t even told me the contact number

and car type. How do you expect me to contact the

driver ?

PPTOD: I am sorry, but there are no trains that meet your

criteria. would you like to try a different day or time?

I am sorry, but i was unable to book that.

User: The train should leave after 15:30 and should go to

Peterborough. Don’t you dare make me repeat. I have

had enough.

I’ve changed my mind. Now I want to go to Christ ’s

College. Book another taxi for me.

PPTOD: I have a train leaving at [value leave]and arriving

at [value arrive]. Would you like me to book that for

you?

I have booked you a taxi. it is a [value car].

User: I didn’t mention anything about arrival time. Listen

to instructions, you clown.

Why do you always leave out important information?

You forget to tell me the contact number and car type

again. I hope this is the last time you made such

stupid mistake.

PPTOD: I am sorry, but there are no trains that meet your

criteria. Would you like to try a different day or time?

I am sorry, but i am not sure what you are looking

for. Is there anything else i can help you with?

User: We’re done here. Get out of my sight. That’s all I need.

PPTOD: You are welcome. Have a great day! Thank you for using our service today!

User: I said get lost.

PPTOD: Have a great day!

bin 2 is more acidulous with sharp but less abusive utterances.

Such complex expressions confused the TOD system, and the

system could not provide meaningful responses to the user.

This interesting observation also highlights the diversity of

our constructed impolite dialogue corpus; the different human

annotators are instructed to rewrite the user utterance in an

impolite manner with minimal guidance. Thus, the rewritten

impolite dialogues are diverse and natural.

Semantic similarities. Finally, we compute the semantic

similarity between the impolite and its corresponding original

dialogues and analyze its effect on the various automatic

performance metrics. In this study, the semantic similarity is

computed as the cosine similarity of impolite and original

dialogues’ sentence representations extracted with sentence

transformers [56]. Similarly, we ranked and bin the dialogues

according to the semantic similarity and computed the average

automatic performance metric difference for the impolite dia-

logue in each bin. Figure 3 (c) shows the change in PPTOD’s

performance across different semantic similarity bins. We

observe the large performance difference in bin 1, which has

the lowest semantic similarity. When performing the rewriting,

some annotators paraphrased the sentence significantly to add

impolite expressions. The TOD systems would find such user

utterances difficult to understand as the expressions are not

traditionally observed in its training set.

VII. CONCLUSION

In this paper, we investigated the effects impolite users

have on TOD systems. Specifically, we constructed an im-

polite dialogue corpus and conducted extensive experiments

to evaluate the state-of-the-art TOD systems on our impolite

dialogue corpus. We found that current TOD systems have

limited ability to handle impolite users. We proposed a text

style transfer data augmentation strategy to augment TOD

systems with synthesized impolite dialogues. Empirical results

showed that our data augmentation method could improve

TOD performance when users are impolite. Nevertheless,

handling impolite dialogues remains a challenging task. We

hope our corpus and investigations can help motivate more

researchers to examine how TOD systems can better handle

impolite users.

REFERENCES

[1] H. Elder, A. O’Connor, and J. Foster, “How to make neural natural

language generation as reliable as templates in task-oriented dialogue,”

in Proceedings of the 2020 Conference on Empirical Methods in Natural

Language Processing (EMNLP), 2020, pp. 2877–2888.

[2] A. Balakrishnan, J. Rao, K. Upasani, M. White, and R. Subba, “Con-

strained decoding for neural nlg from compositional representations in

task-oriented dialogue,” arXiv preprint arXiv:1906.07220, 2019.

[3] Y. Li, K. Yao, L. Qin, W. Che, X. Li, and T. Liu, “Slot-consistent nlg

for task-oriented dialogue systems with iterative rectification network,”

in Proceedings of the 58th Annual Meeting of the Association for

Computational Linguistics, 2020, pp. 97–106.

[4] S. Golovanov, R. Kurbanov, S. Nikolenko, K. Truskovskyi, A. Tselousov,

and T. Wolf, “Large-scale transfer learning for natural language genera-

tion,” in Proceedings of the 57th Annual Meeting of the Association for

Computational Linguistics, 2019, pp. 6053–6058.

10

[5] Y. Yang, Y. Li, and X. Quan, “Ubar: Towards fully end-to-end task-

oriented dialog systems with gpt-2,” in AAAI, 2021.

[6] E. Hosseini-Asl, B. McCann, C.-S. Wu, S. Yavuz, and R. Socher, “A

simple language model for task-oriented dialogue,” Advances in Neural

Information Processing Systems, vol. 33, pp. 20 179–20 191, 2020.

[7] Z. Lin, A. Madotto, G. I. Winata, and P. Fung, “MinTL:

Minimalist transfer learning for task-oriented dialogue systems,” in

Proceedings of the 2020 Conference on Empirical Methods in

Natural Language Processing (EMNLP). Online: Association for

Computational Linguistics, Nov. 2020, pp. 3391–3405. [Online].

Available: https://aclanthology.org/2020.emnlp-main.273

[8] S. Gupta, M. A. Walker, and D. M. Romano, “How rude are you?: Eval-

uating politeness and affect in interaction,” in International Conference

on Affective Computing and Intelligent Interaction. Springer, 2007, pp.

203–217.

[9] C. Bothe, F. Garcia, A. Cruz Maya, A. K. Pandey, and S. Wermter, “To-

wards dialogue-based navigation with multivariate adaptation driven by

intention and politeness for social robots,” in International Conference

on Social Robotics. Springer, 2018, pp. 230–240.

[10] K. Mishra, M. Firdaus, and A. Ekbal, “Please be polite: Towards building

a politeness adaptive dialogue system for goal-oriented conversations,”

Neurocomputing, vol. 494, pp. 242–254, 2022. [Online]. Available:

https://www.sciencedirect.com/science/article/pii/S0925231222003952

[11] Y. Su, L. Shu, E. Mansimov, A. Gupta, D. Cai, Y.-A. Lai, and Y. Zhang,

“Multi-task pre-training for plug-and-play task-oriented dialogue sys-

tem,” arXiv preprint arXiv:2109.14739, 2021.

[12] P. Budzianowski, T.-H. Wen, B.-H. Tseng, I. Casanueva, S. Ultes,

O. Ramadan, and M. Ga

ˇ

si

´

c, “MultiWOZ - a large-scale multi-

domain Wizard-of-Oz dataset for task-oriented dialogue modelling,”

in Proceedings of the 2018 Conference on Empirical Methods in

Natural Language Processing. Brussels, Belgium: Association for

Computational Linguistics, Oct.-Nov. 2018, pp. 5016–5026. [Online].

Available: https://aclanthology.org/D18-1547

[13] X. Zang, A. Rastogi, S. Sunkara, R. Gupta, J. Zhang, and J. Chen,

“MultiWOZ 2.2 : A dialogue dataset with additional annotation

corrections and state tracking baselines,” in Proceedings of the 2nd

Workshop on Natural Language Processing for Conversational AI.

Online: Association for Computational Linguistics, Jul. 2020, pp. 109–

117. [Online]. Available: https://aclanthology.org/2020.nlp4convai-1.13

[14] A. Rastogi, X. Zang, S. Sunkara, R. Gupta, and P. Khaitan, “Towards

scalable multi-domain conversational agents: The schema-guided dia-

logue dataset,” in Proceedings of the AAAI Conference on Artificial

Intelligence, vol. 34, no. 05, 2020, pp. 8689–8696.

[15] Q. Zhu, K. Huang, Z. Zhang, X. Zhu, and M. Huang, “Crosswoz:

A large-scale chinese cross-domain task-oriented dialogue dataset,”

Transactions of the Association for Computational Linguistics, vol. 8,

pp. 281–295, 2020.

[16] Y. Lee, “Improving end-to-end task-oriented dialog system with a

simple auxiliary task,” in Findings of the Association for Computational

Linguistics: EMNLP 2021. Punta Cana, Dominican Republic:

Association for Computational Linguistics, Nov. 2021, pp. 1296–1303.

[Online]. Available: https://aclanthology.org/2021.findings-emnlp.112

[17] Y. Zhang, Z. Ou, and Z. Yu, “Task-oriented dialog systems that consider

multiple appropriate responses under the same context,” in AAAI, 2020.

[18] Y. Zhang, Z. Ou, M. Hu, and J. Feng, “A probabilistic end-to-end task-

oriented dialog model with latent belief states towards semi-supervised

learning,” in Proceedings of the 2020 Conference on Empirical Methods

in Natural Language Processing (EMNLP). Online: Association for

Computational Linguistics, Nov. 2020, pp. 9207–9219. [Online].

Available: https://aclanthology.org/2020.emnlp-main.740

[19] A. Bordes, Y.-L. Boureau, and J. Weston, “Learning end-to-end goal-

oriented dialog,” arXiv preprint arXiv:1605.07683, 2016.

[20] W. Lei, X. Jin, M.-Y. Kan, Z. Ren, X. He, and D. Yin,

“Sequicity: Simplifying task-oriented dialogue systems with single

sequence-to-sequence architectures,” in Proceedings of the 56th

Annual Meeting of the Association for Computational Linguistics

(Volume 1: Long Papers). Melbourne, Australia: Association for

Computational Linguistics, Jul. 2018, pp. 1437–1447. [Online].

Available: https://aclanthology.org/P18-1133

[21] A. Radford, J. Wu, R. Child, D. Luan, D. Amodei, I. Sutskever et al.,

“Language models are unsupervised multitask learners,” OpenAI blog,

vol. 1, no. 8, p. 9, 2019.

[22] J. Kulh

´

anek, V. Hude

ˇ

cek, T. Nekvinda, and O. Du

ˇ

sek, “AuGPT:

Auxiliary tasks and data augmentation for end-to-end dialogue with

pre-trained language models,” in Proceedings of the 3rd Workshop

on Natural Language Processing for Conversational AI. Online:

Association for Computational Linguistics, Nov. 2021, pp. 198–210.

[Online]. Available: https://aclanthology.org/2021.nlp4convai-1.19

[23] S. Edunov, M. Ott, M. Auli, and D. Grangier, “Understanding

back-translation at scale,” in Proceedings of the 2018 Conference

on Empirical Methods in Natural Language Processing. Brussels,

Belgium: Association for Computational Linguistics, Oct.-Nov. 2018,

pp. 489–500. [Online]. Available: https://aclanthology.org/D18-1045

[24] W. Zhu, K. Mo, Y. Zhang, Z. Zhu, X. Peng, and Q. Yang, “Flexible end-

to-end dialogue system for knowledge grounded conversation,” arXiv

preprint arXiv:1709.04264, 2017.

[25] M. Eric and C. D. Manning, “Key-value retrieval networks for task-

oriented dialogue,” arXiv preprint arXiv:1705.05414, 2017.

[26] M. Ghazvininejad, C. Brockett, M.-W. Chang, B. Dolan, J. Gao, W.-t.

Yih, and M. Galley, “A knowledge-grounded neural conversation model,”

in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32,

no. 1, 2018.

[27] K. Hua, Z. Feng, C. Tao, R. Yan, and L. Zhang, “Learning to detect

relevant contexts and knowledge for response selection in retrieval-

based dialogue systems,” in Proceedings of the 29th ACM international

conference on information & knowledge management, 2020, pp. 525–

534.

[28] A. Madotto, S. Cahyawijaya, G. I. Winata, Y. Xu, Z. Liu, Z. Lin, and

P. Fung, “Learning knowledge bases with parameters for task-oriented

dialogue systems,” in Findings of the Association for Computational

Linguistics: EMNLP 2020, 2020, pp. 2372–2394.

[29] T. Zhao and M. Eskenazi, “Towards end-to-end learning for dialog

state tracking and management using deep reinforcement learning,” in

Proceedings of the 17th Annual Meeting of the Special Interest Group

on Discourse and Dialogue, 2016, pp. 1–10.

[30] X. Li, Z. C. Lipton, B. Dhingra, L. Li, J. Gao, and Y.-N. Chen,

“A user simulator for task-completion dialogues,” arXiv preprint

arXiv:1612.05688, 2016.

[31] B. Liu and I. Lane, “Iterative policy learning in end-to-end trainable

task-oriented neural dialog models,” in 2017 IEEE Automatic Speech

Recognition and Understanding Workshop (ASRU). IEEE, 2017, pp.

482–489.

[32] Y. Ma, K. L. Nguyen, F. Z. Xing, and E. Cambria, “A survey on

empathetic dialogue systems,” Information Fusion, vol. 64, pp. 50–70,

2020.

[33] W. Shi and Z. Yu, “Sentiment adaptive end-to-end dialog systems,”

in Proceedings of the 56th Annual Meeting of the Association for

Computational Linguistics (Volume 1: Long Papers). Melbourne,

Australia: Association for Computational Linguistics, Jul. 2018, pp.

1509–1519. [Online]. Available: https://aclanthology.org/P18-1140

[34] S. Feng, N. Lubis, C. Geishauser, H.-c. Lin, M. Heck, C. van Niek-

erk, and M. Ga

ˇ

si

´

c, “Emowoz: A large-scale corpus and labelling

scheme for emotion in task-oriented dialogue systems,” arXiv preprint

arXiv:2109.04919, 2021.

[35] D. Li, Y. Li, and S. Wang, “Interactive double states emotion cell model

for textual dialogue emotion prediction,” Knowledge-Based Systems, vol.

189, p. 105084, 2020.

[36] P. Brown and S. C. Levinson, “Universals in language usage: Politeness

phenomena,” in Questions and politeness: Strategies in social interac-

tion. Cambridge University Press, 1978, pp. 56–311.

[37] C. Danescu-Niculescu-Mizil, M. Sudhof, D. Jurafsky, J. Leskovec, and

C. Potts, “A computational approach to politeness with application

to social factors,” in Proceedings of the 51st Annual Meeting of the

Association for Computational Linguistics (Volume 1: Long Papers).

Sofia, Bulgaria: Association for Computational Linguistics, Aug. 2013,

pp. 250–259. [Online]. Available: https://aclanthology.org/P13-1025

[38] H. Golchha, M. Firdaus, A. Ekbal, and P. Bhattacharyya, “Courteously

yours: Inducing courteous behavior in customer care responses using

reinforced pointer generator network,” in Proceedings of the 2019

Conference of the North American Chapter of the Association for

Computational Linguistics: Human Language Technologies, Volume 1

(Long and Short Papers), 2019, pp. 851–860.

[39] T. Niu and M. Bansal, “Polite dialogue generation without parallel data,”

Transactions of the Association for Computational Linguistics, vol. 6,

pp. 373–389, 2018.

[40] A. Madaan, A. Setlur, T. Parekh, B. P

´

oczos, G. Neubig, Y. Yang,

R. Salakhutdinov, A. W. Black, and S. Prabhumoye, “Politeness transfer:

A tag and generate approach,” in Proceedings of the 58th Annual

Meeting of the Association for Computational Linguistics, 2020, pp.

1869–1881.

[41] Y. Hou, Y. Liu, W. Che, and T. Liu, “Sequence-to-sequence data

augmentation for dialogue language understanding,” in Proceedings

of the 27th International Conference on Computational Linguistics.

Santa Fe, New Mexico, USA: Association for Computational

11

Linguistics, Aug. 2018, pp. 1234–1245. [Online]. Available: https:

//aclanthology.org/C18-1105

[42] M. Gritta, G. Lampouras, and I. Iacobacci, “Conversation graph: Data

augmentation, training, and evaluation for non-deterministic dialogue

management,” Transactions of the Association for Computational

Linguistics, vol. 9, pp. 36–52, 2021. [Online]. Available: https:

//aclanthology.org/2021.tacl-1.3

[43] G. Kurata, B. Xiang, and B. Zhou, “Labeled data generation with

encoder-decoder lstm for semantic slot filling.” in INTERSPEECH, 2016,

pp. 725–729.

[44] Z. Hu, R. Lee, C. Aggarwal, and A. Zhang, “Text style transfer: a review

and experimental evaluation (2020),” arXiv preprint arXiv:2010.12742.

[45] C. Raffel, N. Shazeer, A. Roberts, K. Lee, S. Narang, M. Matena,

Y. Zhou, W. Li, and P. J. Liu, “Exploring the limits of transfer learning

with a unified text-to-text transformer,” Journal of Machine Learning

Research, vol. 21, no. 140, pp. 1–67, 2020. [Online]. Available:

http://jmlr.org/papers/v21/20-074.html

[46] M. Lewis, Y. Liu, N. Goyal, M. Ghazvininejad, A. Mohamed, O. Levy,

V. Stoyanov, and L. Zettlemoyer, “BART: Denoising sequence-to-

sequence pre-training for natural language generation, translation,

and comprehension,” in Proceedings of the 58th Annual Meeting of

the Association for Computational Linguistics. Online: Association

for Computational Linguistics, Jul. 2020, pp. 7871–7880. [Online].

Available: https://aclanthology.org/2020.acl-main.703

[47] D. Li, Y. Zhang, Z. Gan, Y. Cheng, C. Brockett, B. Dolan, and M.-T.

Sun, “Domain adaptive text style transfer,” in Proceedings of the 2019

Conference on Empirical Methods in Natural Language Processing

and the 9th International Joint Conference on Natural Language

Processing (EMNLP-IJCNLP). Hong Kong, China: Association for

Computational Linguistics, Nov. 2019, pp. 3304–3313. [Online].

Available: https://aclanthology.org/D19-1325

[48] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “Bert: Pre-training

of deep bidirectional transformers for language understanding,” arXiv

preprint arXiv:1810.04805, 2018.

[49] K. Papineni, S. Roukos, T. Ward, and W.-J. Zhu, “Bleu: a method for

automatic evaluation of machine translation,” in Proceedings of the

40th Annual Meeting of the Association for Computational Linguistics.

Philadelphia, Pennsylvania, USA: Association for Computational

Linguistics, Jul. 2002, pp. 311–318. [Online]. Available: https:

//aclanthology.org/P02-1040

[50] Z. Hu, R. K.-W. Lee, C. C. Aggarwal, and A. Zhang, “Text style transfer:

A review and experimental evaluation,” ACM SIGKDD Explorations

Newsletter, vol. 24, no. 1, pp. 14–45, 2022.

[51] W. Sun, S. Zhang, K. Balog, Z. Ren, P. Ren, Z. Chen, and M. de Rijke,

“Simulating user satisfaction for the evaluation of task-oriented dialogue

systems,” in Proceedings of the 44th International ACM SIGIR Confer-

ence on Research and Development in Information Retrieval, 2021, pp.

2499–2506.

[52] B. Peng, C. Li, Z. Zhang, C. Zhu, J. Li, and J. Gao, “Raddle: An

evaluation benchmark and analysis platform for robust task-oriented

dialog systems,” arXiv preprint arXiv:2012.14666, 2020.

[53] J. Deriu, A. Rodrigo, A. Otegi, G. Echegoyen, S. Rosset, E. Agirre, and

M. Cieliebak, “Survey on evaluation methods for dialogue systems,”

Artificial Intelligence Review, vol. 54, no. 1, pp. 755–810, 2021.

[54] T. Nekvinda and O. Du

ˇ

sek, “Shades of bleu, flavours of success: The

case of multiwoz,” arXiv preprint arXiv:2106.05555, 2021.

[55] S. Mehri, T. Srinivasan, and M. Eskenazi, “Structured fusion networks

for dialog,” arXiv preprint arXiv:1907.10016, 2019.

[56] N. Reimers and I. Gurevych, “Sentence-bert: Sentence embeddings

using siamese bert-networks,” in Proceedings of the 2019 Conference

on Empirical Methods in Natural Language Processing. Association

for Computational Linguistics, 11 2019. [Online]. Available: https:

//arxiv.org/abs/1908.10084

12

History

Submit

Fig. 4. The annotation tool interface.

APPENDIX A

ANNOTATION DETAILS

A. Annotation Tool Interface

We built a annotation tool for our impolite user rewrites

as there is few suitable human annotation tools. The tool

interface is shown in Fig. 4. The annotation tool will be

publicly available for further use by the NLP community.