NBER WORKING PAPER SERIES

SITE SELECTION BIAS IN PROGRAM EVALUATION

Hunt Allcott

Working Paper 18373

http://www.nber.org/papers/w18373

NATIONAL BUREAU OF ECONOMIC RESEARCH

1050 Massachusetts Avenue

Cambridge, MA 02138

September 2012

This paper is a substantial revision of a manuscript titled "External Validity and Partner Selection

Bias" on which Sendhil Mullainathan was a co-author. Although he is no longer a co-author, this

project has benefited enormously from his insights. I thank Josh Angrist, Amitabh Chandra, Lucas

Davis, Kyle Dropp, Meredith Fowlie, Xavier Gine, Chuck Goldman, Matt Harding, Joe Hotz, Guido

Imbens, Larry Katz, Chris Knittel, Dan Levy, Jens Ludwig, Konrad Menzel, Emily Oster, Rohini

Pande, Todd Rogers, Piyush Tantia, Ed Vytlacil, Heidi Williams, and seminar participants at the ASSA

meetings, Berkeley, Columbia, Harvard, MIT, NBER Labor Studies, NBER Energy and Environmental

Economics, NEUDC, the UCSB/UCLA Conference on Field Experiments, and the World Bank for

insights and helpful advice. Thanks also to Tyler Curtis, Marc Laitin, Alex Laskey, Alessandro Orfei,

Nate Srinivas, Dan Yates, and others at Opower for fruitful discussions. Christina Larkin provided

timely research assistance. The views expressed herein are those of the authors and do not necessarily

reflect the views of the National Bureau of Economic Research.

NBER working papers are circulated for discussion and comment purposes. They have not been peer-

reviewed or been subject to the review by the NBER Board of Directors that accompanies official

NBER publications.

© 2012 by Hunt Allcott. All rights reserved. Short sections of text, not to exceed two paragraphs, may

be quoted without explicit permission provided that full credit, including © notice, is given to the source.

Site Selection Bias in Program Evaluation

Hunt Allcott

NBER Working Paper No. 18373

September 2012, Revised March 2014

JEL No. C93,D12,L94,O12,Q41

ABSTRACT

“Site selection bias” occurs when the probability that partners adopt or evaluate a program is correlated

with treatment effects. I test for site selection bias in the context of the Opower energy conservation

programs, using 111 randomized control trials (RCTs) involving 8.6 million households across the

United States. Predictions based on rich microdata from the first ten replications substantially overstate

efficacy in the next 101 sites. There is evidence of two positive selection mechanisms. First, local

populations with stronger preferences for environmental conservation both encourage utilities to adopt

the program and are more responsive to the treatment. Second, program managers initially target treatment

at the most responsive consumer sub-populations, meaning that efficacy drops when utilities expand

the program. While it may be optimal to initially target an intervention toward the most responsive

populations, these results show how analysts can be systematically biased when extrapolating experimental

results, even after many replications. I augment the Opower results by showing that microfinance institutions

(MFIs) that run RCTs differ from the global population of MFIs and that hospitals that host clinical

trials differ from the national population of hospitals.

Hunt Allcott

Department of Economics

New York University

19 W. 4th Street, 6th Floor

New York, NY 10012

and NBER

1 Introduction

Program evaluation using randomized control trials (RCTs) has long been an important part of

economics, from the Negative Income Tax experiments to the RAND health insurance experiment,

Moving to Opportunity, the Job Training Partnership Act, and a wave of recent RCTs in devel-

opment, health, labor economics, and other fields. Program evaluation is often used to inform a

policy decision: should a treatment be implemented in some “target” population? Typically, an

evaluation is carried out at one or more sample sites, and the results are generalized to make an

implementation decision in a different and often larger set of target sites. This raises questions of

external validity: how well do parameter estimates generalize across sites?

When generalizing empirical results, we either implicitly or explicitly make an assumption I call

external unconfoundedness: that there are no unobservables that moderate the treatment effect and

differ between sample and target. As formalized by Hotz, Imbens, and Mortimer (2005) and Hotz,

Imbens, and Klerman (2006), this type of assumption mirrors the unconfoundedness assumption

required for internal validity (Rosenbaum and Rubin 1983). When we have results from only one

site, this assumption amounts to assuming away the possibility of unexplained site-level treatment

effect heterogeneity. Because this is often unrealistic, we value replication in additional sites. After

enough replications, external unconfoundedness implies only that the distribution of treatment

effects in sample sites can predict the distribution of effects in target sites. Put simply, if an

intervention works well in enough different trials, we might advocate that it be scaled up. Formally,

this logic requires that sample sites are as good as randomly selected from the population of target

sites.
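One way to write the external unconfoundedness assumption compactly is sketched below. The notation is assumed for illustration and is not taken from this section: $D_i$ indicates whether unit $i$ belongs to a sample site, $X_i$ collects observed covariates, and $\tau_i$ is the unit's treatment effect.

```latex
% Assumed notation (not defined in this section):
%   D_i = 1 if unit i belongs to a sample site, 0 if it belongs only to a target site
%   X_i = observed covariates;  \tau_i = Y_i(1) - Y_i(0) is the unit's treatment effect
\[
  \tau_i \;\perp\!\!\!\perp\; D_i \,\mid\, X_i
  \quad\Longrightarrow\quad
  \mathbb{E}[\tau_i \mid X_i = x,\, D_i = 0] \;=\; \mathbb{E}[\tau_i \mid X_i = x,\, D_i = 1].
\]
```

Under this condition, conditional average effects estimated in sample sites carry over to target sites. Site selection bias corresponds to the case where the equality fails because an unobserved moderator differs between the two groups.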

In practice, there are many reasons why sites are selected for empirical study. For example,

because RCTs require an implementing partner with managerial ability and operational expertise,

the set of actual partners may be able to run more effective programs than the typical potential

partner. As another example, partners that are already running programs that they know are

effective are more likely to be open to independent impact estimates (Pritchett 2002). Both of these

mechanisms would cause a positive site selection bias: treatment effects from sample sites would

be larger than in the full set of targets. Alternatively, partners that are particularly innovative and

willing to test new programs may also be running many other effective programs in the same area.

If there are diminishing returns, the new program with an actual partner might have lower impact

than with a typical potential partner. This would cause negative site selection bias. Site selection

bias implies that even with a large number of internally valid impact estimates, policymakers could

still draw systematically biased inference about a program’s impact at full scale.

While site selection bias is both intuitive and potentially important, there is little empirical

evidence on this issue or the mechanisms through which it might act. The reason is simple: since

this type of selection operates at the level of the site instead of the individual unit, one needs a


statistically large sample of sites with internally valid estimates of the effect of the same treatment.

Then, one must define a population of potential partner sites and somehow infer treatment effects

in sites where evaluations have not yet been carried out. Given the cost of RCTs, it is unusual for

the same intervention to be rigorously evaluated at more than a small handful of sites. By contrast,

as in LaLonde (1986), Dehejia and Wahba (1999), Heckman, Ichimura, Smith, and Todd (1998),

Smith and Todd (2004), and many other studies, providing evidence on individual-level selection

bias and an estimator’s internal validity is much less onerous, as it simply requires a large sample

of individuals.

The Opower energy conservation program provides an exceptional opportunity to study the

site selection process. The treatment is to mail residential electricity consumers “Home Energy

Reports” that provide energy conservation tips and compare their energy use to that of their

neighbors. Electric and natural gas utilities have partnered with Opower largely because the program

helps to comply with state-level energy conservation mandates. As of February 2013, the program

had been implemented using 111 randomized control trials involving 8.6 million households at 58

utilities across the United States.

This paper’s organizing question is, what would be the effects if the Opower program were scaled

nationwide? Aside from providing a case study of an important methodological issue, this out-of-

sample prediction problem is also particularly policy-relevant. In recent years, “behavioral” energy

efficiency programs have received increasing attention as an alternative to traditional policies such

as subsidies and standards for energy efficient capital stock. While Opower’s home energy reports

are perhaps the most prominent example of such interventions, the American Council for an Energy

Efficient Economy reports that 281 different behavior-based programs were run in the U.S. between

2008 and 2013 (Mazur-Stommen and Farley 2013). Consultancy McKinsey & Co. recently released

a study predicting “immense” potential for behavioral energy efficiency in the U.S., with potential

savings from Opower and many other opportunities amounting to 16 to 20 percent of current

residential energy consumption (Heck and Tai 2013). Policymakers use such predictions to help

determine the stringency of energy conservation mandates as part of an effort to reduce energy use

externalities and other inefficiencies that may increase energy use.

1

1

ENERNOC (2013), KEMA (2013), and Quackenbush (2013) are examples of state-level energy efficiency potential

assessments that include predictions for behavioral energy efficiency programs based partially on results from RCTs in

pilot locations. The studies were commissioned by utilities and state public utilities commissions as part of the process

of setting Energy Efficiency Resource Standards. Allcott and Greenstone (2012) discuss the economic rationale for

these types of policies.

I begin by using microdata from Opower’s first ten replications to predict aggregate nationwide

effects. This is a highly promising opportunity for out-of-sample prediction: there are very

large samples, with 512,000 households in treatment and control, ten different replications spread

throughout the country, internally-valid estimates, and a useful set of individual-level covariates

to adjust for differences between sample and target populations. Using the non-parametric test

of treatment effect heterogeneity introduced by Crump, Hotz, Imbens, and Mitnik (2008), I show

that treatment effects are larger for households that use more electricity and also vary in intuitive

ways conditional on four other features of a home’s physical capital stock. I then use two standard

“off-the-shelf” approaches to extrapolation: linear prediction and the re-weighting procedure in-

troduced by Hellerstein and Imbens (1999). Results from these first ten replications predict retail

electricity cost savings of about 1.7 percent, or $2.3 billion, in the first year of a nationally-scaled

program.
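The linear-prediction approach to extrapolation can be illustrated with a minimal sketch on synthetic data. Everything below is an assumption made for illustration: the single covariate (a hypothetical baseline-usage measure), the data-generating process, and the function name `linear_extrapolation` are not the paper's specification or data.

```python
import numpy as np

def linear_extrapolation(y, t, x, x_target_mean):
    """Predict a target-population average treatment effect from sample data.

    Fits y = a + b*t + c*xc + d*(t*xc) by OLS, where xc is x centered at the
    sample mean, then evaluates the effect at the target covariate mean:
    b + d * (x_target_mean - sample mean of x).
    """
    x_bar = x.mean()
    xc = x - x_bar
    design = np.column_stack([np.ones_like(y), t, xc, t * xc])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    sample_ate = coef[1]
    target_ate = coef[1] + coef[3] * (x_target_mean - x_bar)
    return sample_ate, target_ate

# Synthetic sample: the effect is proportional to x, so higher users save more.
rng = np.random.default_rng(0)
n = 20000
x = rng.normal(1.0, 0.3, n)              # hypothetical baseline usage
t = rng.integers(0, 2, n).astype(float)  # randomized treatment indicator
y = x + t * (-0.02 * x) + rng.normal(0.0, 0.05, n)

# Hypothetical target population with lower mean usage than the sample.
sample_ate, target_ate = linear_extrapolation(y, t, x, x_target_mean=0.8)
print(f"sample ATE: {sample_ate:.4f}, predicted target ATE: {target_ate:.4f}")
```

In this sketch the predicted target effect is smaller in magnitude than the sample effect only because the observed covariate differs between sample and target. Adjustments of this kind cannot correct for moderators that are unobserved.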

Aside from the microdata, I also have Opower’s “metadata”: impact estimates and standard

errors from all 111 RCTs at all 58 different utility partners that began before February 2013. As an

“in-sample” test of external unconfoundedness, I use microdata from the initial ten sites to predict

first-year effects at the 101 later sites. The microdata over-predict efficacy by approximately 0.5

percentage points, or $690 million worth of retail electricity. In other words, early sites were strongly

positively selected through mechanisms associated with the treatment effect.

I then focus on the metadata to further document site selection bias and provide suggestive evi-

dence on underlying mechanisms. I use the “site selection probability,” or the conditional probabil-

ity that a utility partners with Opower. There is a close analogy between this site-level propensity

score and the familiar individual-level propensity score, but the “dimension-reduction” property of

propensity scores is particularly useful in meta-analysis with a limited number of site-level observa-

tions. The site selection probability allows a straightforward test of site selection bias: I show that

within the 111 existing RCTs, site selection probabilities are positively associated with treatment

effects. While this association is documented using utility-level observables, I also show that it is

“selection on unobservables” in the sense that it would not have been predicted using the microdata

from the first ten sites.
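The logic of this test can be sketched on synthetic data: estimate a site selection probability for every potential partner, then check, among selected sites only, whether estimated probabilities are associated with treatment effects. The covariate, coefficients, and sample sizes below are hypothetical, and the hand-written logit is a stand-in for whatever estimator one would actually use.

```python
import numpy as np

def fit_logit(X, d, n_iter=25):
    """Fit a logit by Newton-Raphson; X must include a constant column."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        w = p * (1.0 - p)
        grad = X.T @ (d - p)
        hess = X.T @ (X * w[:, None])
        beta = beta + np.linalg.solve(hess, grad)
    return beta

rng = np.random.default_rng(1)
n_sites = 800
z = rng.normal(size=n_sites)  # hypothetical site-level covariate
X = np.column_stack([np.ones(n_sites), z])

# Assumed selection rule: sites with higher z are more likely to partner.
true_p = 1.0 / (1.0 + np.exp(-(-2.0 + 1.0 * z)))
selected = (rng.random(n_sites) < true_p).astype(float)

# Assumed site-level effects (percent savings) that also increase with z.
effect = 1.5 + 0.3 * z + rng.normal(0.0, 0.2, n_sites)

# Step 1: site selection probability for every potential partner.
beta_hat = fit_logit(X, selected)
p_hat = 1.0 / (1.0 + np.exp(-X @ beta_hat))

# Step 2: among selected sites, regress effects on the selection probability.
mask = selected == 1.0
A = np.column_stack([np.ones(int(mask.sum())), p_hat[mask]])
(intercept, slope), *_ = np.linalg.lstsq(A, effect[mask], rcond=None)
print(f"slope of effect on selection probability: {slope:.3f}")
```

A positive slope in the second step is the signature of positive site selection in this toy setup. A real application would also need standard errors that account for the estimated first stage and for the precision of each site's effect estimate.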

I also provide suggestive evidence on four specific mechanisms that could both induce a site

to be included in the sample and moderate the treatment effect. To do this, I define “mechanism

scores,” which are site selection probabilities constructed only with a subset of site-level covariates

that proxy for the mechanism. I then test whether these four mechanism scores are conditionally

associated with treatment effects. There is statistically and economically significant evidence of

selection through what one might call “population preferences”: consumers in areas with high

income, high education, and stronger preferences for environmental conservation both encourage

utilities to adopt the Opower program and are more responsive to the intervention once it is

implemented.

Along with using the binary selection decision, another way to study site selection bias is to

exploit the timing of selection. The same observables that predict the binary selection decision from

the population of potential partner utilities also predict earlier selection within the set of actual

partners. A simple scatterplot of first-year treatment effects against site start date shows clearly

declining efficacy for later sites. This downward trend exists both between utilities that partner


earlier vs. later and within utility at earlier vs. later customer sub-populations. A substantial

portion of the within-utility trend is explained by the fact that a utility’s earlier sites involve

higher-usage consumers who are more responsive. Typically, if the program works well in an initial

customer sub-population, the utility will contract with Opower to implement at additional sites

within its service territory. Conditioning on site-level observables in the form of the mechanism

scores explains just over one-quarter of the between-utility trend. The remaining portion reflects

selection mechanisms that are unexplained even with data from 111 replications.

These results reflect successful targeting by Opower and its partners: beginning with the most

responsive populations maximizes cost effectiveness if there is limited scaling capacity or uncer-

tainty over efficacy. Thus, the paper does not argue that site selection reflects sub-optimal behavior:

just as individual-level endogenous selection into a job training program reflects rational choices

by potential participants, site-level endogenous selection also reflects rational choices by potential

partners. Instead, the point of the paper is that site-level endogenous selection can systematically

bias inference and policy decisions, just as individual-level endogenous selection can. Furthermore,

I show how site-level selection mechanisms can be systematically categorized and quantified, just as

we have become familiar with labeling and measuring individual-level mechanisms such as ability

bias. This paper also does not argue that RCTs are not useful and important. In the Opower con-

text, this would be particularly untrue: as shown by Allcott (2011), non-experimental approaches

to evaluating Opower programs give highly misleading estimates.

Another incorrect conclusion would be that behavioral programs will necessarily not be cost

effective at additional sites. In reality, statistical predictions of efficacy at new sites are imprecise,

and there are a good number of recent Opower programs that have performed as well as the first

ten. Furthermore, although for this analysis it is more natural to extrapolate when measuring

effects as a percent of counterfactual usage, cost effectiveness depends on the absolute quantity of

electricity conserved. Because utilities in higher-usage regions such as the southeastern U.S. have

been less likely to partner with Opower, the program might actually have better cost effectiveness

if scaled nationwide even if there were smaller savings in percent terms.

2

2

Opower also has its own prediction model. Their estimates are not comparable to mine, because while I focus on

effects at full scale (“technical potential”), Opower reports effects if scaled only to a smaller group of households where

the intervention is cost effective (“economic potential”). I do not calculate economic potential because it increases

complexity while adding nothing to the discussion of site selection bias. However, because their methodology is

partially based on the earlier working paper version of this study, our results would be unlikely to conflict if we

did calculate comparable statistics.

Although the Opower experiments provide a powerful case study, they are only one example in

one context. An appendix to the paper proposes a set of site selection mechanisms that may apply

more generally. The appendix also provides brief suggestive evidence from two other domains on

how RCT sites differ systematically from policy-relevant target sites. First, I study microfinance

institutions (MFIs) that have partnered to carry out randomized trials with three academic groups:

the Jameel Poverty Action Lab, Innovations for Poverty Action, and the Financial Access Initiative.

I show that partner MFIs differ from the global population of MFIs on characteristics that

might moderate effects of various interventions, including correlates of default rates, organizational

structure, and monitoring and implementation ability. Second, I study hospitals that are the sites

of clinical trials for new drugs and surgical procedures. I show that clinical trial sites tend to be

larger and more experienced in surgical procedures, to offer a wider range of technologies and patient

services, and to be generally higher-quality than the average US hospital. Because both microfinance

RCTs and clinical trials test a variety of different interventions, it is not possible to correlate selec-

tion probability with consistently-defined treatment effects as one can for the Opower experiments.

However, these additional examples suggest that site selection bias is probably not unique to energy

conservation RCTs.

3

The paper proceeds as follows. The remainder of this section discusses related literature. Section

2 introduces the Opower interventions and data. Section 3 carries out extrapolation using microdata

from the first ten replications. Section 4 studies site selection bias using the metadata. Section 5

concludes by suggesting several steps that can partially address site selection bias when designing

and analyzing RCTs. Appendix II broadens the discussion to contexts other than Opower, providing

additional evidence from microfinance and clinical trials.

1.1 Related Literature

There are several areas of related literature. The Job Training Partnership Act of 1982 (JTPA)

provides closely-related evidence; see Bloom et al. (1993), Doolittle and Traeger (1990), and others.

The JTPA initiated job training programs at 600 sites, of which 200 were approached to do RCTs

and 16 eventually agreed. Hotz (1992), Heckman (1992), Heckman and Vytlacil (2007b), and

others discuss the fact that these sites were non-randomly selected and propose that this could lead

experimental estimates to differ from the true nationwide effects. Non-random site selection is part

of what Heckman (1992) calls “randomization bias,” although his discussion focuses also on other

issues, such as how operational demands of RCTs could cause program performance to decline and

how the need for control groups requires expansion of the pool of eligible individuals.

While arguing that randomization bias could be important, Heckman (1992) writes that the

evidence from JTPA is “indirect” and “hardly decisive.” Furthermore, given average sample sizes

of 270 people per site, Heckman and Smith (1997) show that it is not even possible to reject that

the JTPA treatment effects are homogeneous across sites. With much larger samples of microdata

and many more sites, the Opower experiments allow a clearer analysis of ideas proposed in the

discussion of JTPA.

3

This approach of comparing RCT partner sites to non-partner sites has also been implemented by Blair, Iyengar,

and Shapiro (2013), who show that field experiments in economics and political science are more likely to be carried

out in wealthy, democratic countries that spend more on citizen welfare. The MFI results are related to Brigham et

al. (2013), who experimentally test the willingness of MFIs to partner on RCTs.


This paper is also related to other multi-site program evaluations in a variety of domains. Among

others, this includes Abdulkadiroglu, Angrist, Dynarski, Kane, and Pathak (2009), Angrist, Pathak,

and Walters (2011), Hoxby and Rockoff (2004), and Walters (2013) on charter schools, as well as a

growing number of multi-site development interventions such as Banerjee, Cole, Duflo, and Linden

(2007) and the pair of de-worming studies by Miguel and Kremer (2004) and Bobonis, Miguel,

and Sharma (2006). Dehejia (2003), Hotz, Imbens, and Mortimer (2005), and Hotz, Imbens, and

Klerman (2006) study the WIN and GAIN job training programs, focusing on methodological issues

related to extrapolation across sites. In the context of this growing interest in multi-site evaluations,

this paper adds the insight that impact estimates extrapolated even from many sample sites may

be systematically different from true target effects due to site selection bias.

There is also a theoretical and empirical literature on selection of individual units into random-

ized trials, including Belot and James (2013), Gautier and van der Klaauw (2012), Gross, Mallory,

Heiat, and Krumholz (2002), Heckman and Vytlacil (2007b), Kline and Tamer (2011), Malani

(2008), Manski (1996), Steg et al. (2007), and others. Site selection bias is mathematically similar,

except that the agents deciding whether or not to select into the sample are not the individuals

themselves. Instead, they are organizations, businesses, or government agencies that control sets

of individuals to be potentially treated. This implies a different set of economic selection mecha-

nisms applicable to potential partner organizations instead of potentially-treated individuals. Also

important but less directly related is the work on selective trials (Chassang, Padro I Miquel, and

Snowberg 2012).

Most immediately related are studies of the Opower programs. Independent of the discussion

of site selection bias, this paper is an important contribution in that it is the only comprehensive

meta-analysis of these programs. Costa and Kahn (2013) show that correlates of environmentalism

moderate treatment effects at one site. This is broadly consistent with one of the key site selection

mechanisms I discuss. Allcott and Rogers (2014) study long term effects at the three longest-

running sites, and Ayres, Raseman, and Shih (2013) also evaluate two early programs. A large

number of consulting reports evaluate individual programs for regulatory accounting purposes,

including Integral Analytics (2012), KEMA (2012), Opinion Dynamics (2012), Perry and Woehleke

(2013), and Violette, Provencher, and Klos (2009). Allcott (2011) presents effects from the first ten

sites. The academic studies of Opower’s early programs have more than 500 citations on Google

Scholar, many of which point to them as evidence that behavioral energy efficiency interventions

have large effects while giving little attention to the site selection process. This paper argues that

extrapolating results beyond these early samples should be done carefully.

Nolan et al. (2008) and Schultz et al. (2007) provided the academic “proof of concept” for

the Opower program. Although their experiment is not part of my meta-analysis, it is strikingly

consistent with site selection bias. Their treatment was to hand-deliver door-hangers with energy

use neighbor comparisons to about 300 homes in a wealthy California suburb. The treatment effects


are three to six times larger than those of the first ten Opower programs.

Finally, there is a recent discussion of broader issues related to external validity, including

Angrist and Pischke (2010), Cartwright (2007), Deaton (2010a, 2010b), Duflo, Glennerster, and

Kremer (2007), Imbens (2010), Ludwig, Kling, and Mullainathan (2011), Manski (2011), Rodrik

(2008), Rothwell (2005), and Worrall (2007). This literature discusses a series of threats to external

validity, including variation in populations and economic environments, general equilibrium effects,

“gold plating,” the use of short-term measurement and surrogate outcomes, treatment fidelity, and

other issues. Some of the more recent additions to this literature, such as Pritchett and Sandefur

(2013), discuss site selection bias as one additional threat to external validity, citing these Opower

results as a case study.

2 Overview: Experimental Design and Data

2.1 Experimental Design

Opower is a private company that partners with utilities to mail Home Energy Reports to resi-

dential electricity and natural gas consumers. Utilities partner with Opower and run other energy

conservation programs for several reasons. Most importantly, there are 27 states with Energy Effi-

ciency Resource Standards (EERS), which require utilities to reduce energy use by a given amount,

typically about one percent per year. In the absence of an EERS or other regulatory mechanism,

for-profit investor-owned utilities (IOUs) have little incentive to reduce demand for the product they

sell. Rural electric cooperatives and other utilities owned by municipalities or other government

entities should maximize welfare instead of profits, so they often run energy efficiency programs

if they believe the programs benefit customers. Aside from conserving energy, some utilities also

have found that the home energy report program can help improve consumers’ positive perception

of the utility brand.

To implement a program, Opower and the partner utility first identify a set of residential

consumers to target. Some small utilities choose to target the entire residential consumer base,

while others target heavy users who might be most responsive to the intervention, and others

target local geographic areas where conservation could help to delay costly upgrades to distribution

infrastructure. To be eligible for the program, a customer must have at least one year of valid

pre-experiment energy use data and satisfy some additional technical conditions.

4

The resulting

4

Typically, households in Opower’s experimental populations need to have valid names and addresses, no negative

electricity meter reads, at least one meter read in the last three months, no significant gaps in usage history, exactly

one account per customer per location, and a sufficient number of neighbors to construct the neighbor comparisons.

Households that have special medical rates or photovoltaic panels are sometimes also excluded. Utility staff and

“VIPs” are sometimes automatically enrolled in the reports, and I exclude these non-randomized report recipients

from any analysis. These technical exclusions eliminate only a small portion of the potential population. These

exclusions do not contribute to site selection bias if one believes that the excluded households would never receive


site-level population is then randomized into treatment and control groups.

Figure 1 shows an example report. The two-page letter has two key components. On the

first page, the Neighbor Comparison Module compares the household’s energy use to that of its 100

geographically nearest neighbors in similar-sized houses. The Action Steps Module, which is typically

on the second page, includes energy conservation tips targeted to the household based on its

historical energy use patterns and observed characteristics.

The treatment group is sent reports at frequencies that vary within and between households

and sites. For example, of the first ten programs, two randomized households between monthly and

quarterly frequencies, while three other programs targeted heavier users with monthly reports and

lighter users with quarterly. One common pattern is three consecutive monthly reports followed by

bimonthly reports for at least another two years.

The basic structure of the reports is highly consistent: two pages of neighbor comparisons,

additional personalized energy use information, and energy conservation tips. However, the reports

do vary. The envelope and report are branded with the partner utility’s name, and the information

and tips are updated each month to reflect the customer’s most recent energy bills and seasonal

factors; for example, customers are more likely to see information about air conditioners in the

summer. Despite this variation, there is a remarkably high degree of treatment fidelity compared to

other treatments of interest in economics. For example, “job training” often takes different forms

at different sites (Dehejia 2003, Hotz, Imbens, and Klerman 2006), and the effects of “contract

teachers” could depend markedly on the teacher’s ability and even who employs them (Bold et al.

2013).

Aside from treatment fidelity, there are two other useful features of the Opower experiments.

First, in the taxonomy of Levitt and List (2009), these are “natural field experiments,” meaning

that people are in general not aware that they are being studied. Therefore, there are no Hawthorne

effects. Second, these are “opt-out” experiments, and opting out requires actively calling the utility

and canceling. In the average program, only about 0.6 percent of the treatment group opts out

over the first year. Thus, there is no need to model essential heterogeneity or household-level

selection into the treatment (Heckman, Urzua, and Vytlacil 2006), and the treatment effect is a

Policy-Relevant Treatment Effect in the sense of Heckman and Vytlacil (2001).

2.2 Data

I use three kinds of data: characteristics of the population of potential utility partners, household-

level microdata from the first ten Opower sites through the end of 2010, and site-level metadata

from all Opower sites through February 2014.

the intervention and are thus not part of a Target population.


2.2.1 Utility-Level Characteristics

Several parts of the paper use utility-level characteristics for all or part of Opower’s population of

potential partner utilities. I define this population to be all 882 large electric utilities in the United

States, excluding small utilities with fewer than 10,000 residential consumers and power marketers

in states with deregulated retail markets, as Opower has no clients in these two categories. About

five percent of utilities operate in multiple states. In order to model how state and local policies

affect utilities’ decisions, a utility is defined as a separate observation for each state in which it

operates.

The primary data source is the Energy Information Administration (EIA) Form 861 for cal-

endar year 2007 (U.S. EIA 2013), the year before the first Opower programs began. From these

data, I construct each utility’s ownership structure (Investor-Owned, Municipality-Owned, or other,

which includes rural electric cooperatives and other government entities such as the Tennessee Val-

ley Authority), number of residential consumers, mean residential electricity usage, and the share of

consumers that have voluntarily enrolled in “green pricing programs” that sell renewably-generated

energy at a premium price. I also construct two measures of the extent of other existing energy

efficiency programs: the ratio of estimated electricity conserved in residential energy conserva-

tion programs to total residential electricity sold (“Residential Conservation/Sales”) and the ratio

of total spending on energy conservation programs to total revenues (“Conservation Cost/Total

Revenues”).

Form 861 includes a list of the counties in each utility’s service territory, which can be matched

to county-level demographic information. In a handful of cases (primarily in Alaska) where counties

could not be matched between datasets, I substituted state averages for county-level data. Using the

county-level U.S. Election Atlas (Leip 2013), I construct the share of all votes in the 2004 and 2008

presidential elections that were for the Green party candidate (“Green Vote Share”), as well as the

share of Democratic and Republican votes in those elections that were for the Democratic candidate

(“Democrat Vote Share”). County mean household income and the share of people 25 years and

older that have a college degree are from the 2000 Census. I also include whether the state in which

the utility operates has an Energy Efficiency Resource Standard (EERS), using information from

the Pew Center (2011). Finally, I gather data on physical characteristics of housing stock: mean

square footage and share of homes with pools are from the American Housing Survey state-level

averages, and share using electric heat, mean house age, share rented instead of owner-occupied,

and share single family are from the county-level American Community Survey 5-year estimates

for 2005-2009.


2.2.2 Site-Level Metadata

Due to contractual restrictions, Opower cannot share microdata from many of their recent part-

ners. Instead, they have provided their site-level metadata, including average treatment effects

and standard errors, number of reports sent, and attrition for each post-treatment month of each

RCT. Consistent with the theoretical framework in Section 3, I define a “site” as a group of house-

holds where one experiment takes place. Some utilities have multiple “sites,” because they began

with one customer sub-population and then added other sub-populations in separate randomized

control trials at a later date. As of February 2014, there were 111 sites with at least one year of

post-treatment data at 58 different utilities.

I study ATEs from the first 12 post-treatment months at each site, for several reasons. Consid-

ering full instead of partial years averages over seasonal variation in effect sizes, whereas comparing

programs that have been in effect over different seasons would require location-specific seasonal

adjustments. Comparing programs that have been in effect for different durations would also re-

quire duration controls, given that effect sizes tend to grow over time (Allcott and Rogers 2014).

This gradual strengthening of effects as treatment continues means that the ATEs studied here

are smaller than the ATEs that would be realized after a longer treatment period. Using one year

instead of two or more full years, however, allows the analysis to include the largest number of

sites. This comes at little cost in terms of precision: although the standard errors are somewhat

wider, the one-year ATE explains 92 percent of the variation in the two-year ATE.

Opower’s analysts estimated the ATEs using mutually-agreed procedures and code. I define $m_0$ as the month when the first home energy reports are generated. The 12 months before $m_0$ are the “baseline” period, while the “post-treatment” period begins the first day of the month after $m_0$. The month $m_0$ is excluded from the analysis, as it often will include days both before and after the first reports arrive. $Y_{ist}$ is daily average electricity usage (in kilowatt-hours per day) for household $i$ in site $s$ for the period ending in date $t$, divided by the control group’s mean usage over the post-treatment period and multiplied by 100. This comes from meter reads, which for most households occur about once per month. $Y_{0is}$ is a vector of three baseline usage controls: average daily usage over the entire baseline period, the baseline winter (December-March), and the baseline summer (June-September). $\pi_{sm}$ is a set of month-of-sample indicators. The first year ATE is estimated using the following equation:

$$Y_{ist} = -\tau_s T_{is} + \phi_{sm} Y_{0is} + \pi_{sm} + \varepsilon_{ist} \tag{1}$$

The intervention causes a decrease in energy use. By convention, I multiply τ T by -1, so that

reported τ are positive and larger values imply higher efficacy. Standard errors are robust and

clustered by household.⁵

⁵ Due to various contractual and computational issues, Opower has not been able to provide the clustered standard

Because $Y_{ist}$ is normalized by control group mean usage, $\tau_s$ can be interpreted as the percentage point effect on electricity use. For example, $\tau_s = 1$ would reflect a one percent effect. Of course, this

approach is also equivalent to regressing in levels (with Y in kWh/day) and dividing the coefficients

and standard errors by control group mean usage. There are at least two other potential ways to

normalize usage. One is to directly use kilowatt-hours per day instead of normalizing into a percent.

Across the 111 sites, however, treatment effects in kWh/day are closely associated with control group usage in kWh/day ($t = 11.35$, $R^2 = 0.54$). Because this association is well-understood, it

is more informative to compare and extrapolate in percent terms. By contrast, treatment effects

in percent are not statistically associated with control group usage. When sharing results publicly,

Opower typically reports in percent terms.
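The equivalence between normalizing usage first and rescaling a levels estimate afterward can be checked numerically. The sketch below is a minimal illustration under stated assumptions, not Opower's estimation code: the usage data are simulated, the baseline controls and month-of-sample indicators of Equation (1) are omitted, and a simple difference in means stands in for the regression. All names and numbers are hypothetical.

```python
import random
from statistics import mean

random.seed(0)

# Hypothetical post-treatment usage in kWh/day (simulated, not Opower data).
control = [random.gauss(30.0, 8.0) for _ in range(5000)]
treated = [random.gauss(30.0, 8.0) * 0.98 for _ in range(5000)]

control_mean = mean(control)


def normalize(usage_kwh):
    """Express usage as a percent of the control group's mean usage."""
    return [100.0 * y / control_mean for y in usage_kwh]


# Approach 1: normalize usage first, then take the difference in means.
# The sign follows the paper's convention: positive values mean conservation.
tau_normalized = mean(normalize(control)) - mean(normalize(treated))

# Approach 2: difference in means in kWh/day, then divide by control mean usage.
tau_levels = mean(control) - mean(treated)
tau_rescaled = 100.0 * tau_levels / control_mean

print(round(tau_normalized, 6), round(tau_rescaled, 6))
```

The two printed values agree up to floating-point rounding, because dividing every observation by a constant commutes with taking means.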

A second alternative approach would be to use the natural log of Y . However, the outcome of

policy interest is site-level or national-level electricity usage reduction in levels, e.g. kilowatt-hours

per day or per year. This is correctly calculated by differences in means of usage levels. Regressing

instead in logs and multiplying by the control group level tends to understate the quantity of energy

conserved, because regressing in logs gives higher weight to lower-usage households with smaller

effect sizes. Other practical reasons to prefer logs are less important in this context: there is very

little measurement error because these are administrative records, and the estimated $\hat{\tau}$ under my

normalization are not affected by dropping outlying high-usage observations.

Table 1 presents descriptive statistics for the metadata. The 111 site-level populations average

about 77,200 households, of which an average of 53,300 are assigned to treatment. The total

underlying sample size for the meta-analysis is thus approximately 8.57 million households, or

about one in every 12 in the United States. Control group post-treatment average usage ranges

from 12.0 to 90.1 kilowatt-hours (kWh) per day. For context, one kilowatt-hour is enough electricity

to run either a typical new refrigerator or a standard 60-watt incandescent lightbulb for about 17

hours. The average U.S. household consumes 11,280 kWh/year, or 30.9 kWh/day (U.S. EIA 2011).

The ATEs also vary substantially, both in levels and in percent.
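The magnitudes quoted in this paragraph follow from simple arithmetic. As a worked check, using the 111 sites reported for the metadata and assuming 365 days per year:

$$
\begin{aligned}
111 \times 77{,}200 &\approx 8.57 \text{ million households}, \\
11{,}280 \text{ kWh/year} \div 365 \text{ days/year} &\approx 30.9 \text{ kWh/day}, \\
1{,}000 \text{ Wh} \div 60 \text{ W} &\approx 16.7 \text{ hours}.
\end{aligned}
$$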

There are two types of attrition. First, an average of 10 percent of households move and close

their utility accounts each year. The site with the highest one-year move rates (42 percent) is at

a utility in a college town where most households are rentals that change hands each academic year.

After an account closes, Opower ceases to send reports and no longer observes electricity bills for

the physical location or the former occupant, so the unit attrits from the sample.

The second type of attrition is when a household actively calls the utility and asks to opt out of the program. An average of 0.6 percent of households opt out during the first year. These households’ utility bills are observed, and they remain in the sample. I define the “treatment” as “being mailed a Home Energy Report or opting out.” This definition of “treatment” gives a treatment effect of policy interest: the effect of attempting to mail Home Energy Reports to an entire site-level population. In practice, because opt-out rates are so low, the ATE is almost exactly the same when the “treatment” is defined as “being mailed a Home Energy Report.” One might also consider defining “treatment” as “opening and reading the Home Energy Report,” but this is both unobserved and less useful for policy.

⁵ (cont.) errors for five of the 111 sites. Notwithstanding, I observe non-clustered standard errors for all sites. For the five sites where clustered standard errors are not available, I have predicted them based on a regression of clustered on non-clustered standard errors in the other 106 sites. Because intra-household correlations of electricity use are similar across sites, the prediction has an $R^2$ of 0.87, so this approximation seems highly unlikely to affect the results.

Opower also works with utilities that sell only natural gas and other “dual fuel” utilities that sell

both natural gas and electricity. Instead of studying effects on electricity use only, one alternative

approach would be to combine effects on natural gas and electricity consumption. There are two

reasons why I do not do this. First, there is no equivalent of the EIA form 861 database for natural

gas utilities, so it would be difficult to construct a dataset with characteristics of potential partner

utilities. Second, while the treatment presumably affects natural gas and oil use in all sites where

households use these fuels, Opower only observes these effects if their partner utility is the company

that sells the other fuels. In many sites, the natural gas and oil retailers are separate companies

from the electricity retailer. I prefer a consistently-observed measure of the effects on electricity

use instead of an inconsistently-observed measure of the effects on total energy use.

2.2.3 Microdata

In addition to the metadata, I have household-level microdata through the end of 2010 for each of

the ten Opower programs that began before December 2009. Table 2 provides an overview. Due to

confidentiality restrictions, utility names and locations are masked and the sites are numbered from

one to ten. The dataset includes 21.3 million electricity meter reads from 512,000 households, of

which 5.4 million meter reads occur in the first year post-treatment. The rightmost column shows

that treatment and control groups at nine sites are statistically balanced on baseline usage, while

there is mild imbalance at site 5. Placebo tests using pre-treatment data suggest that controlling

for lagged electricity use eliminates the potential bias from this imbalance, and the overall results

are effectively the same when excluding site 5, which is unsurprising given that it is only a small

share of the ten-site sample.⁶

Opower and their partner utilities gather customer demographic data from public records and

private-sector marketing data providers such as Acxiom. I also have each household’s Census tract,

which I can map to Census data. For analysis of the microdata, I consider four mechanisms that

theory suggests could moderate the treatment effects, that are observed in the microdata, and that

vary both within and potentially between sites. Columns 1 and 2 of Table 3 present the means and

standard deviations of these variables in the ten-site sample.

⁶ Since these early programs, Opower has institutionalized a re-randomization algorithm to ensure covariate balance before implementation.

The first category is social norms: social inference, conditional cooperation, and conformity

suggest that households who learn that they use more energy than the norm should conserve more

energy than those who learn that they use less. This is measured by “First Comparison,” the

usage difference in kWh/day between a household and its mean neighbor, as reported in the Social

Comparison Module on the first report. While I also observe the comparisons on later reports,

only the first comparison is unaffected by treatment. This variable is also measured for the control

group because Opower’s computers generate “placebo” reports for control households.

The second category measures population preferences. Households that are more environmen-

tally conscious or have a taste for conservation should be more responsive to the intervention. For

example, Costa and Kahn (2013) show that households in Democratic or better educated neigh-

borhoods and households that donate to environmental groups have stronger treatment effects at

one early Opower site. Measures in this category include Census tract mean household income and

the share of population over 25 years old that holds a college degree, both from the 2000 Census.

A potentially important correlate of interest in energy conservation is “Hybrid Share,” the share

of all registered vehicles in the Census tract in 2013 that are hybrid-electric. Finally, this category

also includes “Green Pricing,” an indicator variable for whether the household participates in a

green pricing program.

The third category is increasing marginal cost of conservation. Households that have already

participated in other energy efficiency programs may have already exploited the lowest-cost energy

conservation opportunities, and any additional opportunities may be more costly. For example,

a natural way that consumers might respond to the Opower treatment is to be more assiduous

about turning off lights when not in use. Over the past few years, many utilities also ran programs

to replace standard incandescent lightbulbs with energy efficient Compact Fluorescent Lightbulbs

(CFLs). Because CFLs use one-fourth the electricity of an incandescent, a household that has

participated in one of these programs and then responds to the Opower treatment by turning off

the lights would save one-fourth the electricity of a household that still had incandescents. “EE

Program Participant” is an indicator for whether the household had received a loan or rebate for an

energy efficient appliance, insulation, or a heating, ventilation, and air conditioning system through

another utility program before the Opower program began. Of course, this variable should also be

correlated with preferences for conservation, and thus the association with the treatment effect

is theoretically ambiguous.

The fourth category is physical house characteristics, as measured by an Electric Heat indicator,

House Age, indicators for Has Pool, Rental (vs. owner-occupied), and Single Family buildings, and

Square Feet. With the possible exception of having a pool, there are straightforward theoretical

reasons why each of these six characteristics could moderate the treatment effect. One natural way

for consumers to respond to the intervention is to lower thermostat temperatures in the winter,

and having electric heat (instead of gas or oil heat) implies that this action would reduce electricity


use. Because building codes have been progressively tightened over the past 30 years, older homes

are less energy efficient and offer more low-cost opportunities to conserve. Although little is known

about how treatment groups respond to the reports because surveys have not been very informative

(Allcott and Rogers 2014), correlations between treatment effects and characteristics such as having

a pool may provide some insight. Renters have less ability and incentive to invest in energy efficient

capital stock in their apartments. Occupants of single family dwellings have more control over their

electricity use. Larger homes use more energy, but square footage could moderate treatment effects

even conditional on electricity use: it may be more or less difficult to conserve if electricity is used

more intensively in a small house vs. less intensively in a large house.

Section 3 uses these variables to extrapolate effects out of the microdata sample, and columns

3 and 4 of Table 3 present the target population means to which the effects are fitted. Column 3 is

the national mean across all 882 potential partner utilities, weighted by the number of consumers

in each utility. This weighting means that the extrapolated effect will reflect the total potential

savings if the treatment were scaled nationwide. Column 4 is the unweighted mean across the

“later sites,” which refers to the 101 Opower programs that started after the 10 programs in the

microdata sample. Weighting the 101 sites equally means that the predicted effect is the mean of

the site-level ATEs.
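The difference between the two target-population means can be made concrete with a short sketch. It assumes three made-up utilities with hypothetical electric heat shares and consumer counts; the function names are illustrative and do not come from the paper.

```python
def weighted_mean(values, weights):
    """Mean of `values` weighted by `weights` (e.g., number of consumers)."""
    total_weight = sum(weights)
    return sum(v * w for v, w in zip(values, weights)) / total_weight


def unweighted_mean(values):
    """Simple mean that gives every site or utility equal weight."""
    return sum(values) / len(values)


# Hypothetical utilities: share of homes with electric heat and consumer counts.
electric_heat_share = [0.10, 0.40, 0.25]
residential_consumers = [1_000_000, 50_000, 200_000]

# Analogue of column 3: consumer-weighted mean, relevant for nationwide scaling.
national_target = weighted_mean(electric_heat_share, residential_consumers)

# Analogue of column 4: unweighted mean, relevant for the mean of site-level ATEs.
site_level_target = unweighted_mean(electric_heat_share)

print(round(national_target, 4), round(site_level_target, 4))
```

In this made-up example the large utility dominates the weighted mean (0.136), while the unweighted mean (0.25) treats each utility equally, so the two targets can differ noticeably even for the same set of utilities.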

Table 3 shows that households in the microdata sample differ from the target populations on observable proxies for population preferences:

they have higher income, more college graduates, own more hybrid vehicles, and are more likely

to participate in green pricing programs. Their houses also have slightly different physical charac-

teristics, with less electric heat, fewer rentals, and more single-family homes. Appendix Table A1

presents the means and standard deviations of each variable at each specific site. Some variables

are not available for all sites, and Green Pricing and EE Program Participant are only available in

site 10.

Because treatment frequency varies, I will typically adjust for frequency when comparing ATEs across sites. A “frequency-adjusted” treatment effect $\tilde{\tau}$ is adjusted to match $F$, the average treatment frequency across all 111 sites in the metadata. As reported in Table 1, this is approximately 0.58 reports per month. Denoting the frequency at site $s$ as $F_s$, the adjustment is:

$$\tilde{\tau}_s = \hat{\tau}_s + \hat{\upsilon}(F - F_s) \tag{2}$$

Standard errors are calculated using the Delta method. The $\hat{\upsilon}$ is estimated using microdata from sites 2 and 7, where frequency was randomly assigned between monthly and quarterly. As shown in Appendix Table A2, the estimated $\hat{\upsilon}$ is 0.515 percent of electricity use per report/month, and the estimates from each of the two sites alone are economically and statistically similar. The point estimate implies that a one-standard deviation change in reports per month across the 111 sites (0.11 reports/month) would change the ATE by 0.515×0.11=0.056 percentage points. Frequency


adjustment does not meaningfully impact any of the analyses, both because the adjustment is

very small relative to the variation in effect sizes and because frequency is uncorrelated with other

factors. Appendix Figure A1 plots ATEs vs. frequency-adjusted ATEs; the $R^2$ is 0.98.
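The adjustment in Equation (2) is a one-line calculation. The sketch below assumes the values quoted in the text (an average frequency of 0.58 reports per month and a slope of 0.515 percent per report/month) as defaults; the example site is hypothetical, and the Delta-method standard errors are not implemented here.

```python
def frequency_adjusted_ate(tau_hat, site_frequency,
                           mean_frequency=0.58, upsilon_hat=0.515):
    """Shift a site's ATE to the average report frequency, as in Equation (2).

    tau_hat is in percent of electricity use, frequencies are in reports per
    month, and upsilon_hat is in percent per (report/month).
    """
    return tau_hat + upsilon_hat * (mean_frequency - site_frequency)


# Hypothetical site: a 1.40 percent ATE with monthly reports (1.0 reports/month).
adjusted = frequency_adjusted_ate(tau_hat=1.40, site_frequency=1.0)
print(round(adjusted, 4))
```

Because this hypothetical site sends reports more often than average, its adjusted ATE is lower than its raw ATE; a site that already sends reports at the average frequency is left unchanged.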

2.3 Economic Significance of Site-Level Heterogeneity

Is the heterogeneity across sites statistically and economically significant? If there is no true

variation in effects between sites and the variation in point estimates is simply due to sampling

error, then there is no possibility for site selection bias. Even if there is statistically significant

heterogeneity, there would be little reason to worry about site selection bias if the variation is not

economically important.

Data in Table 1 suggest that the variation in effects across sites is larger than can be explained

by sampling error: the standard deviation of the 111 site-level ATEs is 0.45 percent of electricity

usage, while the average standard error is only 0.18 percent. More formally, Cochran’s Q test rejects

that the effects are homogeneous with a p-value of less than 0.001. The $I^2$ statistic (Higgins and Thompson 2002) shows that 86.6 percent of the variation in effect sizes is due to true heterogeneity instead of sampling variation. Effectively none of this variation is due to variation in treatment intensity as measured by frequency: the standard deviation of frequency-adjusted ATEs and their mean standard error are 0.44 percent and 0.18 percent, respectively, and the $I^2$ is 85.6 percent.
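For readers unfamiliar with these heterogeneity statistics, the sketch below computes Cochran's Q and $I^2$ from site-level point estimates and standard errors using the standard inverse-variance formulas. The three example sites are hypothetical, and the code is an illustration of the textbook definitions rather than the procedure actually used for Table 1.

```python
def cochran_q(effects, std_errors):
    """Cochran's Q: inverse-variance-weighted squared deviations from the pooled mean."""
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    return sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))


def i_squared(effects, std_errors):
    """I^2 in percent: share of variation attributed to true heterogeneity."""
    q = cochran_q(effects, std_errors)
    degrees_of_freedom = len(effects) - 1
    if q <= 0:
        return 0.0
    return 100.0 * max(0.0, (q - degrees_of_freedom) / q)


# Hypothetical site-level ATEs (percent) and standard errors.
example_effects = [1.0, 1.5, 2.0]
example_ses = [0.2, 0.2, 0.2]

q_stat = cochran_q(example_effects, example_ses)
i2_stat = i_squared(example_effects, example_ses)
print(round(q_stat, 2), round(i2_stat, 1))
```

Under the null of homogeneous effects, Q is approximately chi-squared with degrees of freedom equal to the number of sites minus one, so a Q far above that value signals heterogeneity beyond sampling error.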

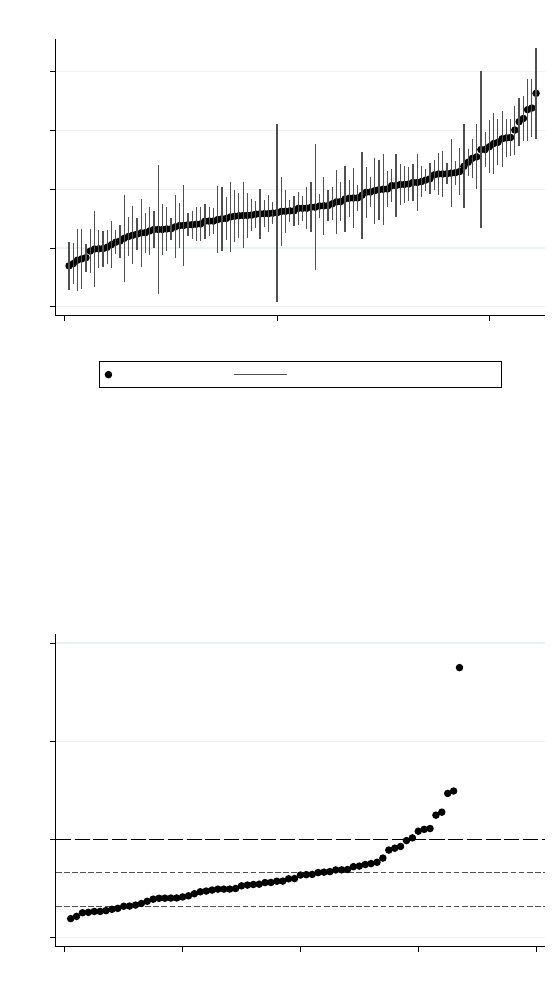

One measure of economic significance is the dollar magnitude of the variation in predicted

effects at scale. Figure 2 presents a horizontally-oriented forest plot of the predicted electricity cost

savings in the first year of a program that is expanded “nationally,” i.e. to all households at all

potential partner utilities. Each dot reflects the prediction using the percent ATE from each site,

multiplied by national annual electricity costs. The point estimates of first-year savings vary by a

factor of 5.2, from $695 million to $3.62 billion, and the standard deviation is $618 million.

A second measure of economic significance is the variation in cost effectiveness, as presented

in Figure 3. While there are many ways to calculate cost effectiveness, I present the simplest:

the ratio of program cost to kilowatt-hours conserved during the first two years.⁷ As Allcott and

Rogers (2014) point out, cost effectiveness improves substantially when evaluating over longer time

horizons; I use two years here to strike a balance between using longer time horizons to calculate

more realistic levels vs. using shorter time horizons to include more sites with sufficient post-

treatment data. I make a boilerplate cost assumption of $1 per report.
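The cost effectiveness ratio can be sketched as follows. This is a simplified per-household illustration under stated assumptions: it applies a single ATE to both years (whereas effects tend to grow over time), uses the $1 per report assumption from the text, and takes hypothetical values for the site's ATE, control usage, and report frequency.

```python
def cost_per_kwh_cents(ate_percent, control_kwh_per_day, reports_per_month,
                       cost_per_report_dollars=1.0, months=24, days=730):
    """Program cost per kWh conserved, in cents, for one treated household."""
    program_cost_dollars = cost_per_report_dollars * reports_per_month * months
    kwh_conserved = (ate_percent / 100.0) * control_kwh_per_day * days
    return 100.0 * program_cost_dollars / kwh_conserved


# Hypothetical site: 1.3 percent ATE, 30.9 kWh/day control usage,
# and 0.58 reports per month over two years.
example_cost = cost_per_kwh_cents(ate_percent=1.3,
                                  control_kwh_per_day=30.9,
                                  reports_per_month=0.58)
print(round(example_cost, 2))
```

Holding cost fixed, the ratio falls in proportion to the ATE and to baseline usage, which is why sites with weak effects or frequent reports look much less cost effective.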

The variation is again quite substantial. The most cost effective (0.88 cents/kWh) is 14 times better than the least cost effective, and the 10th percentile is four times better than the 90th percentile. The site on the right of the figure with outlying poor cost effectiveness is a small program with extremely weak ATE and high cost due to frequent reports.

⁷ Cost effectiveness would be further improved if natural gas savings were included. Of course, cost effectiveness is not a measure of welfare. The welfare effects of non-price interventions such as the Opower program are an important issue, but this is certainly distinct from this paper’s argument about site selection bias.

This variation is economically significant in the sense that it can cause program adoption errors:

program managers at a target site might make the wrong decision if they extrapolate cost effective-

ness from another site in order to decide whether to implement the program. Alternative energy

conservation programs have been estimated to cost approximately five cents per kilowatt-hour

(Arimura, Li, Newell, and Palmer 2011) or between 1.6 and 3.3 cents per kilowatt-hour (Friedrich

et al. 2009). These three values are plotted as horizontal lines on Figure 3. Whether an Opower

program at a new site has cost effectiveness at the lower or upper end of the range illustrated in

Figure 3 therefore could change whether a manager would or would not want to adopt. Extrap-

olating cost effectiveness from other sample sites could lead a target to implement when it is in

fact not cost effective, or fail to implement when it would be cost effective. As a concrete example,

I note that in one early site with a small ATE and therefore poor cost effectiveness, the partner

utility ended the program.

3 Extrapolation Under External Unconfoundedness

3.1 Model

3.1.1 Individuals and Potential Outcomes

There is a population of individual units indexed by i: in this case, household electricity accounts.

The outcome of interest is electricity use, denoted $Y_i$. $T_i \in \{1, 0\}$ is the treatment indicator variable. Following the Rubin (1974) Causal Model, each individual unit has two potential outcomes, $Y_i(1)$ if exposed to treatment and $Y_i(0)$ if not. These potential outcomes can be written as additively-separable functions of vectors of observed and unobserved characteristics $X_i$ and $U_i$:

$$
\begin{aligned}
Y_i(0) &= \beta(X_i) + \zeta(U_i) \\
Y_i(1) &= \alpha(X_i) + \beta(X_i) + \gamma(U_i) + \zeta(U_i)
\end{aligned}
\tag{3}
$$

The X variables are the set of individually-varying characteristics in Table 3. Notice that X

will not include characteristics that are “observed” at the site level but do not vary within the

sample, as α(X) cannot be estimated without variation in X. For example, the unemployment rate

near a job training center may be known, but local unemployment is an element of U unless the

sample includes individuals facing different local unemployment rates.

Individual $i$’s treatment effect is the difference in $Y_i$ between the treated and untreated states:

$$\tau_i = Y_i(1) - Y_i(0) = \alpha(X_i) + \gamma(U_i) \tag{4}$$

3.1.2 Sites

When considering replication and external validity, it is useful to introduce the concept of a “site”:

a set of individual units, often grouped by geography, where one program might be carried out. In

this case, because electric utilities typically contract individually with Opower, a site will typically

be either the set of residential customers at one utility or a subset thereof. In several states,

however, a quasi-governmental energy efficiency agency can contract with Opower, in which case a

site could comprise customers of different utilities within the same state. In other contexts, a “site”

might be a school or school district, a job training center, a microfinance institution, or a hospital.

The population of individual units is divided mutually exclusively and exhaustively into these

“sites.” I index sites by $s$ and use an integer variable $S_i$ to indicate the site of which individual $i$ is a member. Within each site, Opower randomizes individual units into treatment and control with constant probability. Thus, the ATE at site $s$ is simply:

$$\tau_s = E[\alpha(X_i) \mid S_i = s] + E[\gamma(U_i) \mid S_i = s] \tag{5}$$

To economize on notation, I define $X_s \equiv E[\alpha(X_i) \mid S_i = s]$ and $U_s \equiv E[\gamma(U_i) \mid S_i = s]$.
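The step from randomization to the site-level ATE can be spelled out. Because assignment within a site is independent of the potential outcomes, the treatment-control difference in mean outcomes identifies the mean of $\tau_i$ in that site. The following sketch uses only the definitions in Equations (3) and (4):

$$
\begin{aligned}
E[Y_i \mid T_i = 1, S_i = s] - E[Y_i \mid T_i = 0, S_i = s]
&= E[Y_i(1) \mid S_i = s] - E[Y_i(0) \mid S_i = s] \\
&= E[\tau_i \mid S_i = s] \\
&= E[\alpha(X_i) \mid S_i = s] + E[\gamma(U_i) \mid S_i = s] = \tau_s .
\end{aligned}
$$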

3.1.3 External Unconfoundedness

Because $T$ is randomly assigned, Rosenbaum and Rubin’s (1983) unconfoundedness assumption holds: $T_i \perp (Y_i(1), Y_i(0)) \mid X_i$. It is therefore possible to consistently estimate $\tau_s$ in “sample” sites where experiments have been carried out. Instead of simply estimating $\tau_s$ in sample, however, this paper’s objective is to estimate $\tau$ in non-sample “target” sites. Denote $D_i \in \{1, 0\}$ as an indicator variable for whether individual $i$ is a member of a sample site. The treatment effect in a target population is:

$$
\begin{aligned}
E[\tau_i \mid D_i = 0] ={}& E[\tau_i \mid D_i = 1] \\
&+ E[\alpha(X_i) \mid D_i = 0] - E[\alpha(X_i) \mid D_i = 1] \\
&+ E[\gamma(U_i) \mid D_i = 0] - E[\gamma(U_i) \mid D_i = 1]
\end{aligned}
\tag{6}
$$

The right side of the first line is the sample treatment effect, while the second line is the

adjustment for observable moderators. The third line reflects unobservable differences between

sample and target. Given that this cannot be controlled for, a non-zero third line implies a biased

estimate of the target treatment effect.
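The decomposition in Equation (6) can be verified on simulated data. The sketch below is purely illustrative: the linear forms chosen for α and γ, the population means, and all variable names are assumptions made for the example, and in real data the unobservable term could not be computed.

```python
import random
from statistics import mean

random.seed(1)


def alpha(x):
    """Hypothetical effect moderated by an observable characteristic."""
    return 0.5 * x


def gamma(u):
    """Hypothetical effect moderated by an unobservable characteristic."""
    return 0.3 * u


def draw_population(n, x_mean, u_mean):
    """Draw (X_i, U_i) pairs for n simulated individuals."""
    return [(random.gauss(x_mean, 1.0), random.gauss(u_mean, 1.0))
            for _ in range(n)]


sample = draw_population(2000, x_mean=1.0, u_mean=0.5)  # D_i = 1
target = draw_population(2000, x_mean=0.6, u_mean=0.0)  # D_i = 0

# Line 1 of Equation (6): the sample treatment effect.
sample_ate = mean(alpha(x) + gamma(u) for x, u in sample)
# Line 2: adjustment for observable moderators.
observable_adj = (mean(alpha(x) for x, _ in target)
                  - mean(alpha(x) for x, _ in sample))
# Line 3: difference in unobservables, unknown to the analyst in practice.
unobservable_term = (mean(gamma(u) for _, u in target)
                     - mean(gamma(u) for _, u in sample))

target_ate = mean(alpha(x) + gamma(u) for x, u in target)
reconstructed = sample_ate + observable_adj + unobservable_term
bias_if_ignored = (sample_ate + observable_adj) - target_ate

print(round(target_ate, 4), round(reconstructed, 4), round(bias_if_ignored, 4))
```

The reconstructed value matches the target ATE up to rounding, and the error from adjusting only for observables equals the negative of the third line, which is the sense in which a non-zero third line produces bias.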

One might formally think of an estimator as “externally valid” if one can use sample data to

consistently estimate parameters in other target sites. External validity requires an assumption

which resembles the unconfoundedness assumption required for internal validity: $D_i$ must be independent of the difference in potential outcomes conditional on observables. I call this assumption external unconfoundedness:

External Unconfoundedness: $D_i \perp (Y_i(1) - Y_i(0)) \mid X_i$

External unconfoundedness implies that the third line of Equation (6) is zero. This assumption

is conceptually analogous to assumptions in previous work, although technically slightly different:

it is a binary version of assumption (A2’) in Hotz, Imbens, and Klerman (2006), and it is a weaker

version of unconfounded location (Hotz, Imbens, and Mortimer 2005). I define and name it here

because it is precisely the assumption needed for this analysis and for many similar exercises in

other contexts. Furthermore, the word “external” is more descriptively accurate than “location” in

the Opower setting, where because samples often do not saturate the households in a geographic

area, effects may be extrapolated to target households in the same location as the sample.

3.1.4 “Site Assignment Mechanisms”

A key ingredient of internal validity in the Rubin Causal Model is the assignment mechanism: how

individuals are assigned between treatment and control. Analogously, a key ingredient of external

validity is how individuals are assigned between sample and target.

Building on a discussion in Hotz, Imbens, and Mortimer (2005, page 246), I formalize three

relevant classes of “site assignment mechanisms.” The first class involves unconfounded individual assignment to sites: $S_i \perp \tau_i \mid X_i$. This implies that unobservables do not vary across sites, so there

is no site-level treatment effect heterogeneity. Under this assignment mechanism, external uncon-

foundedness holds in a large sample of individuals when extrapolating between any pair of sample

and target sites. This would arise if individuals were randomly assigned to sites.

As an example of how this first class has been assumed, consider analyses of the GAIN job

training program that attribute differences in outcomes between Riverside County and other sites

only to an emphasis on Labor Force Attachment (LFA) (Dehejia 2003, Hotz, Imbens, and Klerman

2006). These analyses require that there are no unobservable factors other than the use of LFA that

moderate the treatment effect and differ across sites. More broadly, this assumption is required any

time an analyst argues that results from one site generalize to some different or broader population.

Of course, random assignment of individuals to sites is unlikely. In the Opower context, for

example, households are obviously not randomly assigned to utility service areas. Furthermore,

Figure 2 shows that unconditional ATEs vary substantially across sites, and the working paper ver-

sion of this paper showed that conditioning on observables explains very little of this heterogeneity.

In many other contexts, we also expect unobservables to vary across sites.

The second class of mechanisms involves unconfounded site assignment to sample: $D_s \perp \tau_s \mid X_s$.

Under this class of mechanisms, unobservables may vary in expectation across sites, there may

be site-level treatment effect heterogeneity, and external unconfoundedness need not hold when


extrapolating between a pair of sites. However, external unconfoundedness would hold when ex-

trapolating from a large sample of sample sites to a large sample of target sites. This would arise

if sites were randomly assigned to being in the sample.

The distinction between these two classes of site assignment mechanisms motivates the importance of replication, for two reasons. First, replication allows the econometrician to turn more U’s into X’s: adding sites can add variation in moderators that allows α(X) to be estimated. Second, even if some moderators remain unobserved in U, replication gives a sense of the distribution of

unobservable moderators across sites.

It may be reasonable to assume unconfounded site assignment to sample in multi-site program

evaluations where the researcher can choose sample sites without restriction and does so to maximize

external validity. For example, the JTPA evaluation initially hoped to randomly select sites for

evaluations within 20 strata defined by size, region, and a measure of program quality (Hotz

1992). The Moving to Opportunity experiment (Sanbonmatsu et al. 2011) was implemented in five

cities chosen for size and geographic diversity. Similarly, the RAND Health Insurance Experiment

(Manning et al. 1988) was implemented in six sites that were chosen for diversity in geographic

location, city size, and physician availability.

A third class of assignment mechanisms is when external unconfoundedness does not hold even

with a large number of sites. In the absence of random assignment of individuals to sites or sites

to sample, there are economic processes that drive selection into partnership. In these cases, there

may be “site selection bias,” under which sample ATEs provide systematically biased estimates of

target ATEs. Of course, site selection bias does not mean that the estimated sample ATEs are

biased away from the true sample ATEs. The model simply captures heterogeneous Conditional

Average Treatment Effects (CATEs) that could vary across sites. The reason to use the phrase

“site selection bias” is that it underscores that the Sample CATEs could be systematically different

from Target CATEs. Furthermore, these potential systematic differences arise from site selection

processes that can be theoretically understood and observed in practice.

The reason to carefully specify these site assignment mechanisms is that this clarifies the point of

the paper. Replication is intuitively appealing because even if there is site-level heterogeneity, with

an increasing number of replications it might seem reasonable to assume external unconfoundedness

due to unconfounded site assignment to sample. This section tests that appealing assumption in

what approaches a “best-case scenario,” with ten large-sample replications of essentially the same

treatment with high-quality microdata. Section 4, the core of the paper, then explores why this

assumption breaks down by examining the third class of site selection mechanisms.

3.2 Empirical Approach

I now extrapolate using sample microdata from the first ten Opower replications. I first predict the

program’s nationwide “technical potential”: the effect that would be expected if the program were

scaled nationwide, assuming external unconfoundedness. I then test the external unconfoundedness

assumption “in sample” by extrapolating from the microdata to the remainder of the sites in the

metadata.

The techniques that can be used for extrapolation are limited by the fact that I observe only the

means of X in the target populations. For example, because I do not have microdata for the sites

in the metadata, I cannot extrapolate to these sites by estimating propensity scores and weighting

by inverse probability weights. Instead, I use two other simple off-the-shelf approaches commonly

used in applied work: linear extrapolation and re-weighting. Before carrying out these procedures,

I first determine the subset of X variables that statistically significantly moderate the treatment

effect.

3.2.1 Testing for Treatment Effect Heterogeneity

The empirical analysis combines microdata from the first ten sites. The variable $Y_{1is}$ is the mean post-treatment energy use, as a percent of mean control group post-treatment usage in site $s$; it is simply a collapse of $Y_{ist}$ to the household daily average. $\tilde{X}_{is}$ is the vector of $K$ covariates summarized in Table 3, net of the sample means. Some $X$ variables are not observed at all households. Indexing the $X$ variables by $k$, if $\bar{X}_k$ is the mean of $X_{kis}$ over all households in all sites where $X_{kis}$ is non-missing, $\tilde{X}_{kis} = (X_{kis} - \bar{X}_k)$ if $X_{kis}$ is non-missing, and 0 if missing. $M_{kis}$ is an indicator that takes value 1 if $X_{kis}$ is missing, 0 if non-missing. $\tilde{M}_{is}$ is analogously a vector of length $K$, where $\tilde{M}_{kis} = (M_{kis} - \bar{M}_k)$.
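The construction of $\tilde{X}_{kis}$ and $\tilde{M}_{kis}$ for a single covariate $k$ can be sketched in a few lines of Python. This is only an illustration: the function name `demean_with_missing`, the use of `None` to mark a missing value, and the toy data are assumptions introduced here, and $\bar{M}_k$ is assumed to be the mean of $M_{kis}$ over all households.

```python
def demean_with_missing(values):
    """Return (x_tilde, m_tilde) for one covariate.

    values: list of floats, with None marking a missing observation
    (a hypothetical encoding chosen for this sketch).
    """
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("covariate is missing for every household")
    x_bar = sum(observed) / len(observed)       # mean over non-missing households
    m = [1.0 if v is None else 0.0 for v in values]
    m_bar = sum(m) / len(m)                     # share of households with missing X
    # De-meaned covariate, set to 0 where missing
    x_tilde = [0.0 if v is None else v - x_bar for v in values]
    # De-meaned missing indicator
    m_tilde = [mi - m_bar for mi in m]
    return x_tilde, m_tilde


# Toy data: four households, one with a missing value
x_tilde, m_tilde = demean_with_missing([1.0, None, 3.0, 5.0])
print(x_tilde)
print(m_tilde)
```

Both constructed variables have mean zero in the sample by design, which is the property needed for $\tau$ to be interpreted as the sample ATE.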

Heterogeneous treatment effects are estimated using the following equation:

$$Y_{1is} = -(\alpha \tilde{X}_{is} + \mu \tilde{M}_{is} + \tau) T_{is} + \beta_s \tilde{X}_{is} + \varpi_s \tilde{M}_{is} + \phi_s Y_{0is} + \pi_s + \varepsilon_{is} \tag{7}$$

As in the model, the $\alpha$ parameters capture how observables $X$ moderate the treatment effect. The first term is pre-multiplied by $-1$ to maintain the convention that more positive effects imply better efficacy. Because $\tilde{X}$ and $\tilde{M}$ are normalized to have mean zero in the sample, in expectation the constant term $\tau$ equals the sample ATE that would be estimated if $\tilde{X}$ and $\tilde{M}$ were not included in the regression. The $s$ sub-indices on $\beta$, $\varpi$, and $\phi$ represent the fact that the equation includes site-specific controls for the main effects of $\tilde{X}$, $\tilde{M}$, and $Y_0$.

Standard errors are robust and clustered by the unit of randomization. In sites 1-9,

randomization was at the household level. In site 10, however, households were grouped into 952 groups,

which were then randomized between treatment and control.

The test for treatment effect heterogeneity follows the “top-down” procedure of Crump, Hotz, Imbens, and Mitnik (2008). I start with the full set of $\tilde{X}$, estimate Equation (7), drop the one $\tilde{X}_k$ with the smallest t-statistic along with its corresponding $\tilde{M}$, and continue estimating and dropping until all remaining covariates have t-statistic greater than or equal to 2 in absolute value. I denote this set of remaining covariates as $\tilde{X}^{het}$, with corresponding missing indicators $\tilde{M}^{het}$.
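The elimination loop can be sketched as follows. This is a hedged illustration, not the estimation code behind the paper: `top_down_select`, `stub_fit`, the covariate labels, and the t-statistics are all hypothetical, and "smallest t-statistic" is assumed to mean smallest in absolute value. In practice the `fit_tstats` callable would re-estimate Equation (7) on the remaining covariates and return the t-statistics of their interactions with treatment.

```python
def top_down_select(covariates, fit_tstats, threshold=2.0):
    """Backward elimination on the treatment-interaction t-statistics.

    covariates: list of covariate names (hypothetical labels).
    fit_tstats: callable that re-estimates the model on the given covariates
        and returns {name: t-statistic of its interaction with treatment}.
    """
    remaining = list(covariates)
    while remaining:
        tstats = fit_tstats(remaining)              # re-estimate each round
        weakest = min(remaining, key=lambda k: abs(tstats[k]))
        if abs(tstats[weakest]) >= threshold:       # every survivor passes
            break
        remaining.remove(weakest)                   # drop it (and its missing indicator)
    return remaining


def stub_fit(names):
    # Stand-in for estimating Equation (7); the numbers are made up.
    t = {"pool": 3.1, "sqft": 2.4, "age": 0.3, "rent": -1.5}
    if "age" not in names:
        t["rent"] = -2.2   # t-statistics can change once a covariate is dropped
    return {k: t[k] for k in names}


selected = top_down_select(["pool", "sqft", "age", "rent"], stub_fit)
print(selected)
```

The stub is built so that one covariate fails the threshold in the first round but passes after another covariate is dropped, which is why the model is re-estimated after every deletion rather than screening all covariates once.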

3.2.2 Linear Prediction

Linear prediction is unbiased under the assumption that the treatment effect scales linearly in $X$: $\alpha(X) = \alpha X$, where $\alpha$ is now a vector of scalar parameters. Denote the vectors of sample and target means as $\bar{X}^{het}_m$ and $\bar{X}^{het}_g$, respectively. To predict target treatment effect $\tau_g$, I simply combine Equation (6) with the external unconfoundedness and linearity assumptions. The prediction with sample data is:

$$\hat{\tau}_g = \hat{\tau}_m + \hat{\alpha}(\bar{X}^{het}_g - \bar{X}^{het}_m) \tag{8}$$

Standard errors are calculated using the Delta method.
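A minimal sketch of Equation (8) and its delta-method standard error follows. It assumes that the target and sample means are treated as fixed constants, so that the prediction is linear in $(\hat{\tau}_m, \hat{\alpha})$ and the gradient is simply $(1, \bar{X}^{het}_g - \bar{X}^{het}_m)$. The function name `predict_target_ate` and all numbers are hypothetical and are not estimates from the paper.

```python
import math


def predict_target_ate(tau_m, alpha, x_target, x_sample, cov):
    """Linear prediction of the target ATE with a delta-method standard error.

    tau_m:    estimated sample ATE
    alpha:    list of estimated moderator coefficients
    x_target: target means of the moderators
    x_sample: sample means of the moderators
    cov:      covariance matrix of (tau_m, alpha_1, ..., alpha_K) as nested lists
    """
    dx = [g - m for g, m in zip(x_target, x_sample)]
    tau_g = tau_m + sum(a * d for a, d in zip(alpha, dx))
    grad = [1.0] + dx                       # gradient of tau_g w.r.t. (tau_m, alpha)
    n = len(grad)
    var = sum(grad[i] * cov[i][j] * grad[j] for i in range(n) for j in range(n))
    return tau_g, math.sqrt(var)


# Made-up inputs with two moderators and a diagonal covariance matrix
cov = [[0.01, 0.0, 0.0],
       [0.0, 0.04, 0.0],
       [0.0, 0.0, 0.09]]
tau_g, se = predict_target_ate(1.7, [0.5, -0.2], [1.0, 2.0], [0.0, 1.0], cov)
print(tau_g, se)
```

If sampling error in the target or sample means were also taken into account, the gradient and covariance matrix would need additional terms; this sketch omits them.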

3.2.3 Re-Weighting Estimator

A second approach to prediction is to re-weight the sample population to approximate the target. To do this, I apply the approach of Hellerstein and Imbens (1999), who show more generally how samples can be re-weighted to match moment conditions from auxiliary data. Given that only the target means of $X$ are observed, I assume that the target distribution of observables $f_g(x)$ is simply a rescaled version of the sample distribution $f_m(x)$, so $f_m(x) = f_g(x) \cdot (1 + \lambda(x - \bar{X}_g))$, where $\lambda$ is a vector of scaling parameters. Under this assumption, observation weights $w_{is} = 1/(1 + \lambda(X_{is} - \bar{X}_g))$ re-weight the sample to exactly equal the target distribution. Following Hellerstein and Imbens (1999), I estimate $w_{is}$ using empirical likelihood, which is equivalent to maximizing $\sum_i \ln w_{is}$ subject to the constraints that $\sum_i w_{is} = 1$ and $\sum_i w_{is} X_{is} = \bar{X}^{het}_g$. In words, the second constraint is that the re-weighted sample mean of $X$ equals the target mean. Given that the sum of the weights is constrained to 1, Jensen’s inequality implies that maximizing the sum of $\ln w_{is}$ penalizes variation in $w$ from the mean. Thus, the Hellerstein and Imbens (1999) procedure amounts to finding observation weights that are as similar as possible while still matching the target means.
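For intuition, the empirical likelihood problem can be solved directly when there is a single covariate. The sketch below is an illustration under stated assumptions, not the routine used in the paper: the function name `el_weights`, the bisection solver, and the toy data are introduced here. With $d_i = X_i - \bar{X}_g$, the first-order conditions give normalized weights $w_i = 1/(N(1 + \lambda d_i))$, where $\lambda$ solves $\sum_i d_i/(1 + \lambda d_i) = 0$. This function is strictly decreasing in $\lambda$ on the interval where all weights are positive, so bisection finds the root.

```python
def el_weights(x, target_mean, tol=1e-12, max_iter=200):
    """Empirical likelihood weights matching one target mean (scalar covariate)."""
    d = [xi - target_mean for xi in x]
    if not (min(d) < 0 < max(d)):
        raise ValueError("target mean must lie strictly inside the sample range")
    n = len(d)

    def score(lam):
        # First-order condition; zero at the empirical likelihood solution
        return sum(di / (1.0 + lam * di) for di in d)

    # Open interval of lambda values for which every weight is positive
    lo, hi = -1.0 / max(d), -1.0 / min(d)
    eps = 1e-9 * (hi - lo)
    lo, hi = lo + eps, hi - eps
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if score(mid) > 0:      # score is decreasing in lambda
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    lam = 0.5 * (lo + hi)
    weights = [1.0 / (n * (1.0 + lam * di)) for di in d]
    return lam, weights


# Toy sample with mean 3.0, re-weighted to a target mean of 3.5
x = [1.0, 2.0, 3.0, 4.0, 5.0]
lam, w = el_weights(x, 3.5)
print(sum(w), sum(wi * xi for wi, xi in zip(w, x)))
```

Because the target mean exceeds the sample mean in this toy example, the solution places more weight on larger values of the covariate. When the target mean equals the sample mean, $\lambda = 0$ and all weights are equal, consistent with the idea that the weights are as similar as the constraint allows.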

3.3 Results

Table 4 presents heterogeneous treatment effects using combined microdata from the first ten sites.

Column 1 presents the unconditional treatment effect, estimated using Equation (7) without any of the $\tilde{X}$ or $\tilde{M}$ variables. The ATE across the first ten sites is 1.707 percent of energy use. The $R^2$ of 0.86 reflects the fact that the lagged outcome $Y_{0is}$ explains much of the variation in $Y_{1is}$.

Column 2 presents estimates of Equation (7) including all $\tilde{X}$ and $\tilde{M}$ variables and their interactions with $T$. Column 3 presents the results from the last regression of the Crump, Hotz, Imbens, and Mitnik (2008) “top-down” procedure, including only the $\tilde{X}^{het}$ and $\tilde{M}^{het}$ that statistically moderate the treatment effect. Column 4 adds a set of 10 site indicators interacted with $T$. This identifies the $\alpha$ parameters only off of within-site variation, not between-site variation. Column 5 repeats column 4 after adding the interaction between $T$ and $Y_{0is}$. This tests whether the $\alpha_k$ coefficients reflect an omitted association between $X_k$ and baseline usage.

The $\alpha_k$ coefficients in columns 2-5 are remarkably similar. Furthermore, Appendix Table A3 presents estimates of column 2 specific to each of the 10 sites. None of the coefficients are solely driven by any one site. There is only one case where the $\hat{\alpha}$ from one site is statistically significant and has a sign opposite the $\hat{\alpha}$ in the combined data: in site 2, households with electric heat have an imprecisely-estimated zero treatment effect, while in the combined data, homes with electric heat tend to have statistically larger effects than non-electric heat homes.

The signs and magnitudes are also sensible. The first social comparison interaction is positive:

informing a household that it uses ten kilowatt-hours per day more than its neighbors is associated

with just less than a one percentage point larger treatment effect. Electric heat and single family

homes conserve more, as do households with more square feet. Having a pool is also associated with

a 1 to 1.2 percentage point larger effect. Because these estimates condition on First Comparison,

as well as baseline electricity use in column 5, the α parameters for physical characteristics reflect

the extent to which the characteristic is associated with the treatment effect relative to some other

household characteristic that would use the same amount of electricity.

Estimates like those in Table 4 should be interpreted cautiously for two reasons. First, X is not

randomly assigned, meaning that the α parameters may not be causal. For example, the α for First

Comparison cannot be interpreted as the causal impact of normative information on the treatment

effect, as this association could also be moderated by other factors that both increase electricity

use and make households more responsive. Similarly, giving pools to randomly-assigned households

may not increase treatment effects. However, as long as the α parameters are stable within and

between sites, they are useful for prediction even if they do not reflect causal relationships.