Title stata.com

contrast — Contrasts and linear hypothesis tests after estimation


Description

contrast tests linear hypotheses and forms contrasts involving factor variables and their interactions

from the most recently fit model. The tests include ANOVA-style tests of main effects, simple effects,

interactions, and nested effects. contrast can use named contrasts to decompose these effects into

comparisons against reference categories, comparisons of adjacent levels, comparisons against the

grand mean, orthogonal polynomials, and such. Custom contrasts may also be specified.

contrast can be used with svy estimation results; see [SVY] svy postestimation.

Contrasts can also be computed for margins of linear and nonlinear responses; see [R] margins,

contrast.

Quick start

Contrasts for one-way models

Test the main effect of categorical variable a after regress y i.a or anova y a

contrast a

Reference category contrasts of cell means of y with the smallest value of a as the base category

contrast r.a

Same as above, but specify a = 3 as the base category for comparisons

contrast rb3.a

Report tests instead of confidence intervals for each contrast

contrast r.a, pveffects

Report tests and confidence intervals for each contrast

contrast r.a, effects

Contrasts of the cell mean of y for each level of a with the grand mean of y

contrast g.a

Same as above, but compute grand mean as a weighted average of cell means with weights based on

the number of observations for each level of a

contrast gw.a

User-defined contrast comparing the cell mean of y for a = 1 with the average of the cell means for

a = 3 and a = 4

contrast {a -1 0 .5 .5}

Contrasts for two-way models

Test of the interaction effect after regress y a##b or anova y a##b

contrast a#b

Test of the main and interaction effects

contrast a b a#b

Same as above

contrast a##b

Individual reference category contrasts for the interaction of a and b

contrast r.a#r.b

Joint tests of the simple effects of a within each level of b

contrast a@b

Individual reference category contrasts for the simple effects of a within each level of b

contrast r.a@b

Orthogonal polynomial contrasts for a within each level of b

contrast p.a@b

Reference category contrasts of the marginal means of y for levels of a

contrast r.a

Same as above, but with marginal means for a computed as a weighted average of cell means, using

the marginal frequencies of b rather than equal weights for each level

contrast r.a, asobserved

Contrasts of the marginal mean of y for each level of a with the previous level—reverse-adjacent

contrasts

contrast ar.a

Contrasts for models with continuous covariates

Test of the interaction effect after regress y a##c.x or anova y a##c.x

contrast a#c.x

Reference category effects of a on the slope of x

contrast r.a#c.x

Reference category effects of a on the intercept

contrast r.a

Contrasts for nonlinear models

Orthogonal polynomial contrasts of log odds across levels of a after logit y i.a

contrast p.a

Test the main and interaction effects after logit y a##b

contrast a##b

Simple reference category effects for a within each level of b

contrast r.a@b

Contrasts for multiple-equation models

Test the main and interaction effects in the equation for y2 after mvreg y1 y2 y3 = a##b

contrast a##b, equation(y2)

Reference category contrasts of estimated marginal means of y3 for levels of a

contrast r.a, equation(y3)

Test for a difference in the overall estimated marginal means of y1, y2, and y3

contrast _eqns

Contrasts of estimated marginal means of y2 and y3 with y1

contrast r._eqns

Test whether interaction effects differ across equations

contrast a#b#_eqns

Menu

Statistics > Postestimation

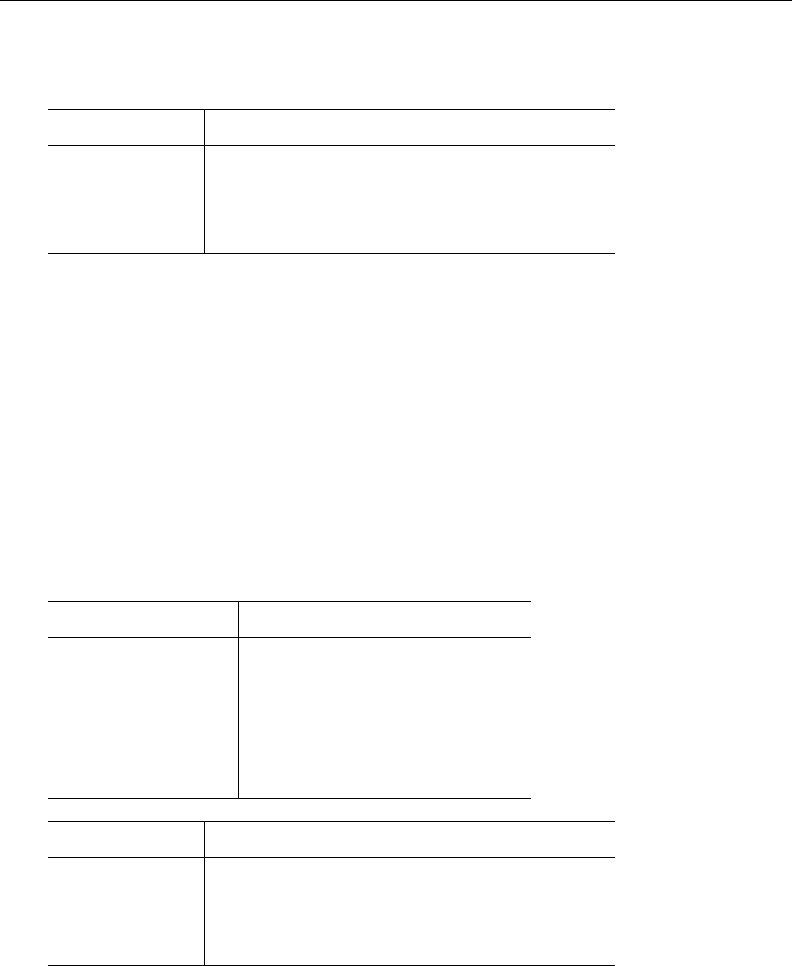

Syntax

contrast termlist [, options]

where termlist is a list of factor variables or interactions that appear in the current estimation results.

The variables may be typed with or without contrast operators, and you may use any factor-variable

syntax:

See the operators (op.) table below for the list of contrast operators.

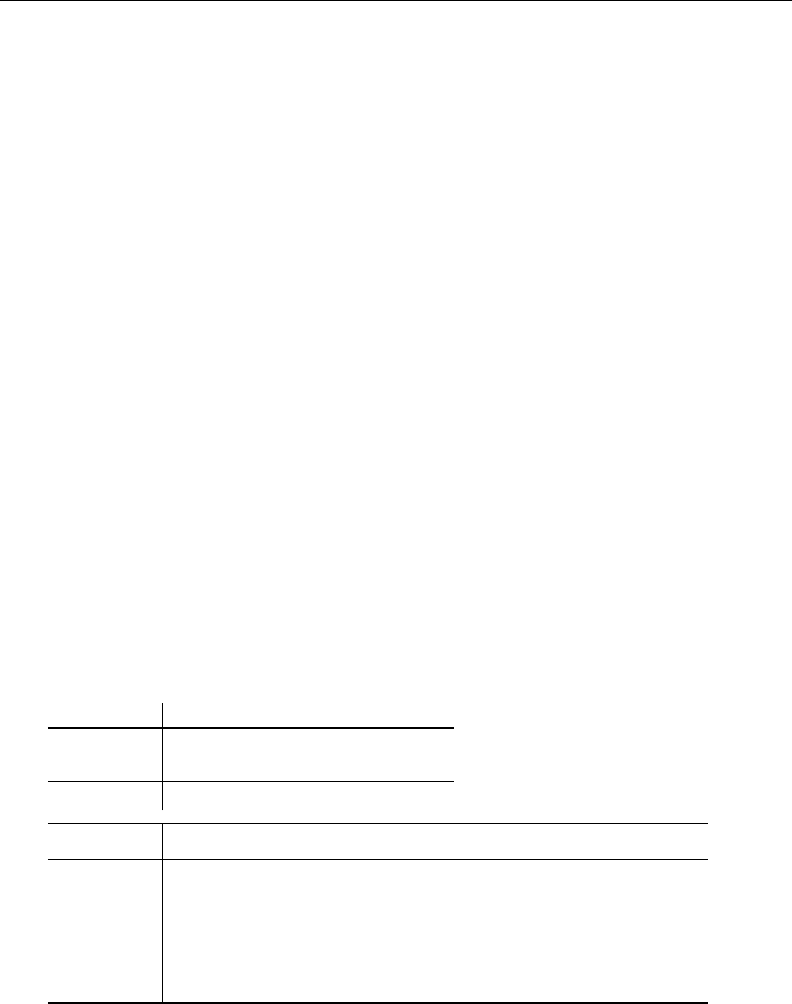

options Description

Main

overall add a joint hypothesis test for all specified contrasts

asobserved treat all factor variables as observed

lincom treat user-defined contrasts as linear combinations

Equations

equation(eqspec) perform contrasts in termlist for equation eqspec

atequations perform contrasts in termlist within each equation

Advanced

emptycells(empspec) treatment of empty cells for balanced factors

noestimcheck suppress estimability checks

Reporting

level(#) confidence level; default is level(95)

mcompare(method) adjust for multiple comparisons; default is mcompare(noadjust)

noeffects suppress table of individual contrasts

cieffects show effects table with confidence intervals

pveffects show effects table with p-values

effects show effects table with confidence intervals and p-values

nowald suppress table of Wald tests

noatlevels report only the overall Wald test for terms that use the within @ or nested | operator

nosvyadjust compute unadjusted Wald tests for survey results

sort sort the individual contrast values in each term

post post contrasts and their VCEs as estimation results

display_options control column formats, row spacing, line width, and factor-variable labeling

eform_option report exponentiated contrasts

df(#) use t distribution with # degrees of freedom for computing p-values and confidence intervals

collect is allowed; see [U] 11.1.10 Prefix commands.

df(#) does not appear in the dialog box.

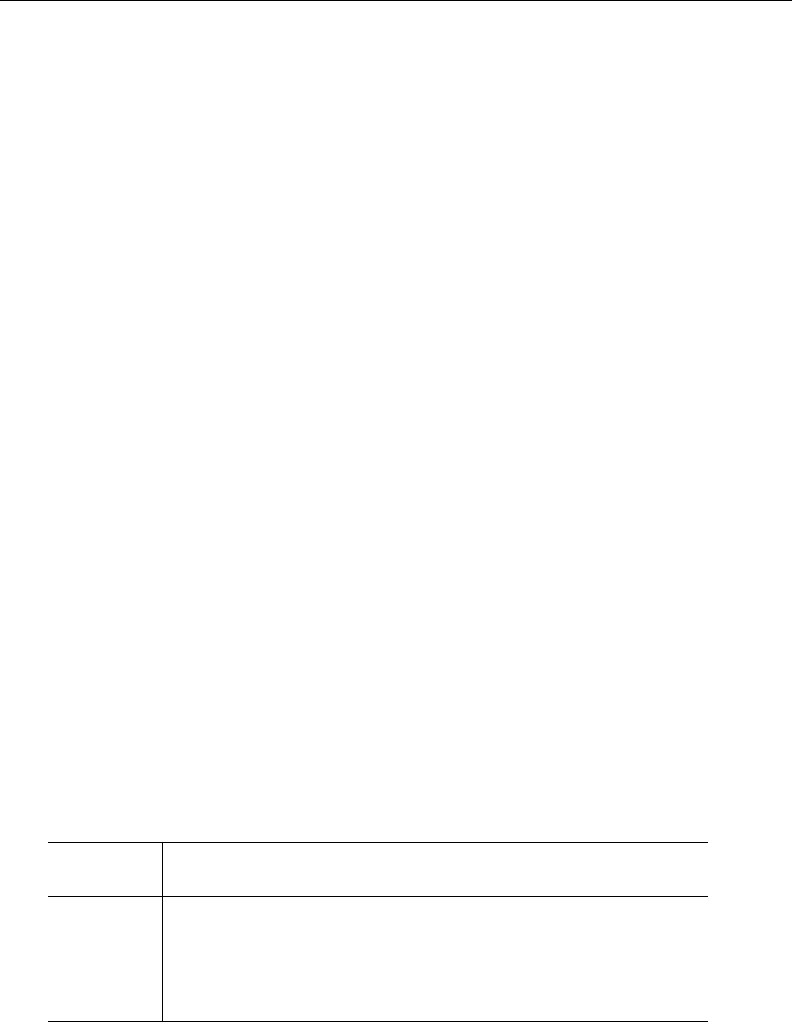

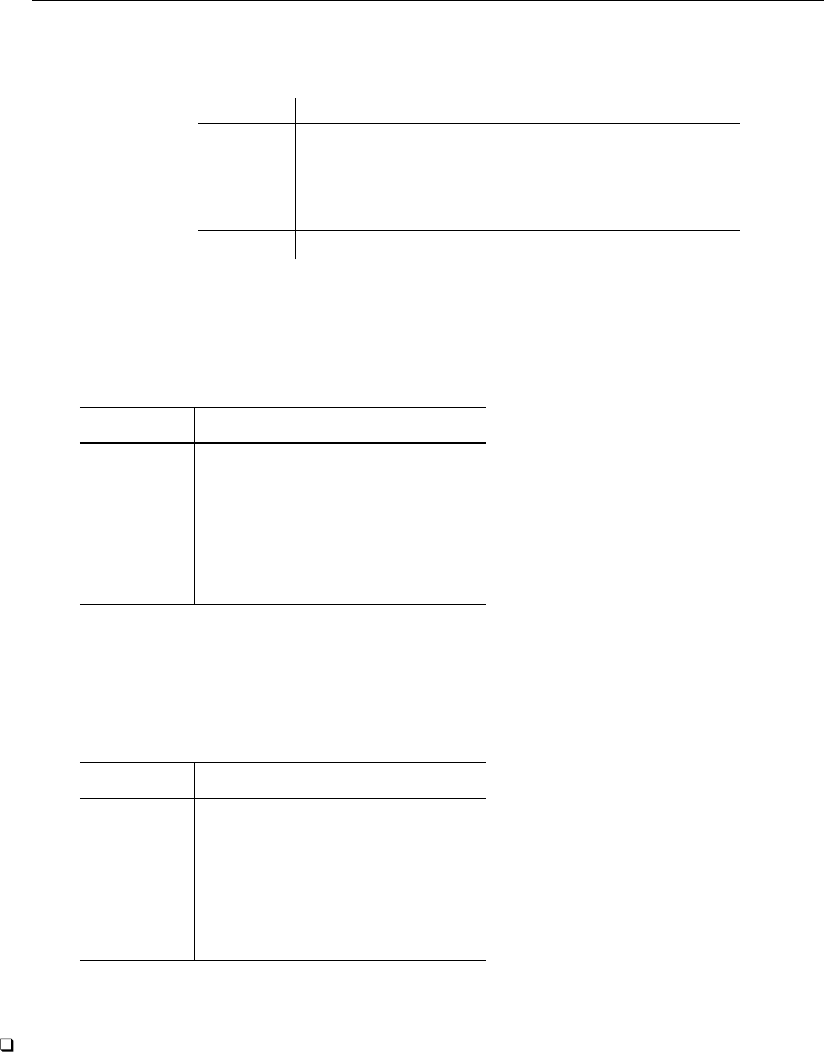

Term Description

Main effects

A joint test of the main effects of A

r.A individual contrasts that decompose A using r.

Interaction effects

A#B joint test of the two-way interaction effects of A and B

A#B#C joint test of the three-way interaction effects of A, B, and C

r.A#g.B individual contrasts for each interaction of A and B defined by r. and g.

Partial interaction effects

r.A#B joint tests of interactions of A and B within each contrast defined by r.A

A#r.B joint tests of interactions of A and B within each contrast defined by r.B

Simple effects

A@B joint tests of the effects of A within each level of B

A@B#C joint tests of the effects of A within each combination of the levels of B and C

r.A@B individual contrasts of A that decompose A@B using r.

r.A@B#C individual contrasts of A that decompose A@B#C using r.

Other conditional effects

A#B@C joint tests of the interaction effects of A and B within each level of C

A#B@C#D joint tests of the interaction effects of A and B within each combination of the levels of C and D

r.A#g.B@C individual contrasts for each interaction of A and B that decompose A#B@C using r. and g.

Nested effects

A|B joint tests of the effects of A nested in each level of B

A|B#C joint tests of the effects of A nested in each combination of the levels of B and C

A#B|C joint tests of the interaction effects of A and B nested in each level of C

A#B|C#D joint tests of the interaction effects of A and B nested in each combination of the levels of C and D

r.A|B individual contrasts of A that decompose A|B using r.

r.A|B#C individual contrasts of A that decompose A|B#C using r.

r.A#g.B|C individual contrasts for each interaction of A and B defined by r. and g. nested in each level of C

Slope effects

A#c.x joint test of the effects of A on the slopes of x

A#c.x#c.y joint test of the effects of A on the slopes of the product (interaction) of x and y

A#B#c.x joint test of the interaction effects of A and B on the slopes of x

A#B#c.x#c.y joint test of the interaction effects of A and B on the slopes of the product (interaction) of x and y

r.A#c.x individual contrasts of A’s effects on the slopes of x using r.

Denominators

... / term2 use term2 as the denominator in the F tests of the preceding terms

... / use the residual as the denominator in the F tests of the preceding terms (the default if no other /s are specified)

A, B, C, and D represent any factor variable in the current estimation results.

x and y represent any continuous variable in the current estimation results.

r. and g. represent any contrast operator. See the table below.

c. specifies that a variable be treated as continuous; see [U] 11.4.3 Factor variables.

Operators are allowed on any factor variable that does not appear to the right of @ or |. Operators

decompose the effects of the associated factor variable into one-degree-of-freedom effects (contrasts).

Higher-level interactions are allowed anywhere an interaction operator (#) appears in the table.

Time-series operators are allowed if they were used in the estimation.

_eqns designates the equations in manova, mlogit, mprobit, and mvreg and can be specified

anywhere a factor variable appears.

/ is allowed only after anova, cnsreg, manova, mvreg, or regress.

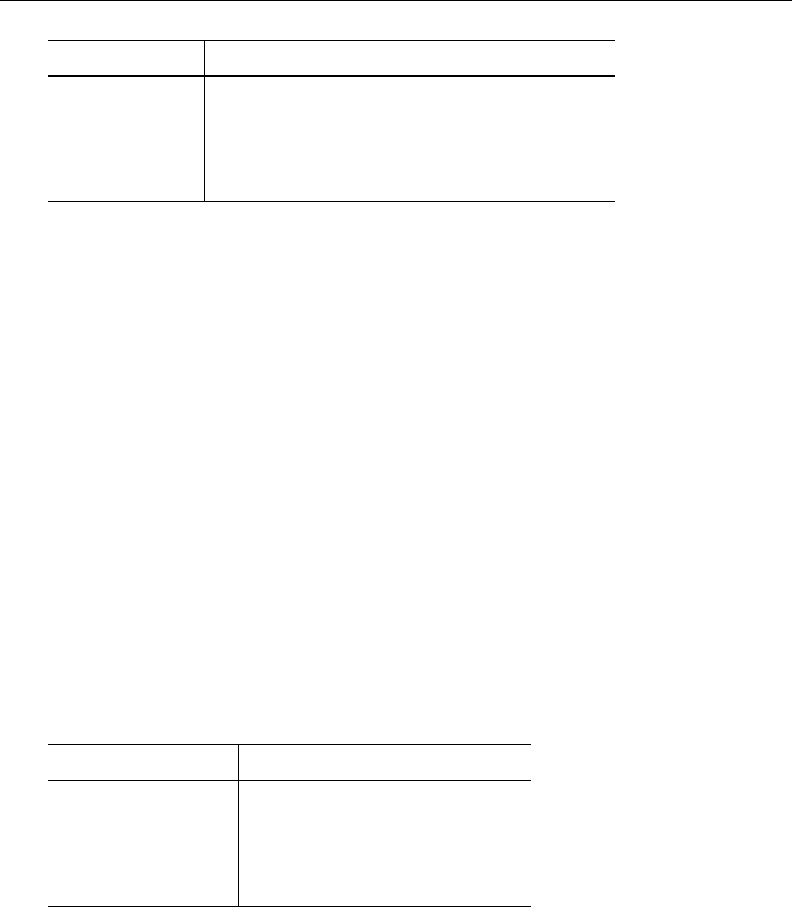

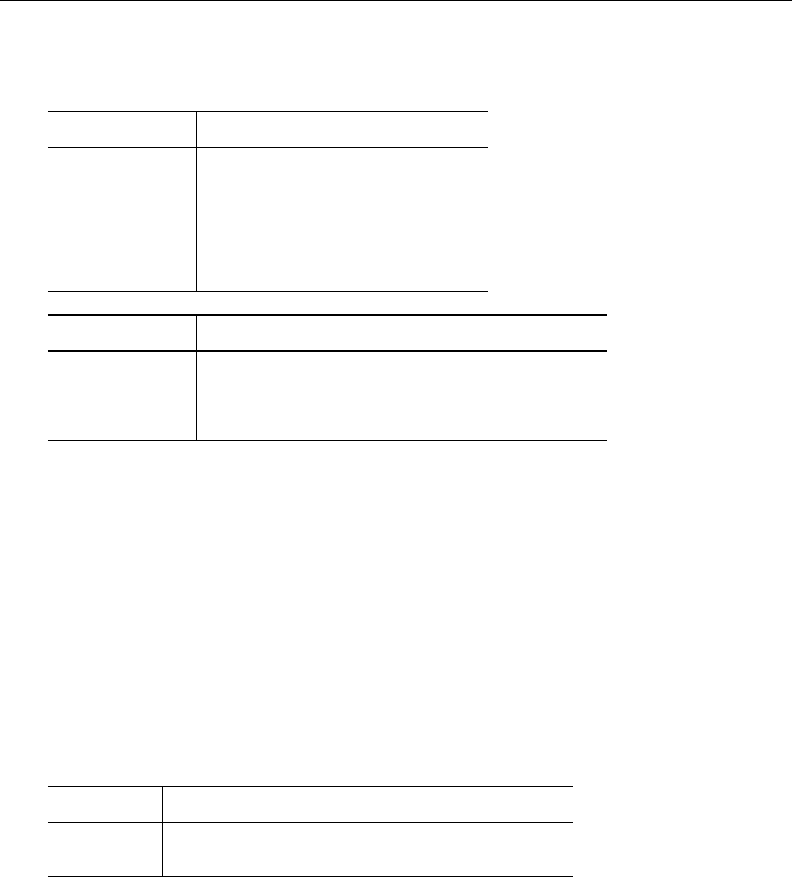

operators (op.) Description

r. differences from the reference (base) level; the default

a. differences from the next level (adjacent contrasts)

ar. differences from the previous level (reverse adjacent contrasts)

As-balanced operators

g. differences from the balanced grand mean

h. differences from the balanced mean of subsequent levels (Helmert contrasts)

j. differences from the balanced mean of previous levels (reverse Helmert contrasts)

p. orthogonal polynomial in the level values

q. orthogonal polynomial in the level sequence

As-observed operators

gw. differences from the observation-weighted grand mean

hw. differences from the observation-weighted mean of subsequent levels

jw. differences from the observation-weighted mean of previous levels

pw. observation-weighted orthogonal polynomial in the level values

qw. observation-weighted orthogonal polynomial in the level sequence

One or more individual contrasts may be selected by using the op#. or op(numlist). syntax. For

example, a3.A selects the adjacent contrast for level 3 of A, and p(1/2).B selects the linear and

quadratic effects of B. Also see Orthogonal polynomial contrasts and Beyond linear models.

Custom contrasts Description

{A numlist} user-defined contrast on the levels of factor A

{A#B numlist} user-defined contrast on the levels of interaction between A and B

Custom contrasts may be part of a term, such as {A numlist}#B, {A numlist}@B, {A numlist}|B, {A#B

numlist}, and {A numlist}#{B numlist}. The same is true of higher-order custom contrasts, such

as {A#B numlist}@C, {A#B numlist}#r.C, and {A#B numlist}#c.x.

Higher-order interactions with at most eight factor variables are allowed with custom contrasts.

method Description

noadjust do not adjust for multiple comparisons; the default

bonferroni [adjustall] Bonferroni’s method; adjust across all terms

sidak [adjustall] Šidák’s method; adjust across all terms

scheffe Scheffé’s method

Options

Main

overall specifies that a joint hypothesis test over all terms be performed.

asobserved specifies that factor covariates be evaluated using the cell frequencies observed in the

estimation sample. The default is to treat all factor covariates as though there were an equal number

of observations in each level.

lincom specifies that user-defined contrasts be treated as linear combinations. The default is to require

that all user-defined contrasts sum to zero. (Summing to zero is part of the definition of a contrast.)

Equations

equation(eqspec) specifies the equation from which contrasts are to be computed. The default is

to compute contrasts from the first equation.

atequations specifies that the contrasts be computed within each equation.

Advanced

emptycells(empspec) specifies how empty cells are handled in interactions involving factor variables

that are being treated as balanced.

emptycells(strict) is the default; it specifies that contrasts involving empty cells be treated

as not estimable.

emptycells(reweight) specifies that the effects of the observed cells be increased to accommodate

any missing cells. This makes the contrast estimable but changes its interpretation.

noestimcheck specifies that contrast not check for estimability. By default, the requested contrasts

are checked and those found not estimable are reported as such. Nonestimability is usually caused

by empty cells. If noestimcheck is specified, estimates are computed in the usual way and

reported even though the resulting estimates are manipulable, which is to say they can differ across

equivalent models having different parameterizations.

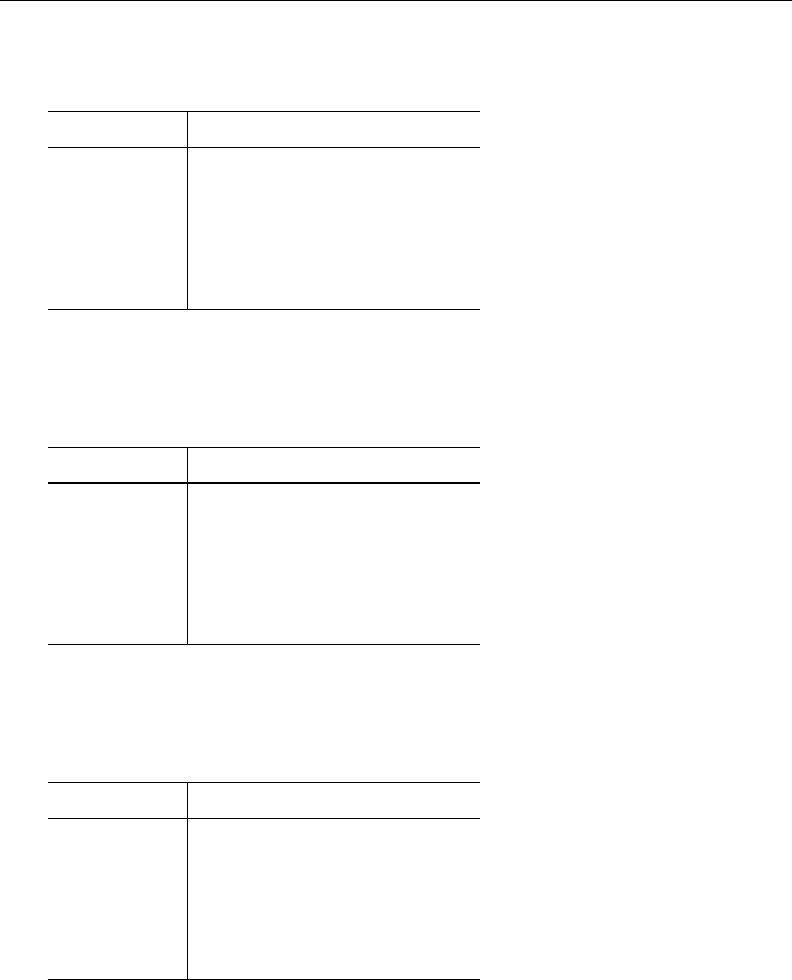

Reporting

level(#) specifies the confidence level, as a percentage, for confidence intervals. The default is

level(95) or as set by set level; see [U] 20.8 Specifying the width of confidence intervals.

mcompare(method) specifies the method for computing p-values and confidence intervals that account for multiple comparisons within a factor-variable term.

Most methods adjust the comparisonwise error rate, $\alpha_c$, to achieve a prespecified experimentwise error rate, $\alpha_e$.

mcompare(noadjust) is the default; it specifies no adjustment.

$$\alpha_c = \alpha_e$$

mcompare(bonferroni) adjusts the comparisonwise error rate based on the upper limit of the Bonferroni inequality

$$\alpha_e \le m\alpha_c$$

where m is the number of comparisons within the term.

The adjusted comparisonwise error rate is

$$\alpha_c = \alpha_e / m$$

mcompare(sidak) adjusts the comparisonwise error rate based on the upper limit of the probability inequality

$$\alpha_e \le 1 - (1 - \alpha_c)^m$$

where m is the number of comparisons within the term.

The adjusted comparisonwise error rate is

$$\alpha_c = 1 - (1 - \alpha_e)^{1/m}$$

This adjustment is exact when the m comparisons are independent.

mcompare(scheffe) controls the experimentwise error rate using the F or $\chi^2$ distribution with degrees of freedom equal to the rank of the term.

mcompare(method adjustall) specifies that the multiple-comparison adjustments count all

comparisons across all terms rather than performing multiple comparisons term by term. This

leads to more conservative adjustments when multiple variables or terms are specified in

termlist. This option is compatible only with the bonferroni and sidak methods.
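The two closed-form adjustments above can be checked by hand. The following is a minimal sketch, assuming an experimentwise rate of 0.05 and four comparisons in a term; both values and all scalar names are illustrative choices and do not come from this entry.

```stata
* Hypothetical inputs: experimentwise error rate and number of comparisons
scalar alpha_e = 0.05
scalar ncomp   = 4

* Bonferroni: alpha_c = alpha_e/m
scalar alpha_bonf = scalar(alpha_e)/scalar(ncomp)

* Sidak: alpha_c = 1 - (1 - alpha_e)^(1/m)
scalar alpha_sidak = 1 - (1 - scalar(alpha_e))^(1/scalar(ncomp))

display "Bonferroni comparisonwise rate: " scalar(alpha_bonf)
display "Sidak comparisonwise rate:      " scalar(alpha_sidak)
```

With these inputs, the Bonferroni rate is 0.05/4 = 0.0125, and the Šidák rate is slightly larger, so Šidák's method is the less conservative of the two. With mcompare(method adjustall), m would count the comparisons across all terms instead of within one term.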

noeffects suppresses the table of individual contrasts with confidence intervals. This table is

produced by default when the mcompare() option is specified or when a term in termlist implies

all individual contrasts.

cieffects specifies that a table containing a confidence interval for each individual contrast be

reported.

pveffects specifies that a table containing a p-value for each individual contrast be reported.

effects specifies that a single table containing a confidence interval and p-value for each individual

contrast be reported.

nowald suppresses the table of Wald tests.

noatlevels indicates that only the overall Wald test be reported for each term containing within or

nested (@ or |) operators.

nosvyadjust is for use with svy estimation commands. It specifies that the Wald test be carried out without the default adjustment for the design degrees of freedom. That is to say, the test is carried out as $W/k \sim F(k, d)$ rather than as $(d - k + 1)W/(kd) \sim F(k, d - k + 1)$, where k is the dimension of the test and d is the total number of sampled PSUs minus the total number of strata.
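To see how much the design-degrees-of-freedom adjustment can matter, the two forms of the test can be evaluated side by side. This is only a sketch: the Wald statistic W = 12, dimension k = 3, and design degrees of freedom d = 20 are made-up values, and the scalar names are illustrative.

```stata
* Hypothetical values: Wald statistic, test dimension, and design df
scalar W = 12
scalar k = 3
scalar d = 20

* Unadjusted test (nosvyadjust): W/k ~ F(k, d)
scalar F_unadj = scalar(W)/scalar(k)
scalar p_unadj = Ftail(scalar(k), scalar(d), scalar(F_unadj))

* Default adjusted test: (d - k + 1)W/(kd) ~ F(k, d - k + 1)
scalar F_adj = (scalar(d) - scalar(k) + 1)*scalar(W)/(scalar(k)*scalar(d))
scalar p_adj = Ftail(scalar(k), scalar(d) - scalar(k) + 1, scalar(F_adj))

display "Unadjusted: F = " scalar(F_unadj) ", p = " scalar(p_unadj)
display "Adjusted:   F = " scalar(F_adj) ", p = " scalar(p_adj)
```

Here the adjusted statistic is 18(12)/60 = 3.6 rather than 4, and it is referred to an F distribution with fewer denominator degrees of freedom, so the adjusted test is the more conservative one.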

sort specifies that the table of individual contrasts be sorted by the contrast values within each term.

post causes contrast to behave like a Stata estimation (e-class) command. contrast posts the

vector of estimated contrasts along with the estimated variance–covariance matrix to e(), so you

can treat the estimated contrasts just as you would results from any other estimation command.

For example, you could use test to perform simultaneous tests of hypotheses on the contrasts,

or you could use lincom to create linear combinations.
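As a rough illustration of post, the sketch below fits the one-way cholesterol model used later in this entry and posts the reverse adjacent contrasts. It only lists the posted vector and matrix; the names that contrast gives the posted coefficients are not assumed here, so inspect e(b) before referring to them in test or lincom.

```stata
use https://www.stata-press.com/data/r18/cholesterol, clear
regress chol i.agegrp

* Post the four reverse adjacent contrasts and their VCE to e()
contrast ar.agegrp, post

* The contrasts are now the active estimation results
matrix list e(b)
matrix list e(V)
```

Because post replaces the estimation results from regress, refit the model (or save it first with estimates store) before requesting further contrasts from it.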

display_options: vsquish, nofvlabel, fvwrap(#), fvwrapon(style), cformat(%fmt), pformat(%fmt), sformat(%fmt), and nolstretch.

vsquish specifies that the blank space separating factor-variable terms or time-series–operated

variables from other variables in the model be suppressed.

nofvlabel displays factor-variable level values rather than attached value labels. This option

overrides the fvlabel setting; see [R] set showbaselevels.

fvwrap(#) specifies how many lines to allow when long value labels must be wrapped. Labels

requiring more than # lines are truncated. This option overrides the fvwrap setting; see [R] set

showbaselevels.

fvwrapon(style) specifies whether value labels that wrap will break at word boundaries or break

based on available space.

fvwrapon(word), the default, specifies that value labels break at word boundaries.

fvwrapon(width) specifies that value labels break based on available space.

This option overrides the fvwrapon setting; see [R] set showbaselevels.

cformat(%fmt) specifies how to format contrasts, standard errors, and confidence limits in the table of estimated contrasts.

pformat(%fmt) specifies how to format p-values in the table of estimated contrasts.

sformat(%fmt) specifies how to format test statistics in the table of estimated contrasts.

nolstretch specifies that the width of the table of estimated contrasts not be automatically

widened to accommodate longer variable names. The default, lstretch, is to automatically

widen the table of estimated contrasts up to the width of the Results window. Specifying

lstretch or nolstretch overrides the setting given by set lstretch. If set lstretch

has not been set, the default is lstretch. nolstretch is not shown in the dialog box.

eform_option specifies that the contrasts table be displayed in exponentiated form. $e^{\text{contrast}}$ is displayed rather than contrast. Standard errors and confidence intervals are also transformed. See [R] eform_option for the list of available options.

The following option is available with contrast but is not shown in the dialog box:

df(#) specifies that the t distribution with # degrees of freedom be used for computing p-values and confidence intervals. The default is to use e(df_r) degrees of freedom or the standard normal distribution if e(df_r) is missing.

Remarks and examples

Remarks are presented under the following headings:

Introduction

One-way models

Estimated cell means

Testing equality of cell means

Reference category contrasts

Reverse adjacent contrasts

Orthogonal polynomial contrasts

Two-way models

Estimated interaction cell means

Simple effects

Interaction effects

Main effects

Partial interaction effects

Three-way and higher-order models

Contrast operators

Differences from a reference level (r.)

Differences from the next level (a.)

Differences from the previous level (ar.)

Differences from the grand mean (g.)

Differences from the mean of subsequent levels (h.)

Differences from the mean of previous levels (j.)

Orthogonal polynomials (p. and q.)

User-defined contrasts

Empty cells

Empty cells, ANOVA style

Nested effects

Multiple comparisons

Unbalanced data

Using observed cell frequencies

Weighted contrast operators

Testing factor effects on slopes

Chow tests

Beyond linear models

Multiple equations

Video example

Introduction

contrast performs ANOVA-style tests of main effects, interactions, simple effects, and nested

effects. It can easily decompose these tests into constituent contrasts using either named contrasts

(codings) or user-specified contrasts. Comparing levels of factor variables—whether as main effects,

interactions, or simple effects—is as easy as adding a contrast operator to the variable. The operators

can compare each level with the previous level, each level with a reference level, each level with the

mean of previous levels, and more.

contrast tests and estimates contrasts. A contrast of the parameters $\mu_1, \mu_2, \ldots, \mu_p$ is a linear combination $\sum_i c_i \mu_i$ whose $c_i$ sum to zero. A difference of population means such as $\mu_1 - \mu_2$ is a contrast, as are most other comparisons of population or model quantities (Coster 2005). Some contrasts may be estimated with lincom, but contrast is much more powerful. contrast can handle multiple contrasts simultaneously, and the command’s contrast operators make it easy to specify complicated linear combinations.

Both the contrast operation and the creation of the margins for comparison can be performed as

though the data were balanced (typical for experimental designs) or using the observed frequencies

in the estimation sample (typical for observational studies). contrast can perform these analyses on

the results of almost all of Stata’s estimators, not just the linear-models estimators.

Most of contrast’s computations can be considered comparisons of estimated cell means from

a model fit. Tests of interactions are tests of whether the cell means for the interaction are all equal.

Tests of main effects are tests of whether the marginal cell means for the factor are all equal. More

focused comparisons of cell means (for example, is level 2 equal to level 1) are specified using

contrast operators. More formally, all of contrast’s computations are comparisons of conditional

expectations; cell means are one type of conditional expectation.

All contrasts can also easily be graphed; see [R] marginsplot.

For a discussion of contrasts and testing for linear models, see Searle and Gruber (2017) and

Searle (1997). For discussions specifically related to experimental design, see Winer, Brown, and

Michels (1991) and Milliken and Johnson (2009). Rosenthal, Rosnow, and Rubin (2000) focus on

contrasts with applications in behavioral sciences. Mitchell (2021, 2015) and Baldwin (2019) focus

on contrasts in Stata.

contrast is a flexible tool for understanding the effects of categorical covariates. If your model

contains categorical covariates, and especially if it contains interactions, you will want to use contrast.

One-way models

Suppose we have collected data on cholesterol levels for individuals from five age groups. To study

the effect of age group on cholesterol, we can begin by fitting a one-way model using regress:

. use https://www.stata-press.com/data/r18/cholesterol

(Artificial cholesterol data)

. label list ages

ages:

1 10--19

2 20--29

3 30--39

4 40--59

5 60--79

. regress chol i.agegrp

Source SS df MS Number of obs = 75

F(4, 70) = 35.02

Model 14943.3997 4 3735.84993 Prob > F = 0.0000

Residual 7468.21971 70 106.688853 R-squared = 0.6668

Adj R-squared = 0.6477

Total 22411.6194 74 302.859722 Root MSE = 10.329

chol Coefficient Std. err. t P>|t| [95% conf. interval]

agegrp

20--29 8.203575 3.771628 2.18 0.033 .6812991 15.72585

30--39 21.54105 3.771628 5.71 0.000 14.01878 29.06333

40--59 30.15067 3.771628 7.99 0.000 22.6284 37.67295

60--79 38.76221 3.771628 10.28 0.000 31.23993 46.28448

_cons 180.5198 2.666944 67.69 0.000 175.2007 185.8388

Estimated cell means

margins will show us the estimated cell means for each age group based on our fitted model:

. margins agegrp

Adjusted predictions Number of obs = 75

Model VCE: OLS

Expression: Linear prediction, predict()

Delta-method

Margin std. err. t P>|t| [95% conf. interval]

agegrp

10--19 180.5198 2.666944 67.69 0.000 175.2007 185.8388

20--29 188.7233 2.666944 70.76 0.000 183.4043 194.0424

30--39 202.0608 2.666944 75.76 0.000 196.7418 207.3799

40--59 210.6704 2.666944 78.99 0.000 205.3514 215.9895

60--79 219.282 2.666944 82.22 0.000 213.9629 224.601

We can graph those means with marginsplot:

. marginsplot

Variables that uniquely identify margins:

[Figure: Adjusted predictions of agegrp with 95% CIs. Vertical axis: Linear prediction. Horizontal axis: Age group (10–19, 20–29, 30–39, 40–59, 60–79).]

Testing equality of cell means

Are all the means equal? That is to say, is there an effect of age group on cholesterol level? We can

answer that by asking contrast to test whether the means of the age groups are identical.

. contrast agegrp

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp 4 35.02 0.0000

Denominator 70

The means are clearly different. We could have obtained this same test directly had we fit our model

using anova rather than regress.

. anova chol agegrp

Number of obs = 75 R-squared = 0.6668

Root MSE = 10.329 Adj R-squared = 0.6477

Source Partial SS df MS F Prob>F

Model 14943.4 4 3735.8499 35.02 0.0000

agegrp 14943.4 4 3735.8499 35.02 0.0000

Residual 7468.2197 70 106.68885

Total 22411.619 74 302.85972

Achieving a more direct test result is why we recommend using anova instead of regress for

models where our focus is on the categorical covariates. The models fit by anova and regress are

identical; they merely parameterize the effects differently. The results of contrast will be identical

regardless of which command is used to fit the model. If, however, we were fitting models whose

responses are nonlinear functions of the covariates, such as logistic regression, then there would be

no analogue to anova, and we would appreciate contrast’s ability to quickly test main effects and

interactions.
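The claim that contrast does not depend on the fitting command can be checked directly. A minimal sketch, using the same dataset and variables as above:

```stata
use https://www.stata-press.com/data/r18/cholesterol, clear

* Fit with regress, then test the main effect of age group
regress chol i.agegrp
contrast agegrp

* Refit with anova and repeat the same test
anova chol agegrp
contrast agegrp
```

Both contrast calls should report the same 4-degree-of-freedom test of agegrp with 70 denominator degrees of freedom that appears in the output shown earlier.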

Reference category contrasts

Now that we know that the overall effect of age group is statistically significant, we can explore

the effects of each age group. One way to do that is to use the reference category operator, r.:

. contrast r.agegrp

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp

(20--29 vs 10--19) 1 4.73 0.0330

(30--39 vs 10--19) 1 32.62 0.0000

(40--59 vs 10--19) 1 63.91 0.0000

(60--79 vs 10--19) 1 105.62 0.0000

Joint 4 35.02 0.0000

Denominator 70

Contrast Std. err. [95% conf. interval]

agegrp

(20--29 vs 10--19) 8.203575 3.771628 .6812991 15.72585

(30--39 vs 10--19) 21.54105 3.771628 14.01878 29.06333

(40--59 vs 10--19) 30.15067 3.771628 22.6284 37.67295

(60--79 vs 10--19) 38.76221 3.771628 31.23993 46.28448

The cell mean of each age group is compared against the base age group (ages 10–19). The first

table shows that each difference is significant. The second table gives an estimate and confidence

interval for each contrast. These are the comparisons that linear regression gives with a factor covariate

and no interactions. The contrasts are identical to the coefficients from our linear regression.

Reverse adjacent contrasts

We have far more flexibility with contrast. Age group is ordinal, so it is interesting to compare

each age group with the preceding age group (rather than against one reference group). We specify

that analysis by using the reverse adjacent operator, ar.:

. contrast ar.agegrp

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp

(20--29 vs 10--19) 1 4.73 0.0330

(30--39 vs 20--29) 1 12.51 0.0007

(40--59 vs 30--39) 1 5.21 0.0255

(60--79 vs 40--59) 1 5.21 0.0255

Joint 4 35.02 0.0000

Denominator 70

Contrast Std. err. [95% conf. interval]

agegrp

(20--29 vs 10--19) 8.203575 3.771628 .6812991 15.72585

(30--39 vs 20--29) 13.33748 3.771628 5.815204 20.85976

(40--59 vs 30--39) 8.60962 3.771628 1.087345 16.1319

(60--79 vs 40--59) 8.611533 3.771628 1.089257 16.13381

The 20–29 age group’s cholesterol level is 8.2 points higher than the 10–19 age group’s cholesterol

level; the 30–39 age group’s level is 13.3 points higher than the 20–29 age group’s level; and so on.

Each age group is statistically different from the preceding age group at the 5% level.
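A single reverse adjacent contrast is just a difference of two regression coefficients, so it can also be obtained with lincom. The sketch below compares the 30–39 group with the 20–29 group both ways. The scalar name diff_lincom is illustrative, and the use of ar3. to pick out the level-3 contrast assumes the op#. syntax indexes contrasts by level, as the a3.A example in the syntax notes suggests.

```stata
use https://www.stata-press.com/data/r18/cholesterol, clear
regress chol i.agegrp

* 30--39 vs 20--29 as a difference of regression coefficients
lincom 3.agegrp - 2.agegrp
scalar diff_lincom = r(estimate)

* The same comparison selected as one reverse adjacent contrast
contrast ar3.agegrp

display "lincom estimate: " scalar(diff_lincom)
```

The lincom estimate should match the (30--39 vs 20--29) row of the table above, about 13.34, which is 21.54105 minus 8.203575 from the regression output.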

Orthogonal polynomial contrasts

The relationship between age group and cholesterol level looked almost linear in our graph. We

can examine that relationship further by using the orthogonal polynomial operator, p.:

. contrast p.agegrp, noeffects

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp

(linear) 1 139.11 0.0000

(quadratic) 1 0.15 0.6962

(cubic) 1 0.37 0.5448

(quartic) 1 0.43 0.5153

Joint 4 35.02 0.0000

Denominator 70

Only the linear effect is statistically significant.

We can even perform the joint test that all effects beyond linear are zero. We do that by selecting

all polynomial contrasts above linear—that is, polynomial contrasts 2, 3, and 4.

. contrast p(2 3 4).agegrp, noeffects

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp

(quadratic) 1 0.15 0.6962

(cubic) 1 0.37 0.5448

(quartic) 1 0.43 0.5153

Joint 3 0.32 0.8129

Denominator 70

The joint test has three degrees of freedom and is clearly insignificant. A linear effect of age group

seems adequate for this model.
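The selection of polynomial contrasts can also be written as a range. As a small sketch, the numlist 2/4 is shorthand for 2 3 4, in the same way that p(1/2).B is used in the syntax notes to select the linear and quadratic effects:

```stata
use https://www.stata-press.com/data/r18/cholesterol, clear
regress chol i.agegrp

* Joint test of the quadratic, cubic, and quartic effects
contrast p(2/4).agegrp, noeffects
```

This should reproduce the 3-degree-of-freedom joint test shown above.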

Two-way models

Suppose we are investigating the effects of different dosages of a blood pressure medication and

believe that the effects may be different for men and women. We can fit the following ANOVA model

for bpchange, the change in diastolic blood pressure. Change is defined as the after measurement

minus the before measurement, so that negative values of bpchange correspond to decreases in blood

pressure.

. use https://www.stata-press.com/data/r18/bpchange

(Artificial blood pressure data)

. label list gender

gender:

1 Male

2 Female

. anova bpchange dose##gender

Number of obs = 30 R-squared = 0.9647

Root MSE = 1.4677 Adj R-squared = 0.9573

Source Partial SS df MS F Prob>F

Model 1411.9087 5 282.38174 131.09 0.0000

dose 963.48179 2 481.7409 223.64 0.0000

gender 355.11882 1 355.11882 164.85 0.0000

dose#gender 93.308093 2 46.654046 21.66 0.0000

Residual 51.699253 24 2.1541355

Total 1463.608 29 50.46924

Estimated interaction cell means

Everything is significant, including the interaction. So increasing dosage is effective and differs by

gender. Let’s explore the effects. First, let’s look at the estimated cell mean of blood pressure change

for each combination of gender and dosage.

. margins dose#gender

Adjusted predictions Number of obs = 30

Expression: Linear prediction, predict()

Delta-method

Margin std. err. t P>|t| [95% conf. interval]

dose#gender

250#Male -7.35384 .6563742 -11.20 0.000 -8.708529 -5.99915

250#Female 3.706567 .6563742 5.65 0.000 2.351877 5.061257

500#Male -13.73386 .6563742 -20.92 0.000 -15.08855 -12.37917

500#Female -6.584167 .6563742 -10.03 0.000 -7.938857 -5.229477

750#Male -16.82108 .6563742 -25.63 0.000 -18.17576 -15.46639

750#Female -14.38795 .6563742 -21.92 0.000 -15.74264 -13.03326

Our data are balanced, so these results will not be affected by the many different ways that

margins can compute cell means. Moreover, because our model consists of only dose and gender,

these are also the point estimates for each combination.

We can graph the results:

. marginsplot

Variables that uniquely identify margins:

[Figure: Adjusted predictions of dose#gender with 95% CIs. Vertical axis: Linear prediction. Horizontal axis: Dosage in milligrams per day (250, 500, 750). Separate lines for Male and Female.]

The lines are not parallel, which we expected because the interaction term is significant. Males

experience a greater decline in blood pressure at every dosage level, but the effect of increasing

dosage is greater for females. In fact, it is not clear if we can tell the difference between male and

female response at the maximum dosage.

Simple effects

We can contrast the male and female responses within dosage to see the simple effects of gender.

Because there are only two levels in gender, the choice of contrast operator is largely irrelevant.

Aside from orthogonal polynomials, all operators produce the same estimates, although the effects

can change signs.

. contrast r.gender@dose

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

gender@dose

(Female vs Male) 250 1 141.97 0.0000

(Female vs Male) 500 1 59.33 0.0000

(Female vs Male) 750 1 6.87 0.0150

Joint 3 69.39 0.0000

Denominator 24

Contrast Std. err. [95% conf. interval]

gender@dose

(Female vs Male) 250 11.06041 .9282533 9.144586 12.97623

(Female vs Male) 500 7.149691 .9282533 5.23387 9.065512

(Female vs Male) 750 2.433124 .9282533 .5173031 4.348944

The effect for females is about 11 points higher than for males at a dosage of 250, and that shrinks

to 2.4 points higher at the maximum dosage of 750.
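As a quick arithmetic check, each simple effect of gender is the difference between the Female and Male cell means at that dosage. The sketch below recomputes the three contrasts by hand from the rounded margins estimates reported earlier, so the values (about 11.06, 7.15, and 2.43) should agree with the contrast table only up to rounding.

```stata
* Simple effects of gender within dose, recomputed by hand from the
* cell means reported by margins dose#gender (Female minus Male).

* dose = 250
display 3.706567 - (-7.35384)

* dose = 500
display -6.584167 - (-13.73386)

* dose = 750
display -14.38795 - (-16.82108)
```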

We can form the simple effects the other way by contrasting the effect of dose at each level of

gender:

. contrast ar.dose@gender

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

dose@gender

(500 vs 250) Male 1 47.24 0.0000

(500 vs 250) Female 1 122.90 0.0000

(750 vs 500) Male 1 11.06 0.0028

(750 vs 500) Female 1 70.68 0.0000

Joint 4 122.65 0.0000

Denominator 24

Contrast Std. err. [95% conf. interval]

dose@gender

(500 vs 250) Male -6.380018 .9282533 -8.295839 -4.464198

(500 vs 250) Female -10.29073 .9282533 -12.20655 -8.374914

(750 vs 500) Male -3.087217 .9282533 -5.003038 -1.171396

(750 vs 500) Female -7.803784 .9282533 -9.719605 -5.887963

Here we use the ar. reverse adjacent contrast operator so that first we are comparing a dosage

of 500 with a dosage of 250, and then we are comparing 750 with 500. We see that increasing the

dosage has a larger effect on females—10.3 points when going from 250 to 500 compared with 6.4

points for males, and 7.8 points when going from 500 to 750 versus 3.1 points for males.

Interaction effects

By specifying contrast operators on both factors, we can decompose the interaction effect into

separate interaction contrasts.

. contrast ar.dose#r.gender

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

dose#gender

(500 vs 250) (Female vs Male) 1 8.87 0.0065

(750 vs 500) (Female vs Male) 1 12.91 0.0015

Joint 2 21.66 0.0000

Denominator 24

Contrast Std. err. [95% conf. interval]

dose#gender

(500 vs 250)

(Female vs Male) -3.910716 1.312748 -6.620095 -1.201336

(750 vs 500)

(Female vs Male) -4.716567 1.312748 -7.425947 -2.007187

Look for departures from zero to indicate an interaction effect between dose and gender. Both

contrasts are significantly different from zero. Of course, we already knew the overall interaction

was significant from our ANOVA results. The effect of increasing dose from 250 to 500 is 3.9 points

greater in females than in males, and the effect of increasing dose from 500 to 750 is 4.7 points

greater in females than in males. The confidence intervals for both estimates easily exclude zero,

meaning that there is an interaction effect.

The joint test of these two interaction effects reproduces the test of interaction effects in the anova

output. We can see that the F statistic of 21.66 matches the statistic from our original ANOVA results.
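Each interaction contrast can also be read as a difference of simple effects: the effect of a dose increase for females minus the same effect for males. The following sketch does that subtraction by hand, using the rounded estimates from the contrast ar.dose@gender output, so it should match the interaction contrasts only up to rounding.

```stata
* Interaction contrasts as differences of the simple effects of dose
* (Female minus Male), using the estimates from contrast ar.dose@gender.

* (500 vs 250) (Female vs Male)
display -10.29073 - (-6.380018)

* (750 vs 500) (Female vs Male)
display -7.803784 - (-3.087217)
```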

Main effects

We can perform tests of the main effects by listing each variable individually in contrast.

. contrast dose gender

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

dose 2 223.64 0.0000

gender 1 164.85 0.0000

Denominator 24

The F tests are equivalent to the tests of main effects in the anova output. This is true only for

linear models. contrast provides an easy way to obtain main effects and other ANOVA-style tests

for models whose responses are not linear in the parameters—logistic, probit, glm, etc.

If we include contrast operators on the variables, we can also decompose the main effects into

individual contrasts:

. contrast ar.dose r.gender

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

dose

(500 vs 250) 1 161.27 0.0000

(750 vs 500) 1 68.83 0.0000

Joint 2 223.64 0.0000

gender 1 164.85 0.0000

Denominator 24

Contrast Std. err. [95% conf. interval]

dose

(500 vs 250) -8.335376 .6563742 -9.690066 -6.980687

(750 vs 500) -5.4455 .6563742 -6.80019 -4.090811

gender

(Female vs Male) 6.881074 .5359273 5.774974 7.987173

By specifying the ar. operator on dose, we decompose the main effect for dose into two one-degree-

of-freedom contrasts, comparing the marginal mean of blood pressure change for each dosage level

with that of the previous level. Because gender has only two levels, we cannot decompose this main

effect any further. However, specifying a contrast operator on gender allowed us to calculate the

difference in the marginal means for women and men.
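If a test for each individual contrast is more useful than its confidence interval, the pveffects and effects options listed in Quick start can be added to the same specification. This is a minimal sketch that assumes the anova bpchange dose##gender results are still the active estimation results.

```stata
* Same main-effect decomposition, but report a test statistic and
* p-value for each contrast instead of its confidence interval.
contrast ar.dose r.gender, pveffects

* Report both the tests and the confidence intervals in one table.
contrast ar.dose r.gender, effects
```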

Partial interaction effects

At this point, we have looked at the total interaction effects and at the main effects of each variable.

The partial interaction effects are a midpoint between these two types of effects where we collect the

individual interaction effects along the levels of one of the variables and perform a joint test of those

interactions. If we think of the interaction effects as forming a table, with the levels of one factor

variable forming the rows and the levels of the other forming the columns, partial interaction effects

are joint tests of the interactions in a row or a column. To perform these tests, we specify a contrast

operator on only one of the variables in our interaction. For this particular model, these are not very

interesting because our variables have only two and three levels. Therefore, the tests of the partial

interaction effects reproduce the tests that we obtained for the total interaction effects. We specify a

contrast operator only on dose to decompose the overall test for interaction effects into joint tests

for each ar.dose contrast:

. contrast ar.dose#gender

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

dose#gender

(500 vs 250) (joint) 1 8.87 0.0065

(750 vs 500) (joint) 1 12.91 0.0015

Joint 2 21.66 0.0000

Denominator 24

The first row is a joint test of all the interaction effects involving the (500 vs 250) comparison

of dosages. The second row is a joint test of all the interaction effects involving the (750 vs 500)

comparison. If we look back at our output in Interaction effects, we can see that there was only one of

each of these interaction effects. Therefore, each test labeled (joint) has only one degree of freedom.

We could have instead included a contrast operator on gender to compute the partial interaction

effects along the other dimension:

. contrast dose#r.gender

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

dose#gender 2 21.66 0.0000

Denominator 24

Here we obtain a joint test of all the interaction effects involving the (Female vs Male) comparison

for gender. Because gender has only two levels, the (Female vs Male) contrast is the only reference

category contrast possible. Therefore, we obtain a single joint test of all the interaction effects.

Clearly, the partial interaction effects are not interesting for this particular model. However, if our

factors had more levels, the partial interaction effects would produce tests that are not available in

the total interaction effects. For example, if our model included factors for four dosage levels and

three races, then typing

. contrast ar.dose#race

would produce three joint tests, one for each of the reverse adjacent contrasts for dosage. Each of

these tests would be a two-degree-of-freedom test because race has three levels.

Three-way and higher-order models

All the contrasts and tests that we reviewed above for two-way models can be used with models

that have more terms. For instance, we could fit a three-way full factorial model by using the anova

command:

. use https://www.stata-press.com/data/r18/cont3way

. anova y race##sex##group

We could then test the simple effects of race within each level of the interaction between sex

and group:

. contrast race@sex#group

To see the reference category contrasts that decompose these simple effects, type

. contrast r.race@sex#group

We could test the three-way interaction effects by typing

. contrast race#sex#group

or the interaction effects for the interaction of race and sex by typing

. contrast race#sex

To see the individual reference category contrasts that decompose this interaction effect, type

. contrast r.race#r.sex

We could even obtain joint tests for the interaction of race and sex within each level of group

by typing

. contrast race#sex@group

For tests of the main effects of each factor, we can type

. contrast race sex group

We can calculate the individual reference category contrasts that decompose these main effects:

. contrast r.race r.sex r.group

For the partial interaction effects, we could type

. contrast r.race#group

to obtain a joint test of the two-way interaction effects of race and group for each of the individual

r.race contrasts.

We could type

. contrast r.race#sex#group

to obtain a joint test of all the three-way interaction terms for each of the individual r.race contrasts.

Contrast operators

contrast recognizes a set of contrast operators that are used to specify commonly used contrasts.

When these operators are used, contrast will report a test for each individual contrast in addition

to the joint test for the term. We have already seen a few of these, like r. and ar., in the previous

examples. Here we will take a closer look at each of the unweighted operators.

Here we use the cholesterol dataset and the one-way ANOVA model from the example in One-way

models:

. use https://www.stata-press.com/data/r18/cholesterol

(Artificial cholesterol data)

. anova chol agegrp

(output omitted )

The margins command reports the estimated cell means, $\widehat{\mu}_1, \ldots, \widehat{\mu}_5$, for each of the five age groups.

. margins agegrp

Adjusted predictions Number of obs = 75

Expression: Linear prediction, predict()

Delta-method

Margin std. err. t P>|t| [95% conf. interval]

agegrp

10--19 180.5198 2.666944 67.69 0.000 175.2007 185.8388

20--29 188.7233 2.666944 70.76 0.000 183.4043 194.0424

30--39 202.0608 2.666944 75.76 0.000 196.7418 207.3799

40--59 210.6704 2.666944 78.99 0.000 205.3514 215.9895

60--79 219.282 2.666944 82.22 0.000 213.9629 224.601

Contrast operators provide an easy way to make certain types of comparisons of these cell means.

We use the ordinal factor agegrp to demonstrate these operators because some types of contrasts are

meaningful only when the levels of the factor have a natural ordering. We demonstrate these contrast

operators using a one-way model; however, they are equally applicable to main effects, simple effects,

and interactions for more complicated models.

Differences from a reference level (r.)

The r. operator specifies that each level of the attached factor variable be compared with a

reference level. These are referred to as reference-level or reference-category contrasts (or effects),

and r. is the reference-level operator.

In the following, we use the r. operator to test the effect of each category of age group when

that category is compared with a reference category.

. contrast r.agegrp

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp

(20--29 vs 10--19) 1 4.73 0.0330

(30--39 vs 10--19) 1 32.62 0.0000

(40--59 vs 10--19) 1 63.91 0.0000

(60--79 vs 10--19) 1 105.62 0.0000

Joint 4 35.02 0.0000

Denominator 70

Contrast Std. err. [95% conf. interval]

agegrp

(20--29 vs 10--19) 8.203575 3.771628 .6812991 15.72585

(30--39 vs 10--19) 21.54105 3.771628 14.01878 29.06333

(40--59 vs 10--19) 30.15067 3.771628 22.6284 37.67295

(60--79 vs 10--19) 38.76221 3.771628 31.23993 46.28448

In the first table, the row labeled (20--29 vs 10--19) is a test of $\mu_2 = \mu_1$, a test that the mean cholesterol levels for the 10–19 age group and the 20–29 age group are equal. The tests in the next three rows are defined similarly. The row labeled Joint provides the joint test for these four hypotheses, which is just the test of the main effects of age group.

The second table provides the contrasts of each category with the reference category along with confidence intervals. The contrast in the row labeled (20--29 vs 10--19) is the difference in the cell means of the second age group and the first age group, $\widehat{\mu}_2 - \widehat{\mu}_1$.

The first level of a factor is the default reference level, but we can specify a different reference

level by using the b. operator; see [U] 11.4.3.2 Base levels. Here we use the last age group, (60--79),

instead of the first as the reference category. We also include the nowald option so that only the

table of contrasts and their confidence intervals is produced.

. contrast rb5.agegrp, nowald

Contrasts of marginal linear predictions

Margins: asbalanced

Contrast Std. err. [95% conf. interval]

agegrp

(10--19 vs 60--79) -38.76221 3.771628 -46.28448 -31.23993

(20--29 vs 60--79) -30.55863 3.771628 -38.08091 -23.03636

(30--39 vs 60--79) -17.22115 3.771628 -24.74343 -9.698877

(40--59 vs 60--79) -8.611533 3.771628 -16.13381 -1.089257

Now, the first row is labeled (10--19 vs 60--79) and is the difference in the cell means of the first

and fifth age groups.
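Any other level can serve as the reference in the same way. As a small sketch, assuming the anova chol agegrp results are still active and that level 3 of agegrp is the 30--39 group, the following compares every age group with the middle one.

```stata
* Use the third age group (30--39) as the reference category and
* show only the table of contrasts and confidence intervals.
contrast rb3.agegrp, nowald
```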

Differences from the next level (a.)

The a. operator specifies that each level of the attached factor variable be compared with the next

level. These are referred to as adjacent contrasts (or effects), and a. is the adjacent operator. This

operator is meaningful only with factor variables that have a natural ordering in the levels.

We can use the a. operator to perform tests that each level of age group differs from the next

adjacent level.

. contrast a.agegrp

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp

(10--19 vs 20--29) 1 4.73 0.0330

(20--29 vs 30--39) 1 12.51 0.0007

(30--39 vs 40--59) 1 5.21 0.0255

(40--59 vs 60--79) 1 5.21 0.0255

Joint 4 35.02 0.0000

Denominator 70

Contrast Std. err. [95% conf. interval]

agegrp

(10--19 vs 20--29) -8.203575 3.771628 -15.72585 -.6812991

(20--29 vs 30--39) -13.33748 3.771628 -20.85976 -5.815204

(30--39 vs 40--59) -8.60962 3.771628 -16.1319 -1.087345

(40--59 vs 60--79) -8.611533 3.771628 -16.13381 -1.089257

In the first table, the row labeled (10--19 vs 20--29) tests the effect of belonging to the 10–19 age

group instead of the 20–29 age group. Likewise, the rows labeled (20--29 vs 30--39), (30--39 vs 40--

59), and (40--59 vs 60--79) are tests for the effects of being in the younger of the two age groups

instead of the older one.

In the second table, the contrast in the row labeled (10--19 vs 20--29) is the difference in the cell means of the first and second age groups, $\widehat{\mu}_1 - \widehat{\mu}_2$. The contrasts in the other rows are defined similarly.

Differences from the previous level (ar.)

The ar. operator specifies that each level of the attached factor variable be compared with the

previous level. These are referred to as reverse adjacent contrasts (or effects), and ar. is the reverse

adjacent operator. As with the a. operator, this operator is meaningful only with factor variables that

have a natural ordering in the levels.

In the following, we use the ar. operator to report tests for the individual reverse adjacent effects

of agegrp.

. contrast ar.agegrp

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp

(20--29 vs 10--19) 1 4.73 0.0330

(30--39 vs 20--29) 1 12.51 0.0007

(40--59 vs 30--39) 1 5.21 0.0255

(60--79 vs 40--59) 1 5.21 0.0255

Joint 4 35.02 0.0000

Denominator 70

Contrast Std. err. [95% conf. interval]

agegrp

(20--29 vs 10--19) 8.203575 3.771628 .6812991 15.72585

(30--39 vs 20--29) 13.33748 3.771628 5.815204 20.85976

(40--59 vs 30--39) 8.60962 3.771628 1.087345 16.1319

(60--79 vs 40--59) 8.611533 3.771628 1.089257 16.13381

Here the Wald tests in the first table for the individual reverse adjacent effects are equivalent to the tests for the adjacent effects in the previous example. However, if we compare values of the contrasts in the bottom tables, we see the difference between the a. and the ar. operators. This time, the contrast in the first row is labeled (20--29 vs 10--19) and is the difference in the cell means of the second and first age groups, $\widehat{\mu}_2 - \widehat{\mu}_1$. This is the estimated effect of belonging to the 20–29 age group instead of the 10–19 age group. The remaining rows make similar comparisons with the previous level.

Differences from the grand mean (g.)

The g. operator specifies that each level of a factor variable be compared with the grand mean of

all levels. For this operator, the grand mean is computed using a simple average of the cell means.

Here are the grand mean effects of agegrp:

. contrast g.agegrp

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp

(10--19 vs mean) 1 68.42 0.0000

(20--29 vs mean) 1 23.36 0.0000

(30--39 vs mean) 1 0.58 0.4506

(40--59 vs mean) 1 19.08 0.0000

(60--79 vs mean) 1 63.65 0.0000

Joint 4 35.02 0.0000

Denominator 70

Contrast Std. err. [95% conf. interval]

agegrp

(10--19 vs mean) -19.7315 2.385387 -24.48901 -14.974

(20--29 vs mean) -11.52793 2.385387 -16.28543 -6.770423

(30--39 vs mean) 1.809552 2.385387 -2.947953 6.567057

(40--59 vs mean) 10.41917 2.385387 5.661668 15.17668

(60--79 vs mean) 19.0307 2.385387 14.2732 23.78821

There are five age groups in our estimation sample. Thus, the row labeled (10--19 vs mean) tests $\mu_1 = (\mu_1 + \mu_2 + \mu_3 + \mu_4 + \mu_5)/5$. The row labeled (20--29 vs mean) tests $\mu_2 = (\mu_1 + \mu_2 + \mu_3 + \mu_4 + \mu_5)/5$. The remaining rows perform similar tests for the third, fourth, and fifth age groups. In our example, the means for all age groups except the 30–39 age group are statistically different from the grand mean.
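To see what the g. operator computes, the first grand-mean contrast can be written out as a user-defined contrast in the {varname coefficients} syntax. The sketch below assumes the anova chol agegrp results are still active; under that assumption it should reproduce the (10--19 vs mean) row.

```stata
* (10--19 vs mean) written as a user-defined contrast:
* mu_1 - (mu_1 + ... + mu_5)/5 = 0.8*mu_1 - 0.2*(mu_2 + ... + mu_5)
contrast {agegrp .8 -.2 -.2 -.2 -.2}
```

The coefficients sum to zero, which is what makes this linear combination a contrast.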

Differences from the mean of subsequent levels (h.)

The h. operator specifies that each level of the attached factor variable be compared with the mean

of subsequent levels. These are referred to as Helmert contrasts (or effects), and h. is the Helmert

operator. For this operator, the mean is computed using a simple average of the cell means. This

operator is meaningful only with factor variables that have a natural ordering in the levels.

Here are the Helmert contrasts for agegrp:

. contrast h.agegrp

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp

(10--19 vs >10--19) 1 68.42 0.0000

(20--29 vs >20--29) 1 50.79 0.0000

(30--39 vs >30--39) 1 15.63 0.0002

(40--59 vs 60--79) 1 5.21 0.0255

Joint 4 35.02 0.0000

Denominator 70

Contrast Std. err. [95% conf. interval]

agegrp

(10--19 vs >10--19) -24.66438 2.981734 -30.61126 -18.7175

(20--29 vs >20--29) -21.94774 3.079522 -28.08965 -15.80583

(30--39 vs >30--39) -12.91539 3.266326 -19.42987 -6.400905

(40--59 vs 60--79) -8.611533 3.771628 -16.13381 -1.089257

The row labeled (10--19 vs >10--19) tests $\mu_1 = (\mu_2 + \mu_3 + \mu_4 + \mu_5)/4$, that is, that the cell mean for the youngest age group is equal to the average of the cell means for the older age groups. The row labeled (20--29 vs >20--29) tests $\mu_2 = (\mu_3 + \mu_4 + \mu_5)/3$. The tests in the other rows are defined similarly.

Differences from the mean of previous levels (j.)

The j. operator specifies that each level of the attached factor variable be compared with the

mean of the previous levels. These are referred to as reverse Helmert contrasts (or effects), and j.

is the reverse Helmert operator. For this operator, the mean is computed using a simple average of

the cell means. This operator is meaningful only with factor variables that have a natural ordering in

the levels.

Here are the reverse Helmert contrasts of agegrp:

. contrast j.agegrp

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp

(20--29 vs 10--19) 1 4.73 0.0330

(30--39 vs <30--39) 1 28.51 0.0000

(40--59 vs <40--59) 1 43.18 0.0000

(60--79 vs <60--79) 1 63.65 0.0000

Joint 4 35.02 0.0000

Denominator 70

Contrast Std. err. [95% conf. interval]

agegrp

(20--29 vs 10--19) 8.203575 3.771628 .6812991 15.72585

(30--39 vs <30--39) 17.43927 3.266326 10.92479 23.95375

(40--59 vs <40--59) 20.2358 3.079522 14.09389 26.37771

(60--79 vs <60--79) 23.78838 2.981734 17.8415 29.73526

The row labeled (20--29 vs 10--19) tests $\mu_2 = \mu_1$, that is, that the cell means for the 20–29 and the 10–19 age groups are equal. The row labeled (30--39 vs <30--39) tests $\mu_3 = (\mu_1 + \mu_2)/2$, that is, that the cell mean for the 30–39 age group is equal to the average of the cell means for the 10–19 and 20–29 age groups. The tests in the remaining rows are defined similarly.
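The same hypothesis can be stated directly as a user-defined contrast. This is a sketch that assumes the anova chol agegrp results are still active; under that assumption it should reproduce the (30--39 vs <30--39) row.

```stata
* (30--39 vs <30--39) written as a user-defined contrast:
* mu_3 - (mu_1 + mu_2)/2
contrast {agegrp -.5 -.5 1 0 0}
```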

Orthogonal polynomials (p. and q.)

The p. and q. operators specify that orthogonal polynomials be applied to the attached factor

variable. Orthogonal polynomial contrasts allow us to partition the effects of a factor variable into

linear, quadratic, cubic, and higher-order polynomial components. The p. operator applies orthogonal

polynomials using the values of the factor variable. The q. operator applies orthogonal polynomials

using the level indices. If the level values of the factor variable are equally spaced, as with our

agegrp variable, then the p. and q. operators yield the same result. These operators are meaningful

only with factor variables that have a natural ordering in the levels.

Because agegrp has five levels, contrast can test the linear, quadratic, cubic, and quartic effects

of agegrp.

. contrast p.agegrp, noeffects

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agegrp

(linear) 1 139.11 0.0000

(quadratic) 1 0.15 0.6962

(cubic) 1 0.37 0.5448

(quartic) 1 0.43 0.5153

Joint 4 35.02 0.0000

Denominator 70

The row labeled (linear) tests the linear effect of agegrp, the only effect that appears to be

significant in this case.

The labels for our agegrp variable show the age ranges that correspond to each level.

. label list ages

ages:

1 10--19

2 20--29

3 30--39

4 40--59

5 60--79

Notice that these groups do not have equal widths. Now, let’s refit our model using the agemidpt

variable. The values of agemidpt indicate the midpoint of each age group that was defined by the

agegrp variable and are, therefore, not equally spaced.

. anova chol agemidpt

Number of obs = 75 R-squared = 0.6668

Root MSE = 10.329 Adj R-squared = 0.6477

Source Partial SS df MS F Prob>F

Model 14943.4 4 3735.8499 35.02 0.0000

agemidpt 14943.4 4 3735.8499 35.02 0.0000

Residual 7468.2197 70 106.68885

Total 22411.619 74 302.85972

Now if we use the q. operator, we will obtain the same results as above because the level indices

of agemidpt are equivalent to the values of agegrp.

. contrast q.agemidpt, noeffects

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agemidpt

(linear) 1 139.11 0.0000

(quadratic) 1 0.15 0.6962

(cubic) 1 0.37 0.5448

(quartic) 1 0.43 0.5153

Joint 4 35.02 0.0000

Denominator 70

However, if we use the p. operator, we will instead fit an orthogonal polynomial to the midpoint

values.

. contrast p.agemidpt, noeffects

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

agemidpt

(linear) 1 133.45 0.0000

(quadratic) 1 5.40 0.0230

(cubic) 1 0.05 0.8198

(quartic) 1 1.16 0.2850

Joint 4 35.02 0.0000

Denominator 70

Using the values of the midpoints, the quadratic effect is also significant at the 5% level.

Technical note

We used the noeffects option when working with orthogonal polynomial contrasts. Apart from

perhaps the sign of the contrast, the values of the individual contrasts are not meaningful for orthogonal

polynomial contrasts. In addition, many textbooks provide tables with contrast coefficients that can be

used to compute orthogonal polynomial contrasts where the levels of a factor are equally spaced. If

we use these coefficients and calculate the contrasts manually with user-defined contrasts, as described

below, the Wald tests for the polynomial terms will be equivalent, but the values of the individual

contrasts will not necessarily match those that we obtain when using the polynomial contrast operator.

When we use one of these contrast operators, an algorithm is used to calculate the coefficients of the

polynomial contrast that will allow for unequal spacing in the levels of the factor as well as in the

weights for the cell frequencies (when using pw. or qw.), as described in Methods and formulas.
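To illustrate the point about textbook coefficients, the sketch below refits the one-way model on agegrp and supplies the standard tabled orthogonal polynomial coefficients for five equally spaced levels as user-defined contrasts. The coefficient sets are the usual textbook values and are not taken from this entry. The four Wald tests are expected to agree with those from p.agegrp, although the contrast values themselves need not.

```stata
* Refit the one-way model on agegrp, whose levels 1-5 are equally spaced.
quietly anova chol agegrp

* Textbook orthogonal polynomial coefficients for five equally spaced
* levels, in the order linear, quadratic, cubic, quartic.
contrast {agegrp -2 -1  0  1 2} ///
         {agegrp  2 -1 -2 -1 2} ///
         {agegrp -1  2  0 -2 1} ///
         {agegrp  1 -4  6 -4 1}, noeffects
```

The noeffects option is kept because only the tests, not the contrast values, are comparable across the two parameterizations.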

User-defined contrasts

In the previous examples, we performed tests using contrast operators. When there is not a contrast

operator available to calculate the contrast in which we are interested, we can specify custom contrasts.

Here we fit a one-way model for cholesterol on the factor race, which has three levels:

. label list race

race:

1 Black

2 White

3 Other

. anova chol race

Number of obs = 75 R-squared = 0.0299

Root MSE = 17.3775 Adj R-squared = 0.0029

Source Partial SS df MS F Prob>F

Model 669.27823 2 334.63912 1.11 0.3357

race 669.27823 2 334.63912 1.11 0.3357

Residual 21742.341 72 301.97696

Total 22411.619 74 302.85972

margins calculates the estimated cell mean cholesterol level for each race:

. margins race

Adjusted predictions Number of obs = 75

Expression: Linear prediction, predict()

Delta-method

Margin std. err. t P>|t| [95% conf. interval]

race

Black 204.4279 3.475497 58.82 0.000 197.4996 211.3562

White 197.6132 3.475497 56.86 0.000 190.6849 204.5415

Other 198.7127 3.475497 57.18 0.000 191.7844 205.6409

Suppose we want to test the following linear combination:

$$\sum_{i=1}^{3} c_i \mu_i$$

where $\mu_i$ is the cell mean of chol when race is equal to its $i$th level (the means estimated using margins above). Assuming the $c_i$ elements sum to zero, this linear combination is a contrast. We can specify this type of custom contrast by using the following syntax:

{race $c_1$ $c_2$ $c_3$}

The null hypothesis for the test of the main effects of race is

$$H_{0_{\text{race}}}\colon \mu_1 = \mu_2 = \mu_3$$

Although $H_{0_{\text{race}}}$ can be tested using any of several different contrasts on the cell means, we will test it by comparing the second and third cell means with the first. To test that the cell means for blacks and whites are equal, $\mu_1 = \mu_2$, we can specify the contrast

{race -1 1 0}

To test that the cell means for blacks and other races are equal, $\mu_1 = \mu_3$, we can specify the contrast

{race -1 0 1}

We can use both in a single call to contrast.

. contrast {race -1 1 0} {race -1 0 1}

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

race

(1) 1 1.92 0.1699

(2) 1 1.35 0.2488

Joint 2 1.11 0.3357

Denominator 72

Contrast Std. err. [95% conf. interval]

race

(1) -6.814717 4.915095 -16.61278 2.983345

(2) -5.715261 4.915095 -15.51332 4.082801

The row labeled (1) is the test for $\mu_1 = \mu_2$, the first specified contrast. The row labeled (2) is the test for $\mu_1 = \mu_3$, the second specified contrast. The row labeled Joint is the overall test for the main effects of race.
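The coefficients need not be limited to pairwise comparisons. As a sketch, assuming the anova chol race results are still active, the following compares the cell mean for blacks with the average of the cell means for the other two groups. Given the margins reported above, the estimate should be roughly 6.26.

```stata
* Black versus the average of White and Other:
* mu_1 - (mu_2 + mu_3)/2
contrast {race 1 -.5 -.5}
```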

Now, let’s fit a model with two factors, race and age group:

. anova chol race##agegrp

Number of obs = 75 R-squared = 0.7524

Root MSE = 9.61785 Adj R-squared = 0.6946

Source Partial SS df MS F Prob>F

Model 16861.438 14 1204.3884 13.02 0.0000

race 669.27823 2 334.63912 3.62 0.0329

agegrp 14943.4 4 3735.8499 40.39 0.0000

race#agegrp 1248.7601 8 156.09501 1.69 0.1201

Residual 5550.1814 60 92.503024

Total 22411.619 74 302.85972

The null hypothesis for the test of the main effects of race is now

$$H_{0_{\text{race}}}\colon \mu_{1\cdot} = \mu_{2\cdot} = \mu_{3\cdot}$$

where $\mu_{i\cdot}$ is the marginal mean of chol when race is equal to its $i$th level.

We can use the same syntax as above to perform this test by specifying contrasts on the marginal

means of race:

. contrast {race -1 1 0} {race -1 0 1}

Contrasts of marginal linear predictions

Margins: asbalanced

df F P>F

race

(1) 1 6.28 0.0150

(2) 1 4.41 0.0399

Joint 2 3.62 0.0329

Denominator 60

Contrast Std. err. [95% conf. interval]

race

(1) -6.814717 2.720339 -12.2562 -1.37323

(2) -5.715261 2.720339 -11.15675 -.2737739

Custom contrasts may be specified on the cell means of interactions, too. Here we use margins

to calculate the mean of chol for each cell in the interaction of race and agegrp:

. margins race#agegrp

Adjusted predictions Number of obs = 75

Expression: Linear prediction, predict()

Delta-method

Margin std. err. t P>|t| [95% conf. interval]

race#agegrp

Black#10--19 179.2309 4.301233 41.67 0.000 170.6271 187.8346

Black#20--29 196.4777 4.301233 45.68 0.000 187.874 205.0814

Black#30--39 210.6694 4.301233 48.98 0.000 202.0656 219.2731

Black#40--59 214.097 4.301233 49.78 0.000 205.4933 222.7008

Black#60--79 221.6646 4.301233 51.54 0.000 213.0609 230.2684

White#10--19 186.0727 4.301233 43.26 0.000 177.469 194.6765

White#20--29 184.6714 4.301233 42.93 0.000 176.0676 193.2751

White#30--39 196.2633 4.301233 45.63 0.000 187.6595 204.867

White#40--59 209.9953 4.301233 48.82 0.000 201.3916 218.5991

White#60--79 211.0633 4.301233 49.07 0.000 202.4595 219.667

Other#10--19 176.2556 4.301233 40.98 0.000 167.6519 184.8594

Other#20--29 185.0209 4.301233 43.02 0.000 176.4172 193.6247

Other#30--39 199.2498 4.301233 46.32 0.000 190.646 207.8535

Other#40--59 207.9189 4.301233 48.34 0.000 199.3152 216.5227

Other#60--79 225.118 4.301233 52.34 0.000 216.5143 233.7218

Now, we are interested in testing the following linear combination of these cell means:

$$\sum_{i=1}^{3} \sum_{j=1}^{5} c_{ij} \mu_{ij}$$

We can specify this type of custom contrast using the following syntax:

{race#agegrp $c_{11}$ $c_{12}$ ... $c_{15}$ $c_{21}$ $c_{22}$ ... $c_{25}$ $c_{31}$ $c_{32}$ ... $c_{35}$}

Because the marginal means of chol for each level of race are linear combinations of the cell means, we can compose the test for the main effects of race in terms of the cell means directly. The constraint that the marginal means for blacks and whites are equal, $\mu_{1\cdot} = \mu_{2\cdot}$, translates to the following constraint on the cell means:

$$\frac{1}{5}(\mu_{11} + \mu_{12} + \mu_{13} + \mu_{14} + \mu_{15}) = \frac{1}{5}(\mu_{21} + \mu_{22} + \mu_{23} + \mu_{24} + \mu_{25})$$

Ignoring the common factor, we can specify this contrast as

{race#agegrp -1 -1 -1 -1 -1 1 1 1 1 1 0 0 0 0 0}

contrast will fill in the trailing zeros for us if we neglect to specify them, so

{race#agegrp -1 -1 -1 -1 -1 1 1 1 1 1}

is also allowed. The other constraint, $\mu_{1\cdot} = \mu_{3\cdot}$, translates to

$$\frac{1}{5}(\mu_{11} + \mu_{12} + \mu_{13} + \mu_{14} + \mu_{15}) = \frac{1}{5}(\mu_{31} + \mu_{32} + \mu_{33} + \mu_{34} + \mu_{35})$$


This can be specified to contrast as

{race#agegrp -1 -1 -1 -1 -1 0 0 0 0 0 1 1 1 1 1}

The following call to contrast yields the same test results as above.

. contrast {race#agegrp -1 -1 -1 -1 -1 1 1 1 1 1}

> {race#agegrp -1 -1 -1 -1 -1 0 0 0 0 0 1 1 1 1 1}, noeffects

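Contrasts of marginal linear predictions

Margins: asbalanced

------------------------------------------------
             |         df           F        P>F
-------------+----------------------------------
 race#agegrp |
    (1) (1)  |          1        6.28     0.0150
    (2) (2)  |          1        4.41     0.0399
      Joint  |          2        3.62     0.0329
             |
 Denominator |         60
------------------------------------------------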

The row labeled (1) (1) is the test for

$$\mu_{11} + \mu_{12} + \mu_{13} + \mu_{14} + \mu_{15} = \mu_{21} + \mu_{22} + \mu_{23} + \mu_{24} + \mu_{25}$$

It was the first specified contrast. The row labeled (2) (2) is the test for

$$\mu_{11} + \mu_{12} + \mu_{13} + \mu_{14} + \mu_{15} = \mu_{31} + \mu_{32} + \mu_{33} + \mu_{34} + \mu_{35}$$

It was the second specified contrast. The row labeled Joint tests (1) (1) and (2) (2) simultaneously.

We used the noeffects option above to suppress the table of contrasts. We can omit the 1/5 from the equations for $\mu_{1\cdot} = \mu_{2\cdot}$ and $\mu_{1\cdot} = \mu_{3\cdot}$ and still obtain the appropriate tests. However, if we want to calculate the differences in the marginal means, we must include the 1/5 = 0.2 on each of the contrast coefficients as follows:

. contrast {race#agegrp -0.2 -0.2 -0.2 -0.2 -0.2 ///

0.2 0.2 0.2 0.2 0.2} ///

{race#agegrp -0.2 -0.2 -0.2 -0.2 -0.2 ///

0 0 0 0 0 ///

0.2 0.2 0.2 0.2 0.2}
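As a rough check on what these coefficients estimate, the two differences can be worked out by hand from the cell means in the margins table above. This is only arithmetic on the displayed, rounded values, so the last digits may differ slightly from what contrast would report:

```latex
\begin{align*}
\hat\mu_{1\cdot} &= \tfrac{1}{5}(179.2309 + 196.4777 + 210.6694 + 214.097 + 221.6646) \approx 204.428 \\
\hat\mu_{2\cdot} &= \tfrac{1}{5}(186.0727 + 184.6714 + 196.2633 + 209.9953 + 211.0633) \approx 197.613 \\
\hat\mu_{3\cdot} &= \tfrac{1}{5}(176.2556 + 185.0209 + 199.2498 + 207.9189 + 225.118) \approx 198.713 \\
\hat\mu_{2\cdot} - \hat\mu_{1\cdot} &\approx -6.815 \\
\hat\mu_{3\cdot} - \hat\mu_{1\cdot} &\approx -5.715
\end{align*}
```

With the coefficients scaled by 0.2, the first contrast estimates the White minus Black difference and the second the Other minus Black difference, because the negative coefficients sit on the Black cells.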

So far, we have reproduced the reference category contrasts by specifying user-defined contrasts

on the marginal means and then on the cell means. For this test, it would have been easier to use the

r. contrast operator:

. contrast r.race, noeffects

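Contrasts of marginal linear predictions

Margins: asbalanced

--------------------------------------------------
                 |         df           F        P>F
-----------------+--------------------------------
            race |
(White vs Black) |          1        6.28     0.0150
(Other vs Black) |          1        4.41     0.0399
          Joint  |          2        3.62     0.0329
                 |
     Denominator |         60
--------------------------------------------------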
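The noeffects option above suppresses the estimates themselves. A minimal sketch of how the estimated differences might be displayed instead, assuming the model from this example (regress chol race##agegrp on the balanced data) is still the active estimation result. The margins line is offered only as a cross-check and is not part of the original example:

```stata
* Assumption: regress chol race##agegrp has just been fit on the balanced data

* Reference-category contrasts with estimates, tests, and confidence intervals
contrast r.race, effects

* The same reference-category contrasts computed through margins,
* treating the design as balanced to match the "Margins: asbalanced" setting above
margins r.race, asbalanced
```

Both commands should report the White vs Black and Other vs Black differences in the marginal means.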


In most cases, we can use contrast operators to perform tests. However, if we want to compare, for instance, the second and third age groups with the fourth and fifth age groups with the test

$$\frac{1}{2}(\mu_{\cdot 2} + \mu_{\cdot 3}) = \frac{1}{2}(\mu_{\cdot 4} + \mu_{\cdot 5})$$

there is not a contrast operator that corresponds to this particular contrast. A custom contrast is necessary.

. contrast {agegrp 0 -0.5 -0.5 0.5 0.5}

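Contrasts of marginal linear predictions

Margins: asbalanced

------------------------------------------------
             |         df           F        P>F
-------------+----------------------------------
      agegrp |          1       62.19     0.0000
             |
 Denominator |         60
------------------------------------------------

--------------------------------------------------------------
             |   Contrast   Std. err.     [95% conf. interval]
-------------+------------------------------------------------
      agegrp |
        (1)  |   19.58413   2.483318      14.61675     24.5515
--------------------------------------------------------------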
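The reported contrast can be checked by hand against the cell means from the earlier margins race#agegrp table. The sketch below assumes that each marginal mean of agegrp is the simple average of its three race cells (the asbalanced setting shown in the output) and uses the displayed, rounded values:

```latex
\begin{align*}
\hat\mu_{\cdot 2} &= \tfrac{1}{3}(196.4777 + 184.6714 + 185.0209) \approx 188.723 \\
\hat\mu_{\cdot 3} &= \tfrac{1}{3}(210.6694 + 196.2633 + 199.2498) \approx 202.061 \\
\hat\mu_{\cdot 4} &= \tfrac{1}{3}(214.097 + 209.9953 + 207.9189) \approx 210.670 \\
\hat\mu_{\cdot 5} &= \tfrac{1}{3}(221.6646 + 211.0633 + 225.118) \approx 219.282 \\
\tfrac{1}{2}(\hat\mu_{\cdot 4} + \hat\mu_{\cdot 5}) - \tfrac{1}{2}(\hat\mu_{\cdot 2} + \hat\mu_{\cdot 3})
  &\approx 214.976 - 195.392 \approx 19.584
\end{align*}
```

Because the contrast has a single degree of freedom, its F statistic is the square of the estimate divided by its standard error: $(19.58413 / 2.483318)^2 \approx 62.19$, which agrees with the table.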

Empty cells

An empty cell is a combination of the levels of factor variables that is not observed in the estimation

sample. In the previous examples, we have seen data with three levels of race, five levels of agegrp,

and all level combinations of race and agegrp present. Suppose there are no observations for white

individuals in the second age group (ages 20–29).

. use https://www.stata-press.com/data/r18/cholesterol2

(Artificial cholesterol data, empty cells)

. label list

race:

1 Black

2 White

3 Other

ages:

1 10--19

2 20--29

3 30--39

4 40--59

5 60--79


. regress chol race##agegrp

note: identifies no observations in the sample.

      Source |       SS           df       MS      Number of obs   =        70
-------------+----------------------------------   F(13, 56)       =     13.51
       Model |  15751.6113        13  1211.66241   Prob > F        =    0.0000
    Residual |  5022.71559        56  89.6913498   R-squared       =    0.7582
-------------+----------------------------------   Adj R-squared   =    0.7021
       Total |  20774.3269        69  301.077201   Root MSE        =    9.4706

------------------------------------------------------------------------------
        chol | Coefficient  Std. err.      t    P>|t|     [95% conf. interval]
-------------+----------------------------------------------------------------
        race |
      White  |   12.84185   5.989703     2.14   0.036     .8430383    24.84067
      Other  |   -.167627   5.989703    -0.03   0.978    -12.16644    11.83119
             |
      agegrp |
     20--29  |   17.24681   5.989703     2.88   0.006     5.247991    29.24562
     30--39  |   31.43847   5.989703     5.25   0.000     19.43966    43.43729
     40--59  |   34.86613   5.989703     5.82   0.000     22.86732    46.86495
     60--79  |   44.43374   5.989703     7.42   0.000     32.43492    56.43256
             |
 race#agegrp |
White#20--29 |          0  (empty)
White#30--39 |  -22.83983   8.470719    -2.70   0.009    -39.80872   -5.870939
White#40--59 |  -14.67558   8.470719    -1.73   0.089    -31.64447    2.293306
White#60--79 |  -10.51115   8.470719    -1.24   0.220    -27.48004    6.457735
Other#20--29 |  -6.054425   8.470719    -0.71   0.478    -23.02331    10.91446
Other#30--39 |  -11.48083   8.470719    -1.36   0.181    -28.44971    5.488063
Other#40--59 |  -.6796112   8.470719    -0.08   0.936     -17.6485    16.28928
Other#60--79 |  -1.578052   8.470719    -0.19   0.853    -18.54694    15.39084
             |
       _cons |   175.2309   4.235359    41.37   0.000     166.7464    183.7153
------------------------------------------------------------------------------

Now, let’s use contrast to test the main effects of race:

. contrast race

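Contrasts of marginal linear predictions

Margins: asbalanced

------------------------------------------------
             |         df           F        P>F
-------------+----------------------------------
        race |  (not testable)
             |
 Denominator |         56
------------------------------------------------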

By “not testable”, contrast means that it cannot form a test for the main effects of race based

on estimable functions of the model coefficients. agegrp has five levels, so contrast constructs an

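estimate of the $i$th margin for race as

$$\hat\mu_{i\cdot} = \frac{1}{5}\sum_{j=1}^{5} \hat\mu_{ij} = \hat\mu_{0} + \hat\alpha_{i} + \frac{1}{5}\sum_{j=1}^{5} \left\{ \hat\beta_{j} + (\widehat{\alpha\beta})_{ij} \right\}$$

but $(\widehat{\alpha\beta})_{22}$ was constrained to zero because of the empty cell, so $\hat\mu_{2\cdot}$ is not an estimable function of the model coefficients.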
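One way to see the empty cell directly is to tabulate the two factors within the estimation sample and then ask margins for the cell means. This is a sketch only; it assumes the regress chol race##agegrp fit on cholesterol2 shown above is still the active estimation result, and it is not part of the original example:

```stata
* Assumption: regress chol race##agegrp on cholesterol2 is the active estimation result

* Cell counts within the estimation sample; an empty cell shows a count of zero
tabulate race agegrp if e(sample)

* Cell means; the empty White#20--29 cell is expected to be flagged
* as not estimable rather than given a value
margins race#agegrp
```

The White margin averages over all five age groups, so a single cell without an estimable mean is enough to make that margin, and any test involving it, not estimable.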


See Estimable functions in Methods and formulas of [R] margins for a technical description of

estimable functions. The emptycells(reweight) option causes contrast to estimate $\mu_{2\cdot}$ by

$$\hat\mu_{2\cdot} = \frac{\hat\mu_{21} + \hat\mu_{23} + \hat\mu_{24} + \hat\mu_{25}}{4}$$

which is an estimable function of the model coefficients.

. contrast race, emptycells(reweight)

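Contrasts of marginal linear predictions

Margins: asbalanced
Empty cells: reweight

------------------------------------------------
             |         df           F        P>F
-------------+----------------------------------
        race |          2        3.17     0.0498
             |
 Denominator |         56
------------------------------------------------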
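The reweighted marginal means behind this test can also be inspected with margins, which accepts the same emptycells(reweight) option. A minimal sketch, assuming the regress chol race##agegrp fit on cholesterol2 is still the active estimation result; these commands are an illustration and not part of the original example:

```stata
* Assumption: regress chol race##agegrp on cholesterol2 is the active estimation result

* Marginal means of race, averaging only over the nonempty cells
margins race, asbalanced emptycells(reweight)

* Reference-category contrasts of those reweighted marginal means
margins r.race, asbalanced emptycells(reweight)
```

The asbalanced option is included so that margins weights the cells equally, as contrast does by default.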

We can reconstruct the effect of the emptycells(reweight) option by using custom contrasts.

. contrast {race#agegrp -4 -4 -4 -4 -4 5 0 5 5 5}

> {race#agegrp -1 -1 -1 -1 -1 0 0 0 0 0 1 1 1 1 1}, noeffects

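Contrasts of marginal linear predictions

Margins: asbalanced

------------------------------------------------
             |         df           F        P>F
-------------+----------------------------------
 race#agegrp |
    (1) (1)  |          1        1.06     0.3080
    (2) (2)  |          1        2.37     0.1291
      Joint  |          2        3.17     0.0498
             |
 Denominator |         56
------------------------------------------------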
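The coefficients -4 and 5 in the first custom contrast come from clearing the denominators in the reweighted constraint between the Black and White margins. A short derivation, using the same cell-mean notation as the rest of this section:

```latex
\begin{align*}
\tfrac{1}{5}(\mu_{11} + \mu_{12} + \mu_{13} + \mu_{14} + \mu_{15})
  &= \tfrac{1}{4}(\mu_{21} + \mu_{23} + \mu_{24} + \mu_{25}) \\
4(\mu_{11} + \mu_{12} + \mu_{13} + \mu_{14} + \mu_{15})
  &= 5(\mu_{21} + \mu_{23} + \mu_{24} + \mu_{25})
  && \text{(multiply both sides by 20)} \\
-4\sum_{j=1}^{5}\mu_{1j} + 5\,(\mu_{21} + 0\cdot\mu_{22} + \mu_{23} + \mu_{24} + \mu_{25}) &= 0
\end{align*}
```

Reading the coefficients off in cell order gives -4 -4 -4 -4 -4 for the Black cells and 5 0 5 5 5 for the White cells, with the zero on the empty White#20--29 cell; the coefficients for the Other cells are trailing zeros that contrast fills in.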

The row labeled (1) (1) is the test for

$$\frac{1}{5}(\mu_{11} + \mu_{12} + \mu_{13} + \mu_{14} + \mu_{15}) = \frac{1}{4}(\mu_{21} + \mu_{23} + \mu_{24} + \mu_{25})$$