9/1/2020 CMS DB2 Standards and Guidelines

CENTERS FOR MEDICARE AND MEDICAID SERVICES

DATABASE ADMINISTRATION

DB2 STANDARDS

9/1/2020

http://www.cms.hhs.gov/DBAdmin/downloads/DB2StandardsandGuidelines.pdf

OVERVIEW
DB2 DATABASE DESIGN STANDARDS
1.1 DB2 Design Overview
1.2 Databases
1.3 Tablespaces
1.4 Tables
1.5 Columns
1.6 Referential Constraints (Foreign Keys)
1.7 Table Check Constraints
1.8 Unique Constraints
1.9 ROWID
1.10 Identity Columns
1.11 Sequence Objects
1.12 Views
1.13 Indexes
1.14 Table Alias
1.15 Synonyms
1.16 Stored Procedures
1.17 User Defined Functions
1.18 User Defined Types
1.19 Triggers
1.20 LOBs
1.21 Buffer Pools
1.22 Capacity Planning
1.23 Space Requests
APPLICATION PROGRAMMING
2.1 Data Access (SQL)
2.2 Application Recovery
2.3 Program Preparation
2.3.1 DB2 Package
2.3.2 DB2 Plan
2.3.3 Explains
2.4 DB2 Development Tools
NAMING STANDARDS
3.1.1 Standard Naming Format for DB2 Objects
3.1.2 Application Identifiers
3.1.3 Environment Identifiers
3.1.4 Obsolete Object names
3.2 Image Copy Dataset Names
3.3 DB2 Subsystem Names
3.4 Production Library Names
3.5 Test Library Names
3.6 Utility Job Names
3.7 DB2/ORACLE Naming Issues
SECURITY
4.1 RACF Groups
4.1.1 DB2 Owner Groups
4.1.2 Role Based Access Groups
4.1.3 DB2 specific RACF Groups
4.2 DB2 Security Administration
4.2.1 Development
4.2.2 Integration
4.2.3 Validation
4.2.4 Production
4.3 Accessing DB2 Resources
4.3.1 Dynamic SQL Applications (SPUFI, SAS, QMF, DB2 Connect, etc.)
4.3.2 Static SQL Applications
4.3.3 Execution Environments
DATABASE MIGRATION PROCEDURES
5.1 DB2 Database Migration Overview
5.2 Preliminary Physical Database Design Review
5.2.1 Pre-Development Migration Review Checklist
5.2.2 Development Setup
5.3 Pre-Integration Migration Review
5.3.1 Pre-Integration Migration Review Checklist
5.4 Pre-Production Migration Review
5.4.1 Pre-Production Migration Review Checklist
DATABASE UTILITIES
6.1 CMS Standard DB2 Utilities
6.2 Restrictions on Utilities with QREPed Tables
DATABASE PERFORMANCE MONITORING
7.1 TOP 10 SQL Performance Measures
GLOSSARY
DOCUMENT CHANGES

Overview

This document describes the physical database design and naming standards for DB2 z/OS databases at the Centers for Medicare and Medicaid Services (CMS) Data Center.

All standards must be followed while developing the physical database design. Give particular care to the standards in this document that govern database object naming conventions, appropriate database object usage, and required object parameter settings. Although exceptions to the standards may be permitted, any deviation must be reviewed with and approved by the Central DBA staff before it is implemented in development.

DB2 Database Design Standards

1.1 DB2 Design Overview

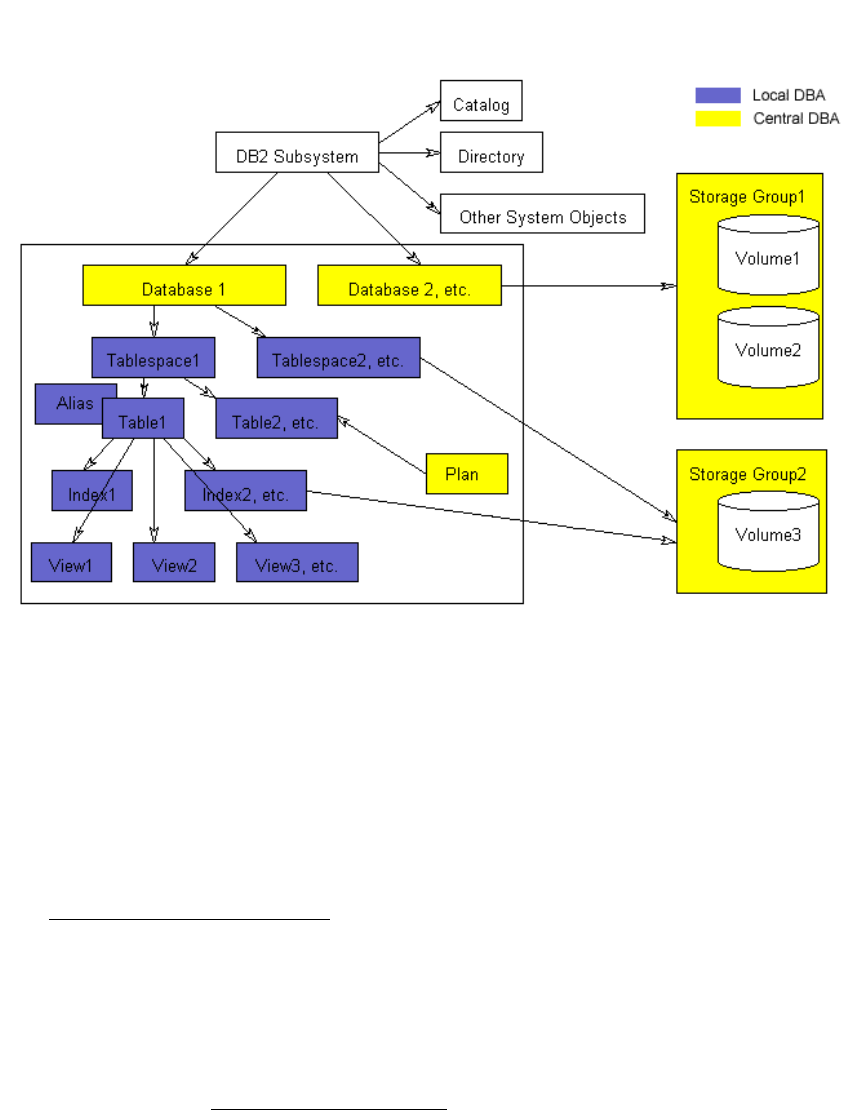

Creating and maintaining objects in DB2 is a shared responsibility. Systems Programmers are responsible for all system-related objects, including the catalogs, directories, buffer pools, and other DB2 system resources. Data Administrators create and maintain the logical models. In the development environments, Central DBAs create and maintain objects in preparation for subsequent use by the Local DBAs. These objects include storage groups, databases, and application plans. In the test environments, Local DBAs are responsible for all database objects that will be used by their corresponding application. Tablespaces, tables, aliases, indexes, and views are all created and maintained by the Local DBA. In integration, validation, and production, Central DBAs maintain all database objects. Data is managed and maintained by the application team.

CA AllFusion ERwin should be used to translate the approved logical data model to a physical model, and BMC Change Manager should be used to implement the data definitions generated from ERwin in DB2. When the physical data model is developed and implemented using CASE tools such as ERwin and BMC, the CMS standards must be applied in place of the tools' defaults.

Implementing a new database or a change to an existing database starts with a database service request to the Central DBA and Central DA teams. The change requester completes the CMS DB2 DB Service Request Form. If required, the completed form is forwarded to the Central DA team for column naming approval. After Central DA approval, the form is sent to the Central DBA team for approval and implementation.

An overview and instructions for the CMS DB2 DB Service Request and CMS DB2

DB Data Refresh forms may be found in the Overview of the CMS DB2 DB Service

Request/Data Refresh Request Forms document.

1.2 Databases

A database in DB2 is an object created to logically group other DB2 objects, such as tablespaces, tables, and indexes, within the DB2 catalog. Databases assist with the administration of DB2 objects by allowing many operational functions to occur at the database level. DB2 commands such as -START DATABASE and -STOP DATABASE, for example, allow entire groups of DB2 objects to be started and stopped using one command.

See Standard Naming Convention.

1.2.1 Object Usage

STANDARD

Please refer to the roles and responsibilities documentation.

1.2.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining an application database. DB2 default settings must not be

assumed for any of the following.

| Parameter | Instructions |
|---|---|
| BUFFERPOOL bpname | Provide a valid bufferpool designation for tablespaces. The default value BP0 is used for the DB2 Catalog only. Refer to the bufferpool section of this document. |
| STOGROUP STOGRPT1 | Specify a valid storage group. STOGRPT1 is the standard application storage group in all subsystems. The storage group specified becomes the default for any tablespace or indexspace in the database that is created without its own storage group specification. Do not specify the default DB2 storage group (SYSDEFLT). Unauthorized tablespaces residing in the SYSDEFLT storage group will be dropped without warning. |
| INDEXBP bpname | Provide a valid bufferpool designation for indexes. The default value BP0 is used for the DB2 Catalog only. Refer to the bufferpool section of this document. |
| CCSID encoding scheme | Specify the default encoding scheme. Typically this will be CCSID EBCDIC. |
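As a minimal sketch of the required parameters above, the statement below defines an application database. The database name XYZD0001 and the bufferpool names BP1 and BP2 are placeholders invented for illustration; actual values come from the naming and bufferpool standards or the Central DBA staff.

```sql
-- Hypothetical names: XYZD0001, BP1, and BP2 are illustrative only.
CREATE DATABASE XYZD0001
  BUFFERPOOL BP1          -- default bufferpool for tablespaces (not BP0)
  INDEXBP    BP2          -- default bufferpool for indexes (not BP0)
  STOGROUP   STOGRPT1     -- standard application storage group, never SYSDEFLT
  CCSID      EBCDIC;      -- default encoding scheme
```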

1.3 Tablespaces

A tablespace is the DB2 object that holds data for one or more DB2 tables. It is a

physical object that is managed in DB2.

See Standard Naming Convention.

1.3.1 Object Usage

STANDARD

• Assign one table per tablespace.

• Use universal tablespaces (UTS) for all new tablespaces.

• Define as partition-by-range (PBR) for all tables with a partitioning key, unless they meet the partition-by-growth (PBG) criteria below.

• Define as PBG:

o for small tables that will always be contained in a single partition of 4GB or less (growth past 4GB will require an alter to PBR if an appropriate key can be determined),

o or where no partitioning key can be determined.

o Central DBA (CDBA) concurrence must be obtained for PBG usage.

• Create tablespaces explicitly using the CREATE TABLESPACE command rather

than implicitly by creating a table without specifying a tablespace name.

• Use DB2 storage groups to manage storage allocation for all application

tablespaces.

• Specify CLOSE YES for all tablespaces.

• Specify LOCKSIZE PAGE or ANY for all tablespaces that are not identified as 'read-only'. LOCKSIZE PAGE is recommended and should be used for new databases. An exception to this standard is when it is determined that all updates to the table are performed in batch, and only by single-threaded jobs, in which case LOCKSIZE TABLE is recommended. Due to the additional overhead associated with maintaining row-level locks, use of LOCKSIZE ROW is permitted only when a high level of application concurrency is necessary. Additionally, the Central DBA staff must review and approve the use of any LOCKSIZE option other than LOCKSIZE PAGE or LOCKSIZE ANY.

• Only specify COMPRESS YES when it has been determined that significant

storage savings can be achieved by doing so. Typically, tablespaces containing

primarily character data will benefit more than those containing mainly numeric

data. Since data is compressed horizontally, tablespaces with longer average

row lengths are also good candidates for compression. When determining the

appropriateness of using DB2 compression, it is advisable to weigh the

associated CPU overhead (especially when modifying data) against the savings

in storage utilization as well as elapsed time for long running queries.

• For read-only tables, define tablespaces with PCTFREE and FREEPAGE of zero (0).

• Specify PRIQTY and SECQTY quantities that fall on a track or cylinder boundary. On a 3390 device a track equates to 48K and a cylinder equates to 720K. For tables over 100 cylinders, always specify PRIQTY and SECQTY values that are a multiple of 720. Be sure that PRIQTY and SECQTY allocations are not less than the size of one segment. For example, if SEGSIZE is 64, both PRIQTY and SECQTY should be no less than 256K (64 pages * 4K/page). Sliding scale secondary allocation, SECQTY=-1, is preferred. PRIQTY=-1 is useful in development.

• Define SEGSIZE as a multiple of four (4), choosing the value closest to the actual number of pages in the table stored in the tablespace. Specify SEGSIZE 64 for all tablespaces containing more than 64 pages of data.

• For single partition tables, use UTS PBR with MINVALUE/MAXVALUE in the CREATE TABLE partition range when the range is not predetermined.

• DBA review confirming that there is no appropriate partitioning key is required before defining Partition by Growth.

• Use multiple partition UTS PBR tablespaces when it is anticipated that the tablespace will exceed one million rows or two gigabytes of storage, or when it is determined that partitioning the tablespace will yield a performance benefit (e.g., parallel query processing or independent partition utility processing).
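The boundary arithmetic in the PRIQTY and SECQTY guidance above can be checked with a short query. This is a sketch only: the 100,000K space estimate is an invented figure, and the column aliases are illustrative.

```sql
-- Assumed input: an estimated space need of 100,000K (illustrative figure).
-- On a 3390 device, one track = 48K and one cylinder = 720K.
SELECT CEILING(100000 / 720.0)       AS CYLINDERS,  -- rounds up to 139 cylinders
       CEILING(100000 / 720.0) * 720 AS PRIQTY_K,   -- 139 * 720 = 100,080K, a multiple of 720
       64 * 4                        AS MIN_QTY_K   -- one 64-page segment of 4K pages = 256K
  FROM SYSIBM.SYSDUMMY1;
```

Rounding up rather than down keeps the allocation on a cylinder boundary without under-sizing the dataset.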

Index Partitioned Tablespace

• Migrate existing index-controlled partitioned tablespaces to UTS PBR when table changes are required by application development.

1.3.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining an application tablespace. DB2 default settings must not be

assumed for any of the following.

| Parameter | Instructions |
|---|---|
| IN database name | Specified database must be a valid application database. Application tablespaces must not be defined in the DB2 default database. Unauthorized tablespaces residing in the DB2 default database, DSNDB04, will be dropped without warning. |
| SEGSIZE | Specify the size of each segment of pages within the tablespace. For most tables, this value should be set to 64. |
| DSSIZE | Indicates the maximum data set size for each partition. Specify a value in gigabytes that indicates the maximum size for each partition or, for LOB table spaces, each data set. |
| NUMPARTS / MAXPARTITIONS | Specify the number of partitions (datasets) that will comprise the entire tablespace. MAXPARTITIONS requires DBA approval. |
| USING STOGROUP STOGRPT1 | Indicate the DB2 storage group on which the application tablespaces will reside. STOGRPT1 is the standard application storage group. Do not specify the default DB2 storage group (SYSDEFLT). Unauthorized tablespaces residing in the SYSDEFLT storage group will be dropped without warning. |
| PRIQTY | Primary quantity is specified in units of 1K bytes. Specify a primary quantity that will accommodate all of the data in the tablespace or partition. PRIQTY=-1 is allowed. |
| SECQTY | Secondary quantity is specified in units of 1K bytes. Specify a secondary quantity that is consistent with the anticipated growth of the table. The value specified should be large enough to prevent the tablespace from spanning more than three extents prior to the next scheduled REORG. SECQTY=-1 is preferred. |
| FREEPAGE | Indicate how often DB2 should leave a page of free space in the tablespace or partition when it is reorganized or when data is initially loaded. Note: The maximum value for segmented tables is 1 less than the value specified for SEGSIZE. |
| PCTFREE | Indicate what percentage of each page in the tablespace or partition should remain unused when it is reorganized or when data is initially loaded. |
| COMPRESS | Indicate whether DB2 should store data in the tablespace or partition in a compressed format. |
| BUFFERPOOL bpname | Provide a valid bufferpool designation. Do not specify the default bufferpool, BP0. Consult the bufferpool standards or the Central DBA staff. |
| LOCKSIZE | Specify the size of lock DB2 will acquire when data in the tablespace is accessed (see Object Usage standard above). |
| CLOSE | Indicate whether DB2 should close the corresponding VSAM dataset when no activity on the tablespace is detected (see Object Usage standard above). |
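The required parameters above might be combined as in the following sketch of a range-partitioned UTS. All names and sizes (XYZTS001, XYZD0001, BP1, four partitions, 10 cylinders of primary space, PCTFREE 10) are assumptions made for illustration, not CMS-assigned values.

```sql
-- Hypothetical names and sizes; every value here is illustrative only.
CREATE TABLESPACE XYZTS001
  IN XYZD0001                 -- application database, never DSNDB04
  USING STOGROUP STOGRPT1     -- standard application storage group
    PRIQTY 7200               -- 10 cylinders (10 * 720K) per partition
    SECQTY -1                 -- sliding scale secondary allocation
  NUMPARTS 4                  -- together with SEGSIZE, defines a PBR universal tablespace
  SEGSIZE 64
  DSSIZE 4 G                  -- maximum size of each partition
  FREEPAGE 0
  PCTFREE 10
  COMPRESS NO
  BUFFERPOOL BP1              -- not BP0
  LOCKSIZE PAGE
  CLOSE YES;
```

Every parameter is stated explicitly, including those that match DB2 defaults, so the DDL does not depend on subsystem default settings.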

1.4 Tables

A table in DB2 is a named collection of columns and rows. The data represented in

these rows can be accessed using SQL data manipulation language (select, insert,

update, and delete). Generally, it is a table in DB2 that applications and end-users

access to retrieve and manage business data.

See Standard Naming Convention.

1.4.1 Object Usage

STANDARD

• Assign one table per tablespace. Table and Clone are treated as one table.

• Default tablespaces and databases must not be used. Tables must be created in

a tablespace which was explicitly created for the corresponding application.

• In conformance with relational theory, all rows of a table should be uniquely identified by a column or set of columns to prevent duplicate rows. It is therefore required that every table defined to DB2 include a primary key. If a table does not have a viable group of columns that can be defined as a primary key, consult the Central DBA staff for direction.

1.4.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining an application table. DB2 default settings must not be

assumed for any of the following.

| Parameter | Instructions |
|---|---|
| (column definition, ...) | List column specifications for each column that is to be defined to the table (see Additional Table Definition Parameters below for more details). |
| IN database.tablespace | Specify the fully qualified tablespace name where data for the table should be stored. Do not reference the DB2 default database. Unauthorized tablespaces residing in the DB2 default database, DSNDB04, will be dropped without warning. |
| PARTITION BY RANGE(…) | Required to create standard UTS PBR tablespace. |
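A table definition that supplies these parameters could look like the sketch below. The table, column, constraint, database, and tablespace names, along with the partition limit values, are hypothetical and chosen only to show the shape of the statement.

```sql
-- Hypothetical names and limit values; illustrative only.
CREATE TABLE XYZ_CLM
  (CLM_ID       INTEGER       NOT NULL,
   PROV_NUM     CHAR(08)      NOT NULL,
   CLM_RCPT_DT  DATE          NOT NULL,
   CLM_PD_AMT   DECIMAL(9,2),
   CONSTRAINT XYZ_CLM_PK PRIMARY KEY (CLM_ID))
  IN XYZD0001.XYZTS001                  -- explicitly created tablespace
  PARTITION BY RANGE (CLM_ID)
    (PARTITION 1 ENDING AT (25000000),
     PARTITION 2 ENDING AT (50000000),
     PARTITION 3 ENDING AT (75000000),
     PARTITION 4 ENDING AT (MAXVALUE)); -- last partition takes all remaining values
```

A unique index on CLM_ID is still needed to enforce the primary key before the table can be used.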

1.5 Columns

Columns in a DB2 table contain data that was loaded, inserted, or updated by some process. All columns have a corresponding datatype to indicate the format of the data within. In addition to datatypes, other edits can be associated with columns in DB2 in order to enforce defined business rules. These rules include default values, check constraints, unique constraints, and referential integrity (foreign keys).

See Standard Naming Convention.

1.5.1 Object Usage

STANDARD

• Nulls should only be used when there is a need to know the difference between

the absence of a value versus a default value.

• Nulls are used for missing dates and amounts. Character columns will generally be defined as NOT NULL WITH DEFAULT, so that the system default of blanks, rather than null, represents a missing value. This saves the space and additional variable required by a null indicator. For example, use Y/N/blank or A/B/C/blank rather than Y/N/null. Nulls may be used for character columns to support RI, such as ON DELETE SET NULL, or where blank is a valid, non-missing value.

• The Central DA team should be consulted for a list of standard domains and

column definitions.

• Columns that represent the same data but are stored on different tables must

have the same name, datatype and length specification. An example of this is

where tables are related referentially. If on a PROVIDER table, for example, the

primary key is defined as PROV_NUM CHAR(08), then dependent tables must

include PROV_NUM CHAR(08) as a foreign key column.

• Columns that contain data which is determined to have the same domain must

be defined using identical datatype and length specifications. For example,

columns LAST_CHG_USER_ID and CASE_ADMN_USER_ID serve different

business purposes; however, both should be defined as CHAR (08) in DB2. This

standard applies to the entire enterprise and should not be enforced solely at an

application level.

• All columns containing date information must be defined using the

DATE/TIMESTAMP data types.

• All columns containing time information must be defined using the

TIME/TIMESTAMP data types.

• Specify a datatype that best represents the data the column will contain. For

numeric data, use one of the supported numeric data types taking into account

the minimum and maximum value limits as well as storage requirements for

each.

• For character data which may exceed 30 characters in length, consider use of

the VARCHAR datatype. This could provide substantial savings in storage

requirements. When weighing the benefits of defining a column to be variable in

length, consider the average length of data that will be stored in this column. If

the average length is less than 80% of the total column width, a variable length

column may be appropriate. If data compression is used on the tablespace the

Central DBA should be contacted to determine whether VARCHAR should still be used.

• Consider the sequence of the column definitions to improve database performance. Use the following as a guideline for sequencing columns on DB2 tables:

o Primary Key columns (for reference purposes only)

o Frequently read columns

o Infrequently read columns

o Infrequently updated columns

o Variable length columns

o Frequently updated columns

• For columns defined as DECIMAL be sure to use an odd number as the precision

specification to ensure efficient storage utilization. Precision represents the

entire length of the column, so in the definition DECIMAL (9,2), the precision is

9.

• For columns that hold whole numbers, SMALLINT or INTEGER should be used.
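Several of the column standards above can be seen together in one definition. The sketch below uses a hypothetical XYZ_CASE table; apart from PROV_NUM, LAST_CHG_USER_ID, and CASE_ADMN_USER_ID, which appear in the examples above, every name and length is an assumption for illustration.

```sql
-- Hypothetical table, tablespace, and most column names; illustrative only.
CREATE TABLE XYZ_CASE
  (CASE_ID            INTEGER       NOT NULL,               -- whole-number key
   PROV_NUM           CHAR(08)      NOT NULL,               -- same name, type, and length as on the parent table
   CASE_ACTV_IND      CHAR(01)      NOT NULL WITH DEFAULT,  -- Y/N/blank: blank, not null, means missing
   LAST_CHG_USER_ID   CHAR(08)      NOT NULL WITH DEFAULT,
   CASE_ADMN_USER_ID  CHAR(08)      NOT NULL WITH DEFAULT,  -- same domain, so same CHAR(08)
   CASE_OPEN_DT       DATE          NOT NULL,
   CASE_CLOS_DT       DATE,                                 -- nullable: the date may be missing
   CASE_PD_AMT        DECIMAL(9,2),                         -- nullable amount with odd precision
   CASE_NOTE_TXT      VARCHAR(200)  NOT NULL WITH DEFAULT,  -- long text, usually much shorter than 200
   CONSTRAINT XYZ_CASE_PK PRIMARY KEY (CASE_ID))
  IN XYZD0001.XYZTS002
  PARTITION BY RANGE (CASE_ID)
    (PARTITION 1 ENDING AT (MAXVALUE));
```

The odd precision costs nothing: DECIMAL(8,2) and DECIMAL(9,2) both occupy 5 bytes, so the even precision would waste half a byte of capacity.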

1.5.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining columns in an application table. DB2 default settings must not

be assumed for any of the following.

| Parameter | Instructions |
|---|---|
| Column name | Provide a name for the column which conforms to DB2 Standard Naming Conventions. |
| Datatype specification | Provide a datatype specification consistent with the characteristics of the data the column will hold. |
| NOT NULL / NOT NULL WITH DEFAULT [default value] | Indicate that a value or default value is required for the column. Under some circumstances, this clause may not be required (see Object Usage Standard above). |

For additional information see IBM’s Reference manuals.

1.6 Referential Constraints (Foreign Keys)

A referential constraint is a rule that defines the relationship between two tables. It

is implemented by creating a foreign key on a dependent table that relates to the

primary key (or unique constraint) of a parent table.

See Standard Naming Convention.

1.6.1 Object Usage

STANDARD

• Names for all foreign key constraints must be explicitly defined using data

definition language (DDL). Do not allow DB2 to generate default names.

• DDL syntax allows foreign key constraints to be defined alongside the corresponding column definition, as a separate clause at the end of the table definition, or in an ALTER TABLE statement. Each of these methods is acceptable at CMS provided all of the required parameters noted below are included in the definition.

• Avoid establishing referential constraints for read-only tables.

• Do not define referential constraints on tables residing in multi-table tablespaces

where the tables in the tablespace are not part of the same referential structure.

• When possible, limit the number of levels in a referential structure (all tables

which have a relationship to one another) to three.

• Use column constraints instead of referential constraints to a code table for small groups of unchanging codes, such as sex, Y/N values, and reason codes.

1.6.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining a foreign key constraint in an application table. DB2 default

settings must not be assumed for any of the following.

| Parameter | Instructions |
|---|---|
| constraint name | Specify a name for the foreign key constraint based on the Standard Naming Convention. |
| (column name...) | Indicate the column(s) that make up the foreign key constraint. These columns must correspond to a primary key or unique constraint in the parent table. |
| REFERENCES table name | Specify the name of the table to which the foreign key constraint refers. If the foreign key constraint is based on a unique constraint of a parent table, also provide the column names that make up the corresponding unique constraint. |
| ON DELETE delete rule | Specify the appropriate delete rule which should be applied whenever an attempt is made to delete a corresponding parent row. Valid values include RESTRICT, NO ACTION, SET NULL, and CASCADE. (Warning: Use of the CASCADE delete rule can result in the mass deletion of numerous rows from dependent tables when one row is deleted from the corresponding parent. No response is returned to the deleting application indicating the mass delete occurred. Therefore, strong consideration should be given as to the appropriateness of implementing this rule in the physical design.) |

For additional information see IBM’s Reference manuals.
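As a sketch of the required foreign key parameters, the statement below adds an explicitly named constraint through ALTER TABLE. The table names XYZ_CLM and XYZ_PROV and the constraint name XYZ_CLM_FK1 are hypothetical; actual names follow the Standard Naming Convention.

```sql
-- Hypothetical table and constraint names; illustrative only.
ALTER TABLE XYZ_CLM
  ADD CONSTRAINT XYZ_CLM_FK1            -- explicit name, not DB2-generated
      FOREIGN KEY (PROV_NUM)            -- column(s) on the dependent table
      REFERENCES XYZ_PROV (PROV_NUM)    -- parent table and its key column(s)
      ON DELETE RESTRICT;               -- delete rule stated, not defaulted
```

RESTRICT is used here only as an example of stating the delete rule explicitly; the appropriate rule depends on the business relationship.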

1.7 Table Check Constraints

A check constraint (also known as a table or column constraint) is a rule defined to a table which dictates how edits will be applied against data that is either inserted or updated. The constraint rule is implemented using data definition language (DDL) and can apply to a specific column or multiple columns on a table. Constraint rules are always checked against one row of data at any point in time. Check constraints are preferred to RI on code tables for performance. Static codes with under a dozen values are more efficiently enforced using check constraints, with descriptions kept locally in UI code rather than accessing a code table with a select.

See Standard Naming Convention.

1.7.1 Object Usage

STANDARD

• Names for all check constraints must be explicitly defined using data definition

language (DDL). Do not allow DB2 to generate default names.

• DDL syntax allows check constraints to be defined alongside the corresponding column definition, as a separate clause at the end of the table definition, or in an ALTER TABLE statement. Each of these methods is acceptable at CMS provided all of the required parameters noted below are included in the definition.

• Several restrictions apply when defining check constraints on a table or column.

Refer to the DB2 SQL Reference manual for more details.

• Use caution when defining check constraints. Although DB2 will verify the syntax

of SQL commands, it will not verify the meaning. It is possible to implement a

check constraint that conflicts with other rules defined for the table. For

example, the syntax listed below would be accepted by DB2 even though the

column could never be inserted with a default value of 5.

CREATE TABLE XYZ_TAB_A
  (COL1_CD SMALLINT NOT NULL WITH DEFAULT 5
     CONSTRAINT XYZ013_COL1_CD
     CHECK (COL1_CD BETWEEN 10 AND 20))

• Verify that check constraint rules do not conflict with any defined referential

rules. For example, if a foreign key is defined as ON DELETE SET NULL and a

check constraint is defined on the foreign key column as a rule that would

prohibit nulls, DB2 will allow both rules to exist. However, any attempt to delete

a parent row will fail due to the check constraint on the dependent table.

• Code tables with the ‘Not Enforced’ foreign key parameter may be used along

with check constraints when there are many values which require descriptions,

or when multiple application environments require consistent descriptions. This

identifies the RI for the code table to Erwin while retaining the efficiency of using

a check constraint.

1.7.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining a table check constraint in an application table. DB2 default

settings must not be assumed for any of the following.

Parameter                     Instructions

CONSTRAINT constraint name    Specify a name for the check constraint based on the
                              Standard Naming Convention.

CHECK (condition)             Indicate the business rule using valid SQL syntax. This rule
                              will look similar to conditions expressed in an SQL WHERE
                              clause.

For additional information see IBM’s Reference manuals.
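
The three placement options described in Object Usage can be sketched as follows. This is an illustration only: the table, column, and constraint names are hypothetical placeholders rather than names derived from the Standard Naming Convention, and the tablespace clause and other table parameters required elsewhere in this document are omitted for brevity.

```sql
-- Hypothetical names throughout; shown only to illustrate explicit constraint naming.
CREATE TABLE XYZ_TAB_B
  (STUS_CD  CHAR(1) NOT NULL
     -- Option 1: constraint defined alongside the column definition
     CONSTRAINT XYZ014_STUS_CD
     CHECK (STUS_CD IN ('A', 'I', 'P')),
   BGN_DT   DATE    NOT NULL,
   END_DT   DATE    NOT NULL,
   -- Option 2: constraint defined as a separate clause at the end of the table
   CONSTRAINT XYZ015_DT_RNG
     CHECK (END_DT >= BGN_DT));

-- Option 3: constraint added later with ALTER TABLE
ALTER TABLE XYZ_TAB_B
  ADD CONSTRAINT XYZ016_BGN_DT
  CHECK (BGN_DT >= '2000-01-01');
```

In each case both required parameters, CONSTRAINT and CHECK, are stated explicitly, so DB2 never generates a default constraint name.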

1.8 Unique Constraints

A unique constraint is a rule defined to a table which indicates that any occurrence

(row) in the table contains a distinct value in the column or combination of columns

that make up the constraint. The constraint rule is implemented using data

definition language (DDL) and can apply to a specific column or multiple columns on

a table. A unique constraint is similar to a primary key where both implement entity

integrity rules which state that each row in a table is uniquely identified by a non-

null value or set of values. The major difference between primary keys and unique

constraints is the fact that although only one primary key can be defined for one

table, multiple unique constraints can exist.

1.8.1 Object Usage

STANDARD

• Names for all unique constraints must be explicitly defined using data definition

language (DDL). Do not allow DB2 to generate default names.

• DDL syntax allows unique constraints to be defined alongside the corresponding
column definition, as a separate clause at the end of the table definition, or in
an ALTER TABLE statement. Each of these methods is acceptable at CMS provided all
of the required parameters noted below are included in the definition.

• DB2 requires that a unique index be created to support each unique constraint

defined for a table. DB2 will mark a table as unusable until such an index is

created.

1.8.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining a unique constraint in an application table.

Parameter                     Instructions

constraint-name               Specify a name for the unique constraint based on the
                              Standard Naming Convention.

(column name, ...)            Specify the name of the column or list of columns which
                              make up the unique constraint.
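
A minimal sketch of a named unique constraint and its supporting unique index follows. All object names are hypothetical, and the index parameters required in section 1.13.2 (storage group, space quantities, bufferpool, and so on) are left out here to keep the example short.

```sql
-- Hypothetical names; the constraint is named explicitly rather than generated by DB2.
CREATE TABLE XYZ_TAB_C
  (BENE_ID  INTEGER  NOT NULL,
   CLM_NUM  CHAR(15) NOT NULL,
   CONSTRAINT XYZ_CLM_NUM_UK UNIQUE (CLM_NUM));

-- Unique index on the same column list, created to support the constraint.
CREATE UNIQUE INDEX XYZ_TAB_C_IX1
  ON XYZ_TAB_C (CLM_NUM ASC);
```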

1.9 ROWID

ROWID is a column data type that generates a unique value for each row in a table.
Using the ROWID column as the partitioning key may allow for random distribution
across partitions. DB2 will always generate the value for a ROWID column if
"GENERATED ALWAYS" is specified. ROWID has an internal representation of 19
bytes which never changes: the first two bytes are the length field, followed by 17
bytes for the ROWID. The external representation of the ROWID is 40 bytes with
the RID appended (the RID changes with a REORG). ROWID values can only be assigned
to a ROWID column or ROWID variable. When using a host variable to receive a
ROWID, the host variable must be declared as a ROWID for processing by
the precompiler:

EXEC SQL BEGIN DECLARE SECTION END-EXEC.

01 ABC-ROWID SQL TYPE IS ROWID.

EXEC SQL END DECLARE SECTION END-EXEC.

To assign a character string to a ROWID, you must first cast it to the ROWID data
type. There are two ways to cast a CHAR, VARCHAR, or HEX literal:

• CAST (expression AS ROWID)

• CAST (X'hex_literal' AS ROWID)

1.9.1 Object Usage

STANDARD

• Table cannot have more than one ROWID column.

• Trigger cannot modify a ROWID column.

• ROWID column cannot be declared a primary or foreign key.

• ROWID column cannot be updated.

• ROWID column cannot be defined as nullable.

• Cannot have a ROWID column on a temporary table.

• ROWID column cannot have field procedures.

• ROWID column cannot have check constraints.

• Tables with a ROWID column cannot have an EDITPROC

• ROWID data type cannot be used with DB2 private protocol, can only be used

with DRDA.

• ROWID column is required for implementing LOBs.

1.9.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining a ROWID column. DB2 default settings must not be assumed

for any of the following.

Parameter                     Instructions

GENERATED BY DEFAULT          DB2 accepts valid row IDs as inserted values for a row.
                              DB2 will generate a value if none is specified.
                              A unique single-column index must be defined on the ROWID
                              column (rows cannot be inserted until it is created).
                              Recommended for tables populated from another table.

GENERATED ALWAYS              DB2 will always generate a value.
                              An index is not required with this approach.
                              The LOAD utility cannot be used to load ROWID values if the
                              ROWID column was created with the GENERATED ALWAYS clause.
                              This is the recommended approach.

For additional information see IBM’s Reference manuals.
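
As a sketch of the recommended approach, the definition below declares a ROWID column with GENERATED ALWAYS. The table and column names are hypothetical, and other table parameters required by this document are omitted.

```sql
-- Hypothetical table; DB2 generates DOC_ROW_ID for every inserted row.
CREATE TABLE XYZ_TAB_D
  (DOC_ID      INTEGER NOT NULL,
   DOC_ROW_ID  ROWID   NOT NULL GENERATED ALWAYS);

-- The ROWID column is left out of the column list because DB2 always supplies it.
INSERT INTO XYZ_TAB_D (DOC_ID) VALUES (1001);
```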

1.10 Identity Columns

An identity column generates a unique sequential value for the column when a row is
added to the table. Sequential incrementing is not guaranteed if more than one
transaction is updating the table. For a unit of work that is rolled back, the allocated
numbers that have already been used are not reissued.

1.10.1 Object Usage

STANDARD

• Table cannot have more than one Identity column.

• Identity columns cannot be defined as nullable.

• Cannot have a FIELDPROC.

• Table(s) with an Identity column cannot have an EDITPROC.

• Use of "WITH DEFAULT" is not allowed.

• The data type of an identity column can be INTEGER, SMALLINT, or DECIMAL

with a scale of 0. The column can also be a distinct type based on one of these

data types.

• Issuing a recovery to a prior point in time will cause DB2 not to reissue those

values already used.

• CREATE TABLE ... LIKE ... INCLUDING IDENTITY causes the newly created table
to inherit the identity column of the old table.

• The ALTER TABLE statement can be used to add an identity column. If the table is
populated when the ALTER is issued, the table is placed in the REORG pending
state.

1.10.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining an Identity column. DB2 default settings must not be assumed

for any of the following.

Parameter                     Instructions

GENERATED BY DEFAULT          An explicit value can be provided; if a value is not provided,
                              DB2 generates a value.
                              Uniqueness is not guaranteed except for previously DB2-generated
                              values. You must create a unique index to guarantee unique
                              values. Developers need to check for SQLCODE -803, which
                              indicates an attempt to insert a duplicate value in a column
                              with a unique index.

GENERATED ALWAYS              DB2 will always generate a value; an explicit value cannot be
                              specified in an INSERT or UPDATE statement. RI to columns
                              using GENERATED ALWAYS is not allowed because new values are
                              assigned if the table is reloaded.

AS IDENTITY                   This designates the column as an identity column. The
                              column is implicitly NOT NULL.

START WITH                    Specify only if the starting value is not 1. The value can be
                              positive or negative and must be valid for the chosen data
                              type.

INCREMENT BY                  Value by which the identity column will be incremented (1 is
                              the default). A positive value produces an ascending
                              sequence; a negative value produces a descending sequence.
                              The value must be valid for the chosen data type.

CACHE 20                      Preallocated values that are kept in memory by DB2 (the
                              default is 20). The minimum value that can be specified is 2,
                              and the maximum is the largest value that can be
                              represented as an integer.
                              During a system failure, all cached identity column values
                              that are yet to be assigned are lost and will never be
                              used. Therefore, the value specified for CACHE also
                              represents the maximum number of values for the identity
                              column that could be lost during a system failure.

NO CACHE                      No preallocated values are kept in memory by DB2. This is
                              useful if it is necessary to assign numbers in sequence as
                              the rows are inserted in a sysplex data sharing environment.

For additional information see IBM’s Reference manuals.
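
The parameters above might be combined as in the following sketch. The table and column names are hypothetical; the option values simply restate the defaults explicitly, since default settings must not be assumed.

```sql
-- Hypothetical table; every identity option is stated rather than defaulted.
CREATE TABLE XYZ_TAB_E
  (EVNT_ID   INTEGER
     GENERATED ALWAYS AS IDENTITY
       (START WITH 1,
        INCREMENT BY 1,
        CACHE 20),
   EVNT_TXT  VARCHAR(100) NOT NULL);

-- EVNT_ID is omitted from the column list; DB2 assigns the next value.
INSERT INTO XYZ_TAB_E (EVNT_TXT) VALUES ('FIRST EVENT');
```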

1.11 Sequence Objects

Sequence Objects generate unique sequential numbers independently of a table.

“Many users can access and increment the sequence concurrently without waiting.

DB2 does not wait for a transaction that has incremented a sequence to commit

before allowing another transaction to increment the sequence again.”

Sequence Objects are preferred over identity columns when the generated value is
used as an id with RI on multiple tables. The sequence value is known before the
parent record is inserted, removing the need to insert and then select the parent
before inserting the child records.

1.11.1 Object Usage

Sequence Objects may not be defined with NO CACHE or ORDER unless an
exception is given by the Central DBAs. This means that there may be gaps in
the numbering between records.
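
The parent/child pattern described in section 1.11 can be sketched as below. The sequence name and the XYZ_ORD and XYZ_ORD_LINE tables are hypothetical and assumed to exist already; the sequence is defined with CACHE and NO ORDER in line with the rule above.

```sql
-- Hypothetical sequence; cached and unordered per the Object Usage rule.
CREATE SEQUENCE XYZ_ORD_SEQ
  AS INTEGER
  START WITH 1
  INCREMENT BY 1
  NO CYCLE
  CACHE 20
  NO ORDER;

-- Parent row: obtains a new value from the sequence.
INSERT INTO XYZ_ORD (ORD_ID, ORD_DT)
  VALUES (NEXT VALUE FOR XYZ_ORD_SEQ, CURRENT DATE);

-- Child row: reuses the value just generated in this session,
-- so the parent does not have to be selected back first.
INSERT INTO XYZ_ORD_LINE (ORD_ID, LINE_NUM, ITEM_CD)
  VALUES (PREVIOUS VALUE FOR XYZ_ORD_SEQ, 1, 'A100');
```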

1.12 Views

A View in DB2 is an alternative representation of data from one or more tables or

views. Although they can be accessed using data manipulation language (SELECT,

INSERT, DELETE, UPDATE), views do not contain data. Actual data is stored with

the underlying base table(s). Views are generally created to solve business

requirements related to security or ease of data access. A view may be required to

limit the columns or rows of a particular table a class of business users are

permitted to see. Views are also created to simplify user access to data by resolving

complicated SQL calls in the view definition.

See Standard Naming Convention.

1.12.1 Object Usage

STANDARD

• View definitions must reference base tables only.

• Do not create views which reference other views.

Use views sparingly. Create views only when it is determined that direct access to

the actual table does not adequately serve a particular business need. In general,

views should not be required if the data on a table is not sensitive and is only

accessed using predefined SQL such as static embedded SQL.

1.12.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining a view. DB2 default settings must not be assumed for any of

the following.

Parameter                     Instructions

(column list, ...)            List column names for each column that is to be defined to
                              the view. These names must conform to the DB2 Standard
                              Naming Convention for column names.

AS select statement           Specify the SQL SELECT statement that defines this view. Do
                              not include existing views as part of the view definition.
                              Do not use SELECT * in the select statement.
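
A minimal sketch of a view that follows these rules is shown here. The view and the XYZ_BENE base table are hypothetical; the point is the explicit column list on both the view and the SELECT, and a base table (not another view) in the FROM clause.

```sql
-- Hypothetical view restricting rows to active beneficiaries.
CREATE VIEW XYZ_ACTV_BENE_V
  (BENE_ID, BENE_LAST_NAME, STUS_CD)
AS SELECT BENE_ID, BENE_LAST_NAME, STUS_CD
     FROM XYZ_BENE
    WHERE STUS_CD = 'A';
```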

1.13 Indexes

An index is an ordered set of data values with corresponding record identifiers

(RIDs) which identify the location of data rows on a tablespace. An index is created

for one table and consists of data values from one or many columns on the table.

When an index is created, one or more VSAM linear datasets used to hold the data
and RID values are also created. This physical object (dataset), known as an
indexspace, can be managed in DB2 using storage groups (DB2 Managed).

See

Standard Naming Convention.

1.13.1 Object Usage

STANDARD

• Use DB2 storage groups to manage storage allocation for all indexes.

• Specify CLOSE YES for all indexes.

• Specify COPY YES for all indexes that will be Image Copied.

• For read-only tables, define indexes with PCTFREE 0, FREEPAGE 0.

• When creating an index on a table that already contains over 5,000,000 rows,

use the DEFER option then use a REBUILD INDEX utility to build the index. By

stating DEFER YES on the CREATE INDEX statement, DB2 will simply add the

index definition to the DB2 catalog; the actual index entries will not be built.

Using this method can significantly reduce the amount of resources needed to

create an index on a large table.

• One index on every table must be explicitly defined as the clustering index.

• Specify PRIQTY and SECQTY quantities that fall on a track or cylinder boundary.

On a 3390 device a track equates to 48K and a cylinder equates to 720K.

• For indices over 100 cylinders always specify a PRIQTY and SECQTY on a

cylinder boundary, or a number that is a multiple of 720.

1.13.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining an index. DB2 default settings must not be assumed for any of

the following.

Parameter                     Instructions

CLUSTER                       One index for each table must be designated as the
                              clustering index. Not doing so will cause DB2 to assume the
                              first index created on the table as the clustering index.

USING STOGROUP stogroup name  Indicate the DB2 storage group on which the indexspace will
                              reside. Do not specify the default DB2 storage group
                              (SYSDEFLT). Unauthorized objects defined in the default
                              storage group will be dropped without warning. See
                              Standard Naming Convention.

PRIQTY                        Primary quantity is specified in units of 1K bytes. Specify a
                              primary quantity that will accommodate all of the index
                              entries in the table or partition (for partitioned indexes).

SECQTY                        Secondary quantity is specified in units of 1K bytes. Specify
                              a secondary quantity that is consistent with the anticipated
                              growth of the table or partition (for partitioned indexes).
                              The value specified should be large enough to prevent the
                              indexspace from spanning more than three extents prior to
                              the next scheduled REORG.

FREEPAGE                      Indicate how frequently DB2 should reserve a page of free
                              space on the indexspace when data is initially loaded to
                              the table or when the index or index partition is rebuilt
                              or reorganized.

PCTFREE                       Indicate what percentage of each page on the indexspace
                              should remain unused when data is initially loaded to the
                              table or when the index or index partition is rebuilt or
                              reorganized.

BUFFERPOOL bpname             Provide a valid bufferpool designation. The default value is
                              BP0, which should never be used because it is reserved for
                              the DB2 catalog. Consult with the central DBA to determine
                              the appropriate bufferpool setting for your database objects.

CLOSE YES                     Indicate whether DB2 should close the corresponding VSAM
                              dataset when no activity on the indexspace is detected (see
                              Object Usage standard above).

COPY                          Indicates whether the COPY utility is allowed for this index.
                              Valid values are NO or YES.
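
Putting the required parameters together, an index definition might look like the sketch below. The index, table, and storage group names are hypothetical, and the free space values are arbitrary. The space quantities follow the cylinder arithmetic given in Object Usage: one 3390 cylinder is 720K (15 tracks of 48K), so PRIQTY 7200 is 10 cylinders and SECQTY 720 is 1 cylinder. At 7200K the index falls in the medium range, hence BP4.

```sql
-- Hypothetical clustering index with every required parameter stated explicitly.
CREATE INDEX XYZ_BENE_IX1
  ON XYZ_BENE (BENE_ID ASC)
  CLUSTER
  USING STOGROUP XYZSTG01   -- hypothetical storage group, not SYSDEFLT
    PRIQTY 7200             -- 10 cylinders x 720K
    SECQTY 720              -- 1 cylinder
  FREEPAGE 0
  PCTFREE 10
  BUFFERPOOL BP4            -- medium index (> 240K and < 48000K)
  CLOSE YES
  COPY NO;
```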

1.14 Table Alias

A DB2 Alias provides an alternate name for a table or view that resides on the local

or remote database server. At CMS, aliases are maintained so that application
programs can reference unqualified table and view names belonging to different
owners under the single owner of their packages.

See

Standard Naming Convention.

1.14.1 Object Usage

STANDARD

Aliases are utilized in the development environments to access another application’s

tables with different qualifiers. A common owner is used in production instead of

aliases.

1.14.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining an alias. DB2 default settings must not be assumed for any of

the following.

Parameter                     Instructions

CREATE ALIAS alias name       Specify an alias name consistent with the Standard Naming
                              Convention.

FOR owner.tablename           Provide a fully qualified table name (creator.tablename)
                              for the corresponding table.
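
For illustration, an alias that lets one application's packages reach another application's table might be defined as follows. Both qualifiers and the table name are hypothetical, patterned loosely on the DBXYZ0D0 qualifier used in the BIND example later in this document.

```sql
-- Hypothetical: packages qualified by DBXYZ0D0 can reference ABC_CLM unqualified,
-- and DB2 resolves it to the table owned by DBABC0D0.
CREATE ALIAS DBXYZ0D0.ABC_CLM
  FOR DBABC0D0.ABC_CLM;
```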

1.15 Synonyms

A DB2 Synonym is an alternate name an individual can assign to a table or a view.

After creating a synonym, the individual can refer to the unqualified synonym name

(name without a creator prefix). DB2 will recognize the unqualified name as a

synonym and will translate the synonym name to the actual fully qualified table or

view name (creator.name). With current releases of DB2, synonyms no longer

provide a significant benefit in a development environment and will not be

supported at CMS.

1.15.1 Object Usage

STANDARD

Applications developed and maintained at CMS must not reference DB2 synonyms.

Synonyms will not be supported in the validation and production environments.

1.16 Stored Procedures

A Stored Procedure is a compiled program defined at a local or remote DB2 server

that is invoked using the SQL CALL statement.

See

Standard Naming Convention.

1.16.1 Object Usage

STANDARD

• Stored Procedures will be maintained, migrated and compiled through Endevor.

• Close and commit statements must be executed in the invoking modules to

release held DB2 resources.

• To plan the configuration of the WLM environment, the application is responsible

for notifying the Central DBA of the intent to use External Stored Procedures and

what purpose the Stored Procedures will have, prior to developing and testing.

This will include but is not limited to, business requirements, type of access, and

performance considerations.

• Nested External Stored Procedures are not permitted at CMS. After invoking the

initial stored procedure subsequent procedures should be invoked using

application language Call/Link statements. Nested Stored Procedure calls have

been proven to be inefficient.

• External Stored Procedures will be defined as STAY RESIDENT YES and PROGRAM

TYPE SUB.

1.16.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining a Stored Procedure. DB2 default settings must not be

assumed for any of the following.

Parameter                     Instructions

IN, OUT, INOUT                Identifies the parameter as an input, output or input and
                              output parameter to the Stored Procedure.

DYNAMIC RESULT SETS integer   Specifies the number of query result sets that can be
                              returned. A value of zero indicates no result sets will be
                              returned.

EXTERNAL NAME procedure-name  Identifies the user-written program/code that implements
                              the Stored Procedure.

LANGUAGE                      Identifies the application programming language that the
                              Stored Procedure is coded in.

PARAMETER STYLE               Specifies the linkage convention used to pass parameters to
                              the Stored Procedure.

DBINFO                        Specifies whether specific information known by DB2 is
                              passed to the Stored Procedure when it is called.

COLLID                        Identifies the package collection that is used when the
                              Stored Procedure is called.

WLM ENVIRONMENT               Identifies the MVS Workload Manager (WLM) environment
                              that the Stored Procedure is to run in.

STAY RESIDENT YES             Specifies whether the Stored Procedure load module stays
                              resident in memory when the stored procedure terminates.

PROGRAM TYPE SUB              Identifies if the Stored Procedure runs as a Main or a
                              Subroutine.

SECURITY                      Identifies how the Stored Procedure interacts with RACF to
                              control access to non-DB2 resources. Only USER and DB2
                              are allowed.

COMMIT ON RETURN              Specifies if DB2 commits the transaction immediately on
                              return from the Stored Procedure.
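
An external stored procedure definition that states each of these parameters could be sketched as follows. The procedure name, load module, collection, and WLM environment names are hypothetical, and the choices of COBOL, PARAMETER STYLE GENERAL, NO DBINFO, SECURITY DB2, and COMMIT ON RETURN NO are assumptions made for the example rather than CMS requirements.

```sql
-- Hypothetical external stored procedure; all names are placeholders.
CREATE PROCEDURE XYZ_GET_BENE
  (IN  P_BENE_ID  INTEGER,
   OUT P_STUS_CD  CHAR(1),
   OUT P_SQLCODE  INTEGER)
  DYNAMIC RESULT SETS 0
  EXTERNAL NAME 'XYZSP001'
  LANGUAGE COBOL
  PARAMETER STYLE GENERAL
  NO DBINFO
  COLLID XYZ0D0
  WLM ENVIRONMENT XYZWLM01
  STAY RESIDENT YES          -- required by the Object Usage standard
  PROGRAM TYPE SUB           -- required by the Object Usage standard
  SECURITY DB2
  COMMIT ON RETURN NO;
```

With COMMIT ON RETURN NO, the commit remains the responsibility of the invoking module, which matches the Object Usage rule on close and commit statements.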

1.17 User Defined Functions

A User Defined Function (or UDF) is similar to a host language subprogram or

function that is invoked in an SQL statement.

UDFs will follow the rules used for Stored Procedures.

Two common CMS functions to convert Railroad Retirement Board numbers to and from
SSA HICN format have been defined:

CMS.CMS_RRBTOSSA (rrb CHAR(12)) RETURNS CHAR(11)

CMS.CMS_SSATORRB (ssa CHAR(11)) RETURNS CHAR(12)

1.18 User Defined Types

A Distinct Type (or User Defined Data Type) is based on a built-in database data

type, but is considered to be a separate and incompatible type for semantic

purposes. Example: One could define US_Dollar and Mexican_Peso data types.
Although both may be defined as DECIMAL(10,2), the business may want to
prevent columns of these types from being compared to one another.

There are currently no CMS standards for UDTs. If there is a need for a UDT please

contact the Central DBA group for guidance.
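
The currency example above can be sketched in DDL as follows. This is illustrative only and does not represent a CMS standard; the type, table, and column names are hypothetical.

```sql
-- Two distinct types over the same built-in type.
CREATE DISTINCT TYPE US_DOLLAR    AS DECIMAL(10,2) WITH COMPARISONS;
CREATE DISTINCT TYPE MEXICAN_PESO AS DECIMAL(10,2) WITH COMPARISONS;

-- Hypothetical table using both types.
CREATE TABLE XYZ_SALE
  (SALE_ID  INTEGER       NOT NULL,
   US_AMT   US_DOLLAR     NOT NULL,
   MX_AMT   MEXICAN_PESO  NOT NULL);

-- A direct comparison such as US_AMT > MX_AMT is rejected by DB2 because the
-- types are incompatible. Comparing them requires an explicit cast to the
-- source type, which makes the intent visible in the SQL:
SELECT SALE_ID
  FROM XYZ_SALE
 WHERE CAST(US_AMT AS DECIMAL(10,2)) > CAST(MX_AMT AS DECIMAL(10,2));
```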

1.19 Triggers

A Trigger is a set of SQL statements defined for a table that executes when a
specified SQL event occurs.

See

Standard Naming Convention.

1.19.1 Object Usage

STANDARD

• Single triggers combining multiple actions for a table should be used rather than

multiple triggers to reduce the trigger calling overhead.

• Triggers firing other triggers should be avoided.

1.19.2 Required Parameters (DDL Syntax)

STANDARD

The parameters listed below must be included in the DB2 data definition language

(DDL) when defining a Trigger. DB2 default settings must not be assumed for any

of the following.

Parameter                     Instructions

ON table-name                 Identifies the triggering table that the trigger is
                              associated with.

FOR EACH                      ROW - Specifies that DB2 executes the trigger for each row
                              of the triggering table that the triggering SQL operation
                              changes or inserts.
                              STATEMENT - Specifies that DB2 executes the triggered
                              action only once for the triggering SQL operation.

WHEN                          Identifies a condition that evaluates to true, false, or
                              unknown.

BEGIN ATOMIC                  Specifies the SQL that is executed for the triggered action.
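
A row-level trigger using each of these parameters might look like the sketch below. The trigger name and the XYZ_BENE and XYZ_BENE_HIST tables are hypothetical and assumed to exist. Because the trigger body contains a semicolon, the tool used to submit the statement generally needs a statement terminator other than the semicolon.

```sql
-- Hypothetical trigger: records a history row whenever the status code changes.
CREATE TRIGGER XYZ_BENE_TR1
  AFTER UPDATE OF STUS_CD ON XYZ_BENE
  REFERENCING OLD AS O NEW AS N
  FOR EACH ROW MODE DB2SQL
  WHEN (N.STUS_CD <> O.STUS_CD)
  BEGIN ATOMIC
    INSERT INTO XYZ_BENE_HIST (BENE_ID, OLD_STUS_CD, NEW_STUS_CD, CHG_TS)
      VALUES (N.BENE_ID, O.STUS_CD, N.STUS_CD, CURRENT TIMESTAMP);
  END
```

Keeping all actions for the table in this single trigger, rather than in several triggers, follows the Object Usage guidance on trigger calling overhead.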

1.20 LOBs

A large object is a data type used by DB2 to manage unstructured data. DB2

provides three built-in data types for storing large objects:

• Binary Large Objects, also known as BLOBs can contain up to 2GB of binary

data. Typical uses for BLOB data include photographs and pictures, audio and

sound clips, and video clips.

• Character Large Objects, also known as CLOBs can contain up to 2GB of single

byte character data. CLOBs are ideal for storing large text documents in a DB2

database.

• Double Byte Character Large Objects, also known as DBCLOBs can contain up to

1GB of double byte character data, for a total of 2GB. DBCLOBs are useful for

storing text documents in languages that require double byte characters, such

as Kanji.

BLOBs, CLOBs, and DBCLOBs are collectively referred to as LOBs. The actual data
storage limit for BLOBs, CLOBs, and DBCLOBs is 1 byte less than 2 gigabytes of
data.

ROWID column is required for implementing LOBs.

There are currently no written standards for LOBs. The CMS standard for

unstructured data is to use Content Manager. If there is a need for LOBs please

contact the Central DBA group for guidance.

1.21 Buffer Pools

Buffer pools are areas of virtual storage where DB2 temporarily stores pages of

table spaces or indexes. When an application program requests a row of a table,

the page containing the row is retrieved by DB2 and placed in a buffer. If the

requested page is already in a buffer, DB2 retrieves the page from the buffer,

significantly reducing the cost of retrieving the page.

1.21.1 Object Usage

STANDARD

Bufferpools are assigned and created by the Software Support Group with Central

DBA input.

The Bufferpool assignments are listed below:

BP1  Large Tables    (> 48000K or > 1000 tracks)
BP2  Large Indexes   (> 48000K or > 1000 tracks)
BP3  Medium Tables   (> 240K and < 48000K, or > 5 tracks and < 1000 tracks)
BP4  Medium Indexes  (> 240K and < 48000K, or > 5 tracks and < 1000 tracks)
BP5  Small Tables    (< 240K or < 5 tracks)
BP6  Small Indexes   (< 240K or < 5 tracks)

1.22 Capacity Planning

It is the responsibility of the Project GTL to schedule a meeting with EDCG,

Lockheed Martin and the Central DBA team to discuss and plan for impacts a new

system or major modifications to an existing system will have on the CMS computer

environments. This includes, but is not limited to, the Development, Test,
Performance Testing, and Production environments.

1.23 Space Requests

It is the responsibility of the Project GTL to request necessary space from EDCG.

The space required in each subsystem (all test environments and production) must

be delivered as part of the pre-development walk-through.

Application Programming

2.1 Data Access (SQL)

STANDARD

The following is a list of SQL usage standards to which all application programs

developed and maintained at CMS must adhere.

General SQL Usage

• Application programs must not include data definition language (DDL) or data

control language (DCL) SQL statements.

• SQL statements must include unqualified table and view names only. At no time

should a table name be referenced from an application program using the table

creator as a prefix.

• SQL columns must be accessed with elementary data items. At no time should
host structures be referenced in an SQL statement. Group level data items or
structures are permitted only to manipulate variable length data (e.g., VARCHAR)
or null indicators.

SQL Error Handling

• All application programs must check the value of SQLCODE immediately after

each executable SQL command is issued, to determine the outcome of the SQL

request. Depending on the requirements of the application program, appropriate

logic to handle all possible values of SQLCODE must be performed.

• All application programs must utilize a CMS Standard SQL Error Handling

Routine calling DSNTIAR and formatting output to SYSOUT to handle all

unexpected DB2 error conditions that are not accommodated within the

application.

• Standard Error Handling Routines

• A complete list of SQLCODEs can be located in the DB2 Messages and Codes or

DB2 Reference Summary manuals.

DECLARE TABLE

• Table Declarations for all tables and views accessed must be generated using

the DB2 DCLGEN command.

• Table declarations must be stored individually as members in an Endevor library.
Table declarations will be expanded in application source code at program
preparation time using the EXEC SQL INCLUDE statement.

HOST VARIABLES

• Host Variable Declarations must be defined as elementary data items in the
Working-Storage section in Cobol programs. Group level data items are permitted
as host variables only to manipulate variable length data (e.g., VARCHAR) or
null indicators.

DECLARE CURSOR

• DECLARE CURSOR statements must be defined in the Working-Storage section

in Cobol programs.

• Since cursor definitions include a valid SQL SELECT statement, DECLARE

CURSOR statements must also adhere to the SELECT statement standards.

• Cursor declarations for read-only cursors must include the FOR FETCH ONLY
clause to make use of block fetching (improved I/O).

• Cursor declarations for cursors which will retrieve a small number of rows, must

include the OPTIMIZE FOR 1 ROWS clause to avoid sequential prefetch

processing (increased I/O).
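
The two cursor clauses above can be sketched as follows. The cursor names, table, columns, and host variables are hypothetical; in a Cobol program each statement would be wrapped in EXEC SQL ... END-EXEC and placed in the Working-Storage section.

```sql
-- Read-only cursor: FOR FETCH ONLY allows DB2 to use block fetching.
DECLARE BENE_CSR CURSOR FOR
  SELECT BENE_ID, BENE_LAST_NAME
    FROM XYZ_BENE
   WHERE STUS_CD = :WS-STUS-CD
     FOR FETCH ONLY;

-- Cursor expected to return only a few rows: OPTIMIZE FOR 1 ROWS
-- discourages sequential prefetch.
DECLARE CLM_CSR CURSOR FOR
  SELECT CLM_NUM, CLM_DT
    FROM XYZ_CLM
   WHERE BENE_ID = :WS-BENE-ID
     FOR FETCH ONLY
     OPTIMIZE FOR 1 ROWS;
```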

SELECT

• SELECT statements must include an explicit list of the column names and
expressions being retrieved from DB2. Asterisks (*) are only permitted in SELECT
statements under the following circumstances:

• When using the SQL COUNT column function to determine a number of rows
(SELECT COUNT(*) FROM table-name ...). When the number of rows is not important,
(SELECT 1 INTO :WS-COUNT FROM table-name WHERE ...) with a check for SQLCODE -811
(more than one row found), or a similar correlated select, is preferred for
existence checking.

• Old code with a subselect to determine the existence of a condition (... WHERE
EXISTS (SELECT * FROM table-name ...)). Using (... WHERE EXISTS (SELECT 1
FROM table-name ...)) is preferred.
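
The preferred existence checks might be coded along these lines. Table, column, and host variable names are hypothetical, and the SQLCODE handling is summarized in comments rather than shown in host-language logic.

```sql
-- Existence check without counting rows.
-- SQLCODE 0    : exactly one matching row exists
-- SQLCODE +100 : no matching row exists
-- SQLCODE -811 : more than one matching row exists (still "exists")
SELECT 1
  INTO :WS-COUNT
  FROM XYZ_CLM
 WHERE BENE_ID = :WS-BENE-ID;

-- Correlated existence test using SELECT 1 instead of SELECT *.
SELECT B.BENE_ID, B.BENE_LAST_NAME
  FROM XYZ_BENE B
 WHERE EXISTS (SELECT 1
                 FROM XYZ_CLM C
                WHERE C.BENE_ID = B.BENE_ID);
```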

INSERT

• INSERT statements must use the format of a column list with corresponding
host variables for each column:

INSERT INTO tablename (col1, col2, col3, ...)
VALUES (:hvar1, :hvar2, :hvar3, ...)

LOCK TABLE

• Due to the adverse effect on concurrent usage of DB2 resources, the use of the
LOCK TABLE statement is limited. Use of the LOCK TABLE statement must be
approved by the Central DBA staff when it is required to achieve improved
performance or data integrity.

CLOSE CURSOR

• All application programs designed to process SQL cursors must execute a CLOSE

CURSOR statement for every corresponding OPEN CURSOR.

2.2 Application Recovery

To achieve a high level of data recoverability and application concurrency, all DB2

application programs updating data, (INSERT, DELETE, UPDATE), will incorporate

logical unit of work processing. Application logic will clearly identify begin and end

points for sets of work which must be processed as a whole. Commit and rollback

commands will be incorporated where appropriate.

All batch DB2 application programs will include abend/restart logic which will

minimize the impact to DB2 resources as well as the allotted batch processing

window in the event of an application failure.

Quickstart/MVS from BMC is the standard development tool that must be used at

CMS in order to comply with this standard.

2.3 Program Preparation

2.3.1 DB2 Package

All DB2 application programs developed and maintained at CMS are bound to DB2

as packages and are included in DB2 plans using the PKLIST parameter in the BIND

PLAN statement. Application programs are normally bound as part of the Endevor

procedures.

All DB2 packages must be bound using an ISOLATION level of 'CS' (cursor

stability). Specifying any other isolation level, such as repeatable read (RR) or

uncommitted read (UR) requires approval of the Central DBA staff. EXPLAIN(YES)

is required.

The following is an example of BIND PACKAGE syntax which should be used to

create DB2 packages at CMS:

BIND PACKAGE(XYZ0D0) -
     MEMBER(XYZPROG1) -
     LIBRARY('ASCM.#ENDVOUT.XYZ.XYZP0.CODDBRM') -
     OWNER(DBXYZ0D0) -
     QUALIFIER(DBXYZ0D0) -
     VALIDATE(BIND) -
     EXPLAIN(YES) -
     RELEASE(COMMIT) -
     ISOLATION(CS) -
     ACTION(REPLACE)

2.3.2 DB2 Plan

All DB2 applications developed and maintained at CMS will implement DB2 plans

which contain a list of DB2 packages only. Use of the MEMBER parameter in the

BIND PLAN statement to include DBRM members is prohibited.

All DB2 plans must be bound using an ISOLATION level of 'CS' (cursor stability).

Specifying any other isolation level, such as repeatable read (RR) or uncommitted

read (UR), requires approval of the Central DBA staff.
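The plan rules in this section (packages only through PKLIST, no MEMBER parameter, isolation level CS) lend themselves to the same kind of mechanical check. The Python sketch below is an illustration only: check_bind_plan is a hypothetical helper, and the plan name XYZB0D0 and collection XYZ0D0 in the sample statements are made-up values.

```python
import re

def check_bind_plan(text):
    """Return a list of violations of the BIND PLAN rules in this section."""
    pairs = re.findall(r"([A-Za-z]+)\s*\(([^()]*)\)", text)
    options = {keyword.upper(): value.strip() for keyword, value in pairs}
    problems = []
    if "MEMBER" in options:
        problems.append("MEMBER is prohibited; include packages with PKLIST")
    if not options.get("PKLIST"):
        problems.append("PKLIST is required")
    if options.get("ISOLATION", "").upper() != "CS":
        problems.append(
            "ISOLATION(CS) is required unless the Central DBA staff "
            "approves another level"
        )
    return problems

# Hypothetical statements used only to exercise the check.
GOOD_PLAN = """\
BIND PLAN(XYZB0D0) -
PKLIST(XYZ0D0.*) -
ISOLATION(CS) -
ACTION(REPLACE)
"""
BAD_PLAN = "BIND PLAN(XYZB0D0) MEMBER(XYZPROG1) ISOLATION(RR)"

print(check_bind_plan(GOOD_PLAN))
print(check_bind_plan(BAD_PLAN))
```

The first statement passes; the second is flagged for using MEMBER, for lacking PKLIST, and for the isolation level.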

2.3.3 Explains

Aliases to plan tables for each database qualifier (e.g., DBXYZ0D1) will be created by

the Central DBA group. EXPLAIN(YES) is required on all binds to allow access paths

to be reviewed for performance.

2.4 DB2 Development Tools

SPUFI queries may not use repeatable read (RR). This has been restricted by not

allowing access to the RR plan.

Ad hoc access should be limited to Warehouse tables. Ad hoc access to Operational

tables requires special approval from the owning GTL.

The DB2 sample programs should be called with non-version plan names: DSNTEP2,

DSNTEP4, DSNTIAD, and DSNTIAUL.

Naming Standards

3.1 Conventions for Objects and Datasets

This section discusses the standard naming conventions for DB2 objects and

datasets. These conventions were designed to meet the following objectives:

• Guarantee uniqueness of DB2 object names within a DB2 subsystem.

• Provide a uniform naming structure for DB2 objects of similar types.

• Simplify physical database design decisions regarding naming strategies.

• Provide the ability to visually group DB2 objects by the application and/or subject

area that the objects were designed to support.

The following naming standards apply to any DB2 object (database,

tablespace, table, view, package, etc.) used to hold or maintain user

data, as well as external user objects (partitioned datasets, production

job names, etc.) that will be used in conjunction with DB2 as part of

standard application development and system operation procedures.

3.1.1 Standard Naming Format for DB2 Objects

All DB2 objects will be named according to the standard formats listed in the

following table. All object names must begin with an alphabetic character and

cannot begin with DSN, SQL, or DSQ.
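The general rule above, together with a few of the fixed-length formats listed in the table that follows, can be expressed as simple patterns. The Python sketch below is illustrative only. The function names are hypothetical, and the character classes are assumptions that the standard does not spell out: A and E are taken to be uppercase letters, and x, n, and t are taken to be digits, which is consistent with the examples given in the table.

```python
import re

RESERVED_PREFIXES = ("DSN", "SQL", "DSQ")

# Assumed character classes (not stated in the standard):
# A, E = uppercase letter; x, n, t = digit.
FORMATS = {
    "database": r"[A-Z]{3}\d[A-Z]\d",      # AAAxEn
    "collection": r"[A-Z]{3}\d[A-Z]\d",    # AAAxEn
    "plan": r"[A-Z]{3}[CB]\d[A-Z]\d",      # AAACxEn, C = CICS, B = non-CICS
    "index": r"[A-Z]{3}\d{3}\d{2}",        # AAAtttnn
    "foreign_key": r"[A-Z]{3}\d{3}F\d",    # AAAtttFn
    "primary_key": r"[A-Z]{3}\d{3}PK",     # AAAtttPK
}

def follows_general_rule(name):
    """True if the name starts with a letter and not with DSN, SQL, or DSQ."""
    first = name[:1]
    starts_with_letter = first.isascii() and first.isalpha()
    return starts_with_letter and not name.upper().startswith(RESERVED_PREFIXES)

def is_valid_name(object_type, name):
    """True if the name obeys the general rule and the format for its type."""
    pattern = FORMATS[object_type]
    return follows_general_rule(name) and re.fullmatch(pattern, name) is not None

print(is_valid_name("database", "XYZ0T0"))
print(is_valid_name("plan", "MSIB0V0"))
print(follows_general_rule("DSNTEP2"))
```

Variable-length formats such as column, table, and sequence names depend on class and modifying words approved by the data administration group, so they are not covered by this sketch.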

Object

Format

Example

Required Attributes

Alias

creator.aliasname

DBXYZOT1.NPS_PROV_INFO

• Alias names will always match the name of the table to which the

alias pertains

Auxiliary Index

DBAAAxEn.AISSSNNN

DBNLR0D0.AI001001

• AAAxEn and DBAAAxEn follow the standard naming conventions

of Table Owner and Tablespace, respectively

• AI for Auxiliary index

• SSS is the Tablespace numeral, taken from characters 4, 5, and 6

• NNN is a running number for that particular table. For example, a

partitioned Tablespace having 200 parts and 2 CLOB columns can

have NNN from 1 to 200 for column 1 and 401 to 600 for

column 2.

Auxiliary Table

DBAAAxEn.ATSSSNNN

DBNLR0D0.AT001001

• AAAxEn and DBAAAxEn follow the standard naming conventions

of Table Owner and Tablespace, respectively

• AT for Auxiliary table

• SSS is the Tablespace numeral, taken from characters 4, 5, and 6

• NNN is a running number for that particular table. For example, a

partitioned Tablespace having 200 parts and 2 CLOB columns can

have NNN from 1 to 200 for column 1 and 401 to 600 for

column 2.

Auxiliary Tablespace

AAAxEn.ASSSSNNN

NLR0D0.AS001001

• AAAxEn and DBAAAxEn follow the standard naming conventions

of Table Owner and Tablespace, respectively

• AS for Auxiliary tablespace

• SSS is the Tablespace numeral, taken from characters 4, 5, and 6

• NNN is a running number for that particular table. For example, a

partitioned Tablespace having 200 parts and 2 CLOB columns can

have NNN from 1 to 200 for column 1 and 401 to 600 for

column 2.

Column

bb..bb

PROV_ID

• 30 character name (maximum),

• Applications creating DCLGENs should use a shorter limit to keep

the generated variable length within 30 characters when the DCLGEN prefix

and indicator suffix are added.

• The name should be derived from the business name identified

during the business/data analysis process;

• The name must include acceptable class and modifying words as

defined by the data administration group.

Collection

AAAxEn

XYZ0D0

• 6 character name

• The first three characters (AAA) are the Application Identifier

• The fourth char numbers multiple collections

• The last chars are the environment and version

• Plans are always created by the Central DBA

Database

AAAxEn

XYZ0T0

• 6 character name

• the first three characters indicate the application responsible for

the data; this abbreviation must be approved by APCSS and the

Central DBAs

• The fourth char numbers multiple databases

• The last chars are the environment and version

• All Databases are defined by the Central DBA

DBRM

AAAbbbbb

NPSPROG1

• 8 character name (maximum), (three-character Application

Identifier & five-char description);

• DBRM name must be identical to the source module name of the

corresponding application program.

DCLGEN

AAAttt for tables

AAAVttt for views

NPS001

NPSV001

• 6-7 character library member name

• for tables, the DCLGEN member name will correspond to the

name of the corresponding tablespace.

• for approved views, the DCLGEN member name should begin

with a three-character Application Identifier, a one-position

alphabetic character ('V' for 'view'), and a three-position

sequential number uniquely identifying the view member name

Foreign Key

AAAtttFn

NPS001F1

• 8 character name;

• three-character Application Identifier, a three-position sequential

number indicating the corresponding tablespace number, a one-

position fixed character ("F"), and a one-position sequential

number indicating the specific foreign key for the corresponding

table/tablespace;

• must be unique within the corresponding database

Index

AAAtttnn

NPS00201

• 8 character name;

• three-character Application Identifier, a three-position sequential

number indicating the corresponding tablespace number, and a

two-position sequential number indicating the specific index for

the corresponding table/tablespace;

• must be unique within database

Package

AAAbbbbb

NPSPROG1

• 8 character name (maximum), (three-character Application

Identifier & five-char description); package name must be

identical to the source module name of the corresponding

application program.

Plan

AAACxEn

MSIB0V0

• CMS utilizes two plans for each application: one for online

programs and one for batch programs

• 7 character name

• The first three characters (AAA) are the Application Identifier

• The fourth is either a ‘C’ for CICS or a ‘B’ for non-CICS

• The fifth char numbers multiple plans

• The last chars are the environment and version

• Plans are always created by the Central DBA

Primary Key

AAAtttPK

NPS001PK

• 8 character name;

• three-character Application Identifier, a three-position sequential

number indicating the corresponding tablespace number, and a two-

position fixed string ("PK")

Program

AAAbbbbb

NPSPROG1

• 8 character name (maximum),

• three-character Application Identifier & five-char descriptive

name

• use existing standards (refer to CMS Data Center Users' Guide)

Schema

See Collection

• The name is the same as the collection used for the application

Sequence Object

AAA_bbbbbb_SQNC

NPS_TRANS_ID_SQNC

• 30 character name (maximum),

• Prefixed with three character Application identifier

• Suffixed with _SQNC

• bbbbbb is a descriptive name following DA standards. This will

usually be the column name the sequence is being used to

populate.

Storage Group

STOGRPbb

STOGRPT1

• STOGRPT1 is the standard storage group for all applications in all

the subsystems.

• Storage groups are defined by the Central DBA staff

Stored Procedure

AAASPbbb_aaaaaaaaa

MBDSP001_BENE_MATCH

• 30 character name (maximum),

• Three-character application identifier,

• a two position fixed character “SP”,

• a three position alphanumeric program id,

• for external procedures, AAASPbbb will match the external

program name; for internal SQL procedures, it will match the

member name under which the definition is stored in Endevor

• a functional description; abbreviations used will match the CMS

DA dictionary. The description is optional for existing stored

procedures.

Table

AAA_bb.bbb

NPS_PROV_INFO